Python Requests/BS4 Beginners Series Part 1: How To Build Our First Scraper

When it comes to web scraping, Python is the go-to language thanks to its highly active community, great web scraping libraries, and popularity within the data science community.

There are lots of articles online showing you how to make your first basic Python scraper. However, very few walk you through the full process of building a production-ready Python scraper.

To address this, we are doing a 6-Part Python Requests/BeautifulSoup Beginner Series, where we're going to build a Python scraping project end-to-end: from building the scrapers to deploying them on a server and running them every day.

Python Requests/BeautifulSoup 6-Part Beginner Series

- Part 1: Basic Python Requests/BeautifulSoup Scraper - We'll go over the basics of scraping with Python and build our first Python scraper.

- Part 2: Cleaning Dirty Data & Dealing With Edge Cases - Web data can be messy, unstructured, and full of edge cases. In this tutorial we'll make our scraper robust to these edge cases, using data classes and data cleaning pipelines.

- Part 3: Storing Data in AWS S3, MySQL & Postgres DBs - There are many different ways to store the data we scrape, from databases and CSV files to JSON files and S3 buckets. We'll explore several of them, discuss their pros and cons, and explain in which situations you would use each one.

- Part 4: Managing Retries & Concurrency - Make our scraper more robust and scalable by handling failed requests and using concurrency.

- Part 5: Faking User-Agents & Browser Headers - Make our scraper production-ready by using fake user agents and browser headers so that its requests look more like those of real users.

- Part 6: Using Proxies To Avoid Getting Blocked - Explore how to use proxies to bypass anti-bot systems by hiding your real IP address and location.

For this beginner series, we're going to use one of the simplest scraping architectures: a single scraper that is given a start URL, crawls the site, parses and cleans the data from the HTML responses, and stores the data, all in the same process.

This architecture is suitable for the majority of hobby and small scraping projects. However, if you are scraping business-critical data at larger scales, you would want to use a different scraping architecture.

The code for this project is available on GitHub here!


Part 1: Basic Python Scraper

In this tutorial, Part 1: Basic Python Scraper, we're going to cover:

- Our Python Web Scraping Stack

- How to Setup Our Python Environment

- Creating Our Scraper Project

- Laying Out Our Python Scraper

- Retrieving The HTML From Website

- Extracting Data From HTML

- Saving Data to CSV

- How to Navigate Through Pages

For this series, we will be scraping the products from Chocolate.co.uk, as it is a good example of how to approach scraping an e-commerce store. Plus, who doesn't like chocolate!

Our Python Web Scraping Stack

When it comes to web scraping stacks there are two key components:

- HTTP Client: sends a request to the website to retrieve its HTML/JSON response.

- Parsing Library: extracts the data we want from the web page.

Due to the popularity of Python for web scraping, we have numerous options for both.

We can use Python Requests, Python HTTPX or Python aiohttp as HTTP clients.

And BeautifulSoup, Lxml, Parsel, etc. as parsing libraries.

Or we could use Python web scraping libraries/frameworks that combine both HTTP requests and parsing like Scrapy, Python Selenium and Requests-HTML.

Each stack has its own pros and cons. However, for the purposes of this beginner series, we will use the Python Requests/BeautifulSoup stack, as it is by far the most common web scraping stack used by Python developers.

Using the Python Requests/BeautifulSoup stack, you can easily build highly scalable scrapers that retrieve a page's HTML, parse and process the data, and store it in the file format and location of your choice.

How to Setup Our Python Environment

With the intro out of the way, let's start developing our scraper. First things first, we need to set up our Python environment.

Step 1 - Setup your Python Environment

To avoid version conflicts down the road, it is best practice to create a separate virtual environment for each of your Python projects. This means that any packages you install for a project are kept separate from other projects, so you don't inadvertently end up breaking other projects.

Depending on the operating system of your machine these commands will be slightly different.

MacOS or Linux

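Set up a virtual environment on macOS or any Linux distro.

On Debian/Ubuntu-based distros, first refresh the package index so apt installs the latest available versions. Installing tree is optional; it lets you print your project's folder structure.

$ sudo apt-get update

$ sudo apt install tree

Then install python3-venv if you haven't done so already:

$ sudo apt install -y python3-venv

On macOS, and on Linux distros that don't use apt, the venv module normally ships with Python 3, so you can skip these apt commands.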

Next, we will create our Python virtual environment.

$ python3 -m venv venv

$ source venv/bin/activate

Windows

Set up a virtual environment on Windows.

Install virtualenv in your Windows command shell, PowerShell, or whichever terminal you are using.

pip install virtualenv

Navigate to the folder where you want to create the virtual environment, and run virtualenv.

virtualenv venv

Activate the virtual environment. In Command Prompt, run:

venv\Scripts\activate.bat

In PowerShell, run:

venv\Scripts\Activate.ps1
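If you're unsure whether the activation worked, one quick check is to compare Python's sys.prefix with sys.base_prefix: inside a virtual environment created with venv (or a recent version of virtualenv), the two point to different folders. This is a minimal sketch; the in_virtualenv helper name is our own, and very old virtualenv releases used a different mechanism, so treat a False result from those with caution.

```python
import sys


def in_virtualenv() -> bool:
    """Hypothetical helper: True when running inside a venv/virtualenv environment."""
    # In a virtual environment, sys.prefix points at the environment folder,
    # while sys.base_prefix still points at the Python installation it was created from.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Interpreter prefix:", sys.prefix)
```

Run it with python from the same terminal where you activated venv; if activation worked, it should report True.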

Step 2 - Install Python Requests & BeautifulSoup

Finally, we will install Python Requests and BeautifulSoup in our virtual environment.

pip install requests beautifulsoup4
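To confirm both packages landed in the active environment, you can ask Python for their installed versions. Below is a small sketch using the standard library's importlib.metadata (Python 3.8+). Note that it looks packages up by their distribution name, beautifulsoup4, not by the bs4 import name. The installed_version helper is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Hypothetical helper: return the installed version string of a package, or None."""
    try:
        # version() expects the distribution name used with pip install
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    for package in ("requests", "beautifulsoup4"):
        print(package, "->", installed_version(package) or "not installed")
```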

Creating Our Scraper Project

Now that we have our environment set up, we can get on to the fun stuff: building our first Python scraper!

The first thing we need to do is create our scraper script. This script will hold all the code for our scraper.

To do this we will create a new file called chocolate_scraper.py in our project folder.

ChocolateScraper

└── chocolate_scraper.py

This chocolate_scraper.py file will contain all the code we will use to scrape the Chocolate.co.uk website.

Once it's written, we will run this scraper by entering the following into the command line:

python chocolate_scraper.py

Laying Out Our Python Scraper

Now that we have our libraries installed and our chocolate_scraper.py file created, let's lay out our scraper.

import requests
from bs4 import BeautifulSoup

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []

## Scraping Function
def start_scrape():

    ## Loop Through List of URLs
    for url in list_of_urls:

        ## Send Request

        ## Parse Data

        ## Add To Data Output

        pass


if __name__ == "__main__":
    start_scrape()
    print(scraped_data)

Let's walk through what we have just defined:

- Imported both Python Requests and BeautifulSoup into our script so we can use them to retrieve the HTML pages and parse the data from them.

- Defined a list_of_urls list containing the URLs we want to scrape.

- Defined a scraped_data list where we will store the scraped data.

- Defined a start_scrape function, which is where we will write our scraper.

- Created an if __name__ == "__main__": block, which kicks off our scraper when we run the script and then prints out the scraped data.

If we run this script now using python chocolate_scraper.py, we should get an empty list as the output.

python chocolate_scraper.py

## []

Retrieving The HTML From Website

The first step every web scraper must do is retrieve the HTML/JSON response from the target website so that it can extract the data from the response.

We will use the Python Requests library to do this, so let's update our scraper to send a request to our target URLs.

import requests
from bs4 import BeautifulSoup

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []

## Scraping Function
def start_scrape():

    ## Loop Through List of URLs
    for url in list_of_urls:

        ## Send Request
        response = requests.get(url)

        if response.status_code == 200:

            ## Parse Data
            print(response.text)

            ## Add To Data Output
            pass


if __name__ == "__main__":
    start_scrape()
    print(scraped_data)

Here you will see that we added 3 lines of code:

- response = requests.get(url) sends an HTTP request to the URL and returns the response.

- if response.status_code == 200: checks that the response is valid (200 status code) before we try to parse the data.

- print(response.text) prints the response for debugging purposes, so we can make sure we are getting the correct response.

Now when we run the script we will get something like this:

<!doctype html><html class="no-js" lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, height=device-height, minimum-scale=1.0, maximum-scale=1.0">

<meta name="theme-color" content="#682464">

<title>Products</title><link rel="canonical" href="https://www.chocolate.co.uk/collections/all"><link rel="preconnect" href="https://cdn.shopify.com">

<link rel="dns-prefetch" href="https://productreviews.shopifycdn.com">

<link rel="dns-prefetch" href="https://www.google-analytics.com"><link rel="preconnect" href="https://fonts.shopifycdn.com" crossorigin><link rel="preload" as="style" href="//cdn.shopify.com/s/files/1/1991/9591/t/60/assets/theme.css?v=88009966438304226991661266159">

<link rel="preload" as="script" href="//cdn.shopify.com/s/files/1/1991/9591/t/60/assets/vendor.js?v=31715688253868339281661185416">

<link rel="preload" as="script" href="//cdn.shopify.com/s/files/1/1991/9591/t/60/assets/theme.js?v=165761096224975728111661185416"><meta property="og:type" content="website">

<meta property="og:title" content="Products"><meta property="og:image" content="http://cdn.shopify.com/s/files/1/1991/9591/files/Chocolate_Logo1_White-01-400-400_c4b78d19-83c5-4be0-8e5f-5be1eefa9386.png?v=1637350942">

<meta property="og:image:secure_url" content="https://cdn.shopify.com/s/files/1/1991/9591/files/Chocolate_Logo1_White-01-400-400_c4b78d19-83c5-4be0-8e5f-5be1eefa9386.png?v=1637350942">

<meta property="og:image:width" content="1200">

...

...
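The if response.status_code == 200: check treats every other status code the same way and silently skips the page. Status codes carry more information than that, and Part 4 of this series builds on it with retries. Here is a rough sketch of one way to categorise them. The next_action helper and its three categories are our own assumptions, not part of Requests; the standard library's http.HTTPStatus enum is used only to print human-readable names.

```python
from http import HTTPStatus


def next_action(status_code: int) -> str:
    """Hypothetical helper: decide what a scraper might do with a response status."""
    if 200 <= status_code < 300:
        return "parse"  # success: safe to hand the body to BeautifulSoup
    if status_code == HTTPStatus.TOO_MANY_REQUESTS or 500 <= status_code < 600:
        return "retry"  # rate limited or server error: often worth retrying later
    return "skip"  # e.g. 404 Not Found: retrying will not help


if __name__ == "__main__":
    for code in (200, 404, 429, 503):
        print(code, HTTPStatus(code).phrase, "->", next_action(code))
```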

Extracting Data From HTML

Now that our scraper is successfully retrieving HTML pages from the website, we need to update our scraper to extract the data we want.

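We will do this using the BeautifulSoup library and CSS selectors. (XPath selectors are another option, but BeautifulSoup doesn't support XPath itself; you would need a library such as lxml or Parsel for that.)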

XPath and CSS selectors are like little maps our scraper will use to navigate the DOM tree and find the location of the data we require.

First things first though, we need to load the HTML response into BeautifulSoup so we can navigate the DOM.

if response.status_code == 200:
    soup = BeautifulSoup(response.content, 'html.parser')

Find Product CSS Selectors

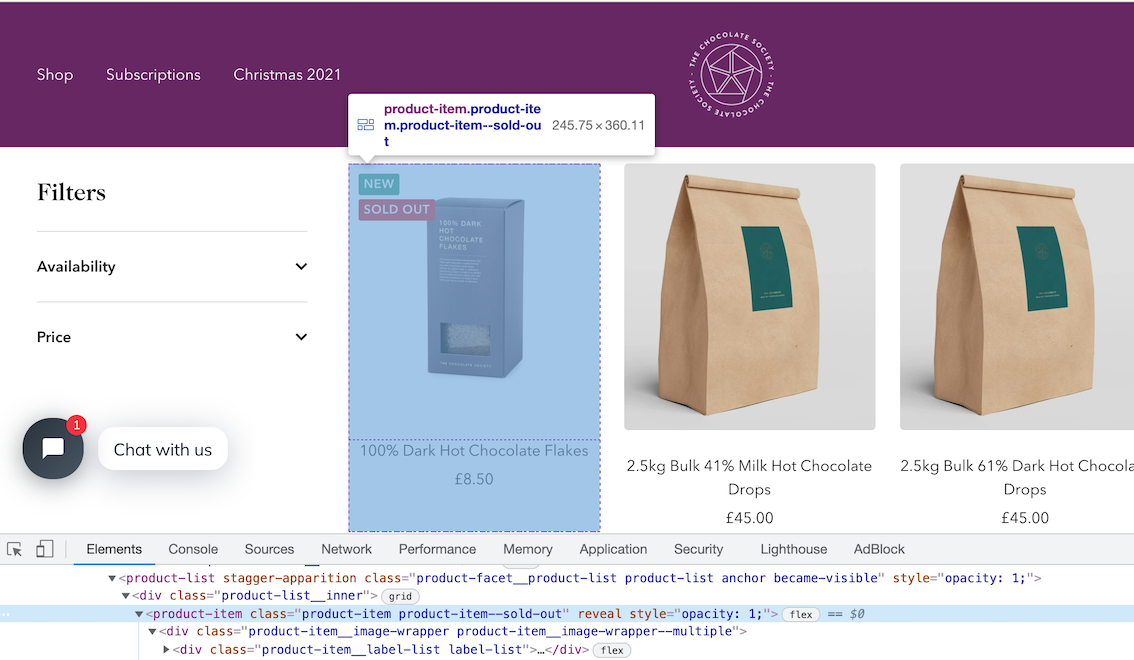

To find the correct CSS selectors for the product details, we will first open the page in our browser's DevTools.

Open the website, then open the developer tools (right-click on the page and click Inspect).

Using the inspector, hover over the items and look at the IDs and classes on the individual products.

In this case, we can see that each box of chocolates is wrapped in its own custom HTML element called product-item, so we can simply use that tag name as the selector for our products (see the image above).

soup.select('product-item')

We can see that it has found all the elements that match this selector.

[

<product-item class="product-item product-item--sold-out" reveal=""><div class="product-item__image-wrapper product-item__image-wrapper--multiple"><div class="product-item__label-list label-list"><span class="label label--custom">New</span><span class="label label--subdued">Sold out</span></div><a class="product-item__aspect-ratio aspect-ratio aspect-ratio--square" href="/products/100-dark-hot-chocolate-flakes" style="padding-bottom: 100.0%; --aspect-ratio: 1.0">... </product-item>,

<product-item class="product-item product-item--sold-out" reveal=""><div class="product-item__image-wrapper product-item__image-wrapper--multiple"><div class="product-item__label-list label-list"><span class="label label--custom">New</span><span class="label label--subdued">Sold out</span></div><a class="product-item__aspect-ratio aspect-ratio aspect-ratio--square" href="/products/100-dark-hot-chocolate-flakes" style="padding-bottom: 100.0%; --aspect-ratio: 1.0">... </product-item>,

<product-item class="product-item product-item--sold-out" reveal=""><div class="product-item__image-wrapper product-item__image-wrapper--multiple"><div class="product-item__label-list label-list"><span class="label label--custom">New</span><span class="label label--subdued">Sold out</span></div><a class="product-item__aspect-ratio aspect-ratio aspect-ratio--square" href="/products/100-dark-hot-chocolate-flakes" style="padding-bottom: 100.0%; --aspect-ratio: 1.0">... </product-item>,

...

]

Get First Product

To get just the first product, we take the first element of the list using [0]:

soup.select('product-item')[0]

This returns all the HTML in this node of the DOM tree.

'<product-item class="product-item product-item--sold-out" reveal><div class="product-item__image-wrapper product-item__image-wrapper--multiple"><div class="product-item__label-list label-list"><span class="label label--custom">New</span><span class="label label--subdued">Sold out</span></div><a href="/products/100-dark-hot-chocolate-flakes" class="product-item__aspect-ratio aspect-ratio " style="padding-bottom: 100.0%; --aspect-ratio: 1.0">\n...'

Get All Products

Now that we have found the DOM node that contains each product item, we will select all of them, save them into a products variable, and then loop through the items to extract the data we need.

We can do this with the following command:

products = soup.select('product-item')

The products variable is now a list of all the products on the page.

By checking the length of the products variable, we can see how many products there are:

len(products)

## --> 24

Extract Product Details

Now let's extract the name, price and URL of each product from the list of products.

The products variable is a list of products. When we update our scraper code we will loop through this list; however, to find the correct selectors, we will first test the CSS selectors on the first element of the list, products[0].

Single Product - Get single product.

product = products[0]

Name - The product name can be found with:

product.select('a.product-item-meta__title')[0].get_text()

## --> '100% Dark Hot Chocolate Flakes'

Price - The product price can be found with:

product.select('span.price')[0].get_text()

## --> '\nSale price£9.95'

You can see that the data returned for the price has some extra text.

To remove the extra text from our price we can use the .replace() method. The replace method can be useful when we need to clean up data.

Here we're going to replace the \nSale price£ text with an empty string '':

product.select('span.price')[0].get_text().replace('\nSale price£', '')

## --> '9.95'
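Chaining .replace() only works while every price is formatted exactly like '\nSale price£9.95'; as the output later in this tutorial shows, a price such as '\nSale priceFrom £1.50\n' slips through. A slightly more forgiving alternative is to pull the number out with a regular expression and store it as a Decimal. The sketch below assumes prices are written as a £ sign followed by digits with no thousands separators. The parse_price helper is hypothetical; Part 2 covers data cleaning properly.

```python
import re
from decimal import Decimal
from typing import Optional

# A "£", optional whitespace, then digits with an optional 1-2 digit decimal part.
# Assumption: prices never contain thousands separators such as "£1,250.00".
PRICE_PATTERN = re.compile(r"£\s*(\d+(?:\.\d{1,2})?)")


def parse_price(raw_price: str) -> Optional[Decimal]:
    """Hypothetical helper: return the first £ amount found in raw_price, or None."""
    match = PRICE_PATTERN.search(raw_price)
    if match is None:
        return None
    # Decimal avoids the rounding surprises of float for money values.
    return Decimal(match.group(1))


if __name__ == "__main__":
    print(parse_price('\nSale price£9.95'))
    print(parse_price('\nSale priceFrom £1.50\n'))
```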

Product URL - Next, let's see how we can extract the URL of each individual product. BeautifulSoup lets us read an element's attributes with dictionary-style access, so we can append ['href'] to product.select('div.product-item-meta a')[0]:

product.select('div.product-item-meta a')[0]['href']

## --> '/products/100-dark-hot-chocolate-flakes'
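The href is a relative URL, which is why the scraper below prefixes it with 'https://www.chocolate.co.uk'. That works for links that start with /. If you'd rather not rely on that, the standard library's urllib.parse.urljoin resolves any href against the page it was found on. This includes protocol-relative links like the //cdn.shopify.com/... ones in the HTML above and links that are already absolute. A minimal sketch; the absolute_url wrapper is hypothetical.

```python
from urllib.parse import urljoin

PAGE_URL = "https://www.chocolate.co.uk/collections/all"


def absolute_url(page_url: str, href: str) -> str:
    """Hypothetical helper: resolve an href relative to the page it came from."""
    # An href starting with "/" replaces the page's whole path, one starting
    # with "//" keeps only the scheme, and a full URL is returned unchanged.
    return urljoin(page_url, href)


if __name__ == "__main__":
    print(absolute_url(PAGE_URL, "/products/100-dark-hot-chocolate-flakes"))
```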

Updated Scraper

Now that we've found the correct CSS selectors, let's update our scraper.

Our updated scraper code should look like this:

import requests
from bs4 import BeautifulSoup

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []

## Scraping Function
def start_scrape():

    ## Loop Through List of URLs
    for url in list_of_urls:

        ## Send Request
        response = requests.get(url)

        if response.status_code == 200:

            ## Parse Data
            soup = BeautifulSoup(response.content, 'html.parser')
            products = soup.select('product-item')
            for product in products:
                name = product.select('a.product-item-meta__title')[0].get_text()
                price = product.select('span.price')[0].get_text().replace('\nSale price£', '')
                # Named product_url so it doesn't shadow the outer loop's url variable
                product_url = product.select('div.product-item-meta a')[0]['href']

                ## Add To Data Output
                scraped_data.append({
                    'name': name,
                    'price': price,
                    'url': 'https://www.chocolate.co.uk' + product_url
                })


if __name__ == "__main__":
    start_scrape()
    print(scraped_data)

Here, our scraper does the following steps:

- Makes a request to 'https://www.chocolate.co.uk/collections/all'.

- When it gets a response, it extracts all the products from the page using products = soup.select('product-item').

- Loops through each product and extracts the name, price and URL using the CSS selectors we created.

- Adds the parsed data to the scraped_data list so it can be stored in a CSV file, JSON file, database, etc. later.

When we run the scraper now using python chocolate_scraper.py, we should get an output like this:

[{'name': '100% Dark Hot Chocolate Flakes',

'price': '9.95',

'url': 'https://www.chocolate.co.uk/products/100-dark-hot-chocolate-flakes'},

{'name': '2.5kg Bulk 41% Milk Hot Chocolate Drops',

'price': '45.00',

'url': 'https://www.chocolate.co.uk/products/2-5kg-bulk-of-our-41-milk-hot-chocolate-drops'},

{'name': '2.5kg Bulk 61% Dark Hot Chocolate Drops',

'price': '45.00',

'url': 'https://www.chocolate.co.uk/products/2-5kg-of-our-best-selling-61-dark-hot-chocolate-drops'},

{'name': '41% Milk Hot Chocolate Drops',

'price': '8.75',

'url': 'https://www.chocolate.co.uk/products/41-colombian-milk-hot-chocolate-drops'},

{'name': '61% Dark Hot Chocolate Drops',

'price': '8.75',

'url': 'https://www.chocolate.co.uk/products/62-dark-hot-chocolate'},

{'name': '70% Dark Hot Chocolate Flakes',

'price': '9.95',

'url': 'https://www.chocolate.co.uk/products/70-dark-hot-chocolate-flakes'},

{'name': 'Almost Perfect',

'price': '\nSale priceFrom £1.50\n',

'url': 'https://www.chocolate.co.uk/products/almost-perfect'},

{'name': 'Assorted Chocolate Malt Balls',

'price': '9.00',

'url': 'https://www.chocolate.co.uk/products/assorted-chocolate-malt-balls'},

...

]

Saving Data to CSV

In Part 3 of this beginner series, we go through in much more detail how to save data to various file formats and databases.

However, as a simple example for part 1 of this series we're going to save the data we've scraped and stored in scraped_data into a CSV file once the scrape has been completed.

To do so we will create the following function:

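import csv

def save_to_csv(data_list, filename):
    # Nothing to write if the scrape returned no data (data_list[0] would raise IndexError)
    if not data_list:
        return
    # Use the keys of the first dictionary as the CSV header row
    keys = data_list[0].keys()
    # newline='' lets the csv module control line endings; utf-8 keeps the £ sign intact
    with open(filename + '.csv', 'w', newline='', encoding='utf-8') as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)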

And update our scraper to use this function with our scraped_data:

import csv
import requests
from bs4 import BeautifulSoup

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []

## Scraping Function
def start_scrape():

    ## Loop Through List of URLs
    for url in list_of_urls:

        ## Send Request
        response = requests.get(url)

        if response.status_code == 200:

            ## Parse Data
            soup = BeautifulSoup(response.content, 'html.parser')
            products = soup.select('product-item')
            for product in products:
                name = product.select('a.product-item-meta__title')[0].get_text()
                price = product.select('span.price')[0].get_text().replace('\nSale price£', '')
                # Named product_url so it doesn't shadow the outer loop's url variable
                product_url = product.select('div.product-item-meta a')[0]['href']

                ## Add To Data Output
                scraped_data.append({
                    'name': name,
                    'price': price,
                    'url': 'https://www.chocolate.co.uk' + product_url
                })


def save_to_csv(data_list, filename):
    # Nothing to write if the scrape returned no data
    if not data_list:
        return
    keys = data_list[0].keys()
    with open(filename + '.csv', 'w', newline='', encoding='utf-8') as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)


if __name__ == "__main__":
    start_scrape()
    save_to_csv(scraped_data, 'scraped_data')

Now when we run the scraper it will create a scraped_data.csv file with all the data once the scrape has been completed.

The output will look something like this:

name,price,url

100% Dark Hot Chocolate Flakes,9.95,https://www.chocolate.co.uk/products/100-dark-hot-chocolate-flakes

2.5kg Bulk 41% Milk Hot Chocolate Drops,45.00,https://www.chocolate.co.uk/products/2-5kg-bulk-of-our-41-milk-hot-chocolate-drops

2.5kg Bulk 61% Dark Hot Chocolate Drops,45.00,https://www.chocolate.co.uk/products/2-5kg-of-our-best-selling-61-dark-hot-chocolate-drops

41% Milk Hot Chocolate Drops,8.75,https://www.chocolate.co.uk/products/41-colombian-milk-hot-chocolate-drops

61% Dark Hot Chocolate Drops,8.75,https://www.chocolate.co.uk/products/62-dark-hot-chocolate

70% Dark Hot Chocolate Flakes,9.95,https://www.chocolate.co.uk/products/70-dark-hot-chocolate-flakes

Almost Perfect,"Sale priceFrom £1.50",https://www.chocolate.co.uk/products/almost-perfect

Assorted Chocolate Malt Balls,9.00,https://www.chocolate.co.uk/products/assorted-chocolate-malt-balls

Blonde Caramel,5.00,https://www.chocolate.co.uk/products/blonde-caramel-chocolate-bar

Blonde Chocolate Honeycomb,9.00,https://www.chocolate.co.uk/products/blonde-chocolate-honeycomb

Blonde Chocolate Honeycomb - Bag,8.50,https://www.chocolate.co.uk/products/blonde-chocolate-sea-salt-honeycomb

Blonde Chocolate Malt Balls,9.00,https://www.chocolate.co.uk/products/blonde-chocolate-malt-balls

Blonde Chocolate Truffles,19.95,https://www.chocolate.co.uk/products/blonde-chocolate-truffles

Blonde Hot Chocolate Flakes,9.95,https://www.chocolate.co.uk/products/blonde-hot-chocolate-flakes

Bulk 41% Milk Hot Chocolate Drops 750 grams,17.50,https://www.chocolate.co.uk/products/bulk-41-milk-hot-chocolate-drops-750-grams

Bulk 61% Dark Hot Chocolate Drops 750 grams,17.50,https://www.chocolate.co.uk/products/750-gram-bulk-61-dark-hot-chocolate-drops

Caramelised Milk,5.00,https://www.chocolate.co.uk/products/caramelised-milk-chocolate-bar

Chocolate Caramelised Pecan Nuts,8.95,https://www.chocolate.co.uk/products/chocolate-caramelised-pecan-nuts

Chocolate Celebration Hamper,55.00,https://www.chocolate.co.uk/products/celebration-hamper

Christmas Cracker,5.00,https://www.chocolate.co.uk/products/christmas-cracker-chocolate-bar

Christmas Truffle Selection,19.95,https://www.chocolate.co.uk/products/pre-order-christmas-truffle-selection

Cinnamon Toast,5.00,https://www.chocolate.co.uk/products/cinnamon-toast-chocolate-bar

Collection of 4 of our Best Selling Chocolate Malt Balls,30.00,https://www.chocolate.co.uk/products/collection-of-our-best-selling-chocolate-malt-balls

Colombia 61%,5.00,https://www.chocolate.co.uk/products/colombian-dark-chocolate-bar

As you might have noticed in the above CSV file, we seem to have a data quality issue with the price for the "Almost Perfect" product. We will deal with this in Part 2: Cleaning Dirty Data & Dealing With Edge Cases.
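The double quotes around the "Almost Perfect" price come from Python's csv module rather than from the website. By default, csv.DictWriter uses csv.QUOTE_MINIMAL, which wraps a field in quotes only when it contains the delimiter, the quote character, or a line break. The raw price for that product still contains the \n characters we saw in the printed output earlier, so it gets quoted. The sketch below writes to an in-memory io.StringIO buffer instead of a file so you can inspect the exact CSV text. The to_csv_text helper and the sample rows are illustrative.

```python
import csv
import io

# Sample rows shaped like scraped_data (values copied from the output above).
rows = [
    {"name": "100% Dark Hot Chocolate Flakes", "price": "9.95",
     "url": "https://www.chocolate.co.uk/products/100-dark-hot-chocolate-flakes"},
    {"name": "Almost Perfect", "price": "\nSale priceFrom £1.50\n",
     "url": "https://www.chocolate.co.uk/products/almost-perfect"},
]


def to_csv_text(data_list):
    """Hypothetical helper: return the CSV text that save_to_csv would write."""
    buffer = io.StringIO()
    dict_writer = csv.DictWriter(buffer, data_list[0].keys())
    dict_writer.writeheader()
    dict_writer.writerows(data_list)
    return buffer.getvalue()


if __name__ == "__main__":
    # repr() makes the embedded newlines and the quoting visible.
    print(repr(to_csv_text(rows)))
```

Reading the file back with csv.DictReader (opened with newline='') restores the original value, newlines included. The quoting therefore loses no data; cleaning the value itself is the job of Part 2.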

Navigating to the "Next Page"

So far the code is working great, but we're only getting the products from the first page of the site: the URL we defined in the list_of_urls list.

So the next logical step is to go to the next page, if there is one, and scrape the item data from it too. Here's how we do that.

To do so, we need to find the correct CSS selector for the next page button, and then get its href attribute, which contains the URL of the next page.

soup.select('a[rel="next"]')[0]['href']

## --> '/collections/all?page=2'

Now, we just need to update our scraper to extract this next page url and add it to our list_of_urls to scrape.

import csv
import requests
from bs4 import BeautifulSoup

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []

## Scraping Function
def start_scrape():

    ## Loop Through List of URLs
    for url in list_of_urls:

        ## Send Request
        response = requests.get(url)

        if response.status_code == 200:

            ## Parse Data
            soup = BeautifulSoup(response.content, 'html.parser')
            products = soup.select('product-item')
            for product in products:
                name = product.select('a.product-item-meta__title')[0].get_text()
                price = product.select('span.price')[0].get_text().replace('\nSale price£', '')
                # Named product_url so it doesn't shadow the outer loop's url variable
                product_url = product.select('div.product-item-meta a')[0]['href']

                ## Add To Data Output
                scraped_data.append({
                    'name': name,
                    'price': price,
                    'url': 'https://www.chocolate.co.uk' + product_url
                })

            ## Next Page
            next_page = soup.select('a[rel="next"]')
            if len(next_page) > 0:
                # The for loop above also visits URLs appended to the list during iteration
                list_of_urls.append('https://www.chocolate.co.uk' + next_page[0]['href'])


def save_to_csv(data_list, filename):
    # Nothing to write if the scrape returned no data
    if not data_list:
        return
    keys = data_list[0].keys()
    with open(filename + '.csv', 'w', newline='', encoding='utf-8') as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)


if __name__ == "__main__":
    start_scrape()
    save_to_csv(scraped_data, 'scraped_data')

Now when we run our scraper it will continue scraping the next page until it has completed all available pages.
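Appending to list_of_urls while the for loop is iterating over it works because a Python for loop over a list re-checks the list's current length on every step, so newly appended URLs are picked up. The sketch below reproduces that pattern without any HTTP requests. A hypothetical FAKE_NEXT_LINKS dictionary stands in for the site's "next" links, and urljoin builds the absolute URLs. A seen set (our own addition, not part of the tutorial's scraper) stops the loop if a site ever links back to a page that is already queued.

```python
from urllib.parse import urljoin

# Hypothetical stand-in for the website: maps each page URL to the href of its
# "next" link, or None on the last page. No real HTTP requests are made.
FAKE_NEXT_LINKS = {
    "https://www.chocolate.co.uk/collections/all": "/collections/all?page=2",
    "https://www.chocolate.co.uk/collections/all?page=2": "/collections/all?page=3",
    "https://www.chocolate.co.uk/collections/all?page=3": None,
}


def crawl(start_url, get_next_href):
    """Visit start_url and every page reachable by following "next" links."""
    list_of_urls = [start_url]
    seen = {start_url}
    visited = []
    # The for loop re-checks the list's length on each step, so URLs appended
    # inside the loop body are visited too.
    for url in list_of_urls:
        visited.append(url)  # in the real scraper: request and parse the page here
        next_href = get_next_href(url)
        if next_href:
            next_url = urljoin(url, next_href)
            if next_url not in seen:  # avoid revisiting pages (and infinite loops)
                seen.add(next_url)
                list_of_urls.append(next_url)
    return visited


if __name__ == "__main__":
    for page in crawl("https://www.chocolate.co.uk/collections/all", FAKE_NEXT_LINKS.get):
        print(page)
```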

Next Steps

We hope the tutorial above has given you enough of the basics to get up and running scraping a simple e-commerce site.

If you would like the code from this example, please check it out on GitHub here!

In Part 2 of the series we will work on Cleaning Dirty Data & Dealing With Edge Cases. Web data can be messy, unstructured, and full of edge cases, so we will make our scraper robust to these edge cases using data classes and data pipelines.
