Python Requests/BS4 Beginners Series Part 4: Retries & Concurrency

So far in this Python Requests/BeautifulSoup 6-Part Beginner Series, we learned how to build a basic web scraper in Part 1, clean up the scraped data and deal with edge cases in Part 2, and save the data to a file or database in Part 3.

In Part 4, we'll explore how to make our scraper more robust and scalable by handling failed requests and using concurrency.

- Understanding Scraper Performance Bottlenecks

- Retry Requests and Concurrency Importance

- Retry Logic Mechanism

- Concurrency Management



Python Requests/BeautifulSoup 6-Part Beginner Series

- Part 1: Basic Python Requests/BeautifulSoup Scraper - We'll go over the basics of scraping with Python, and build our first Python scraper.

- Part 2: Cleaning Dirty Data & Dealing With Edge Cases - Web data can be messy, unstructured, and have lots of edge cases. In this tutorial we'll make our scraper robust to these edge cases, using data classes and data cleaning pipelines.

- Part 3: Storing Data in AWS S3, MySQL & Postgres DBs - There are many different ways to store the data we scrape, from databases and CSV or JSON files to S3 buckets. We'll explore several of them and discuss their pros and cons, and the situations in which you would use each.

- Part 4: Managing Retries & Concurrency - Make our scraper more robust and scalable by handling failed requests and using concurrency.

- Part 5: Faking User-Agents & Browser Headers - Make our scraper production ready by using fake user agents & browser headers to make our scrapers look more like real users.

- Part 6: Using Proxies To Avoid Getting Blocked - Explore how to use proxies to bypass anti-bot systems by hiding your real IP address and location.

The code for this project is available on GitHub.

Understanding Scraper Performance Bottlenecks

In any web scraping project, network latency is the first bottleneck. Scraping requires sending numerous requests to a website and processing their responses. Even though each request and response travels over the network in a fraction of a second, these small delays accumulate and significantly slow scraping when many pages are involved (say, 5,000).

Although humans visiting just a few pages wouldn't notice such minor delays, scrapers sending hundreds or thousands of requests can face delays that stretch into hours. Furthermore, network delay is just one factor affecting scraping speed.
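To put rough numbers on this, here is a back-of-the-envelope sketch. The 0.5 seconds per page is an assumed figure for illustration, not a measurement, and the model ignores parsing time and any limits the server imposes; estimated_runtime_seconds is a hypothetical helper.

```python
import math


def estimated_runtime_seconds(pages, seconds_per_page, workers=1):
    """Idealised total time for a scrape where every page takes the same time.

    Assumes `workers` requests can be in flight at once with no overhead,
    so pages are fetched in "waves" of `workers` at a time.
    """
    waves = math.ceil(pages / workers)
    return waves * seconds_per_page


sequential = estimated_runtime_seconds(5000, 0.5)
with_ten_workers = estimated_runtime_seconds(5000, 0.5, workers=10)
print(f"sequential: {sequential / 60:.1f} min, 10 workers: {with_ten_workers / 60:.1f} min")
# sequential: 41.7 min, 10 workers: 4.2 min
```

Real speed-ups are smaller than this ideal, but the arithmetic shows why overlapping the waiting time matters once page counts grow.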

A scraper does not only send requests and receive responses; it also parses the returned HTML, identifies the relevant information, and potentially stores or processes it. These additional steps are CPU-bound rather than network-bound, and they add to the time each page takes, so they can also slow scraping down.

Retry Requests and Concurrency Importance

When web scraping, retrying requests and using concurrency are important for several reasons. Retrying requests helps you handle temporary network glitches, server errors, rate limits, and connection timeouts, increasing the chances of eventually getting a successful response.

Common status codes that indicate a retry is worth trying include:

- 429: Too many requests

- 500: Internal server error

- 502: Bad gateway

- 503: Service unavailable

- 504: Gateway timeout
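One simple way to encode this list is a set lookup. This is a minimal sketch: RETRYABLE_STATUS_CODES and should_retry are hypothetical names, and the RetryLogic class later in this article takes a slightly different approach (it stops on 200 or 404 and retries any other status).

```python
# Status codes that usually signal a temporary problem worth retrying.
RETRYABLE_STATUS_CODES = {429, 500, 502, 503, 504}


def should_retry(status_code):
    """Return True if a response with this status code is worth retrying."""
    return status_code in RETRYABLE_STATUS_CODES


for code in (200, 404, 429, 503):
    print(code, should_retry(code))
```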

Websites often implement rate limits to control traffic. Retrying with delays can help you stay within these limits and avoid getting blocked. While scraping, you might encounter pages with dynamically loaded content. This may require multiple attempts and retries at intervals to retrieve all the elements.
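A common way to "retry with delays" is exponential backoff: wait a little after the first failure and roughly double the wait after each further failure, optionally honouring a Retry-After header if the server sends one (typically with 429 or 503). The sketch below only computes the delays; backoff_delay and retry_after_seconds are hypothetical helpers, and in a real scraper you would pass the result to time.sleep() between attempts.

```python
import random


def backoff_delay(attempt, base=1.0, cap=30.0, jitter=True):
    """Seconds to wait before retry number `attempt` (0 for the first retry).

    Exponential backoff: base, 2*base, 4*base, ... capped at `cap`.
    With jitter, a random delay between 0 and that value is used instead,
    so many clients do not all retry at the same moment.
    """
    delay = min(cap, base * (2 ** attempt))
    if jitter:
        return random.uniform(0, delay)
    return delay


def retry_after_seconds(headers, default):
    """Use the server's Retry-After header if it holds a number of seconds."""
    value = headers.get("Retry-After")
    if value is not None and value.strip().isdigit():
        return float(value)
    # Retry-After may also be an HTTP date; this sketch falls back to the default.
    return default


print([backoff_delay(n, jitter=False) for n in range(6)])
# [1.0, 2.0, 4.0, 8.0, 16.0, 30.0]
```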

Now let’s talk about concurrency. When you make sequential requests to websites, you make one at a time, wait for the response, and then make the next one.

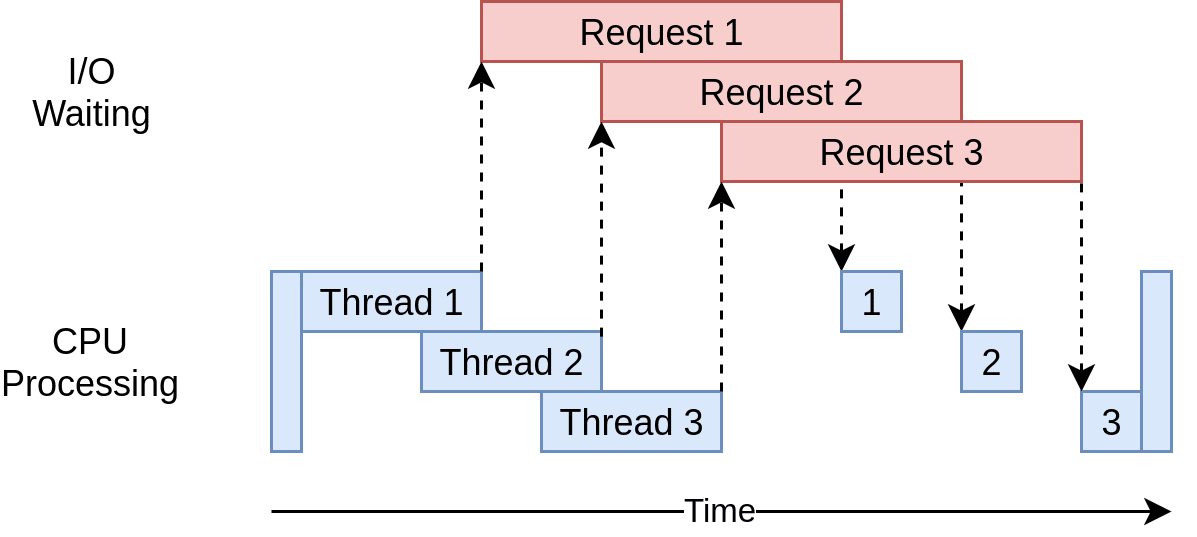

In the diagram above, the blue boxes show the time when your program is actively working, while the red boxes show when it is paused, waiting for an I/O operation to complete, such as downloading data from the website or reading from or writing to files.

Source: Real Python

However, concurrency allows your program to keep multiple requests to websites open at the same time, significantly improving performance and efficiency, particularly for I/O-bound tasks that spend most of their time waiting.

By concurrently sending these requests, your program overlaps the waiting times for responses, reducing the overall waiting time and getting the final results faster.

Source: Real Python
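You can see the effect without touching a real website. In this sketch, fake_download is a hypothetical stand-in that just sleeps for 0.2 seconds to imitate network I/O, and the example.com URLs are placeholders; no requests are sent.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url):
    """Stand-in for a network request: waits 0.2 s, like I/O would."""
    time.sleep(0.2)
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{n}" for n in range(5)]

# Sequential: each call waits for the previous one to finish.
start = time.perf_counter()
sequential_pages = [fake_download(url) for url in urls]
sequential_time = time.perf_counter() - start

# Concurrent: the five waits overlap on five worker threads.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as executor:
    concurrent_pages = list(executor.map(fake_download, urls))
concurrent_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f}s, concurrent: {concurrent_time:.2f}s")
```

The sequential run takes about 1 second and the concurrent one about 0.2 seconds; executor.map still returns the results in the same order as the input URLs.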

Retry Logic Mechanism

Let's examine how we'll implement retry logic within our scraper. Recall the start_scrape function from the previous parts of this series, where we iterated through a list of URLs, made requests to them, and checked for a 200 status code.

```python
for url in list_of_urls:
    response = requests.get(url)
    if response.status_code == 200:
        ...  # parse the page
```

To implement the retry mechanism, we’ll first call the make_request method defined in the RetryLogic class. Then, we'll check both the returned valid flag and the status code.

```python
valid, response = retry_request.make_request(url)
if valid and response.status_code == 200:
    pass  # parse the page here
```

Before we delve into the make_request method, which includes the core retry logic, let's briefly examine the `__init__` method to understand the variables it defines. Within `__init__`, two instance variables are initialized: retry_limit with a default value of 5, and anti_bot_check with a default value of False.

```python
def __init__(self, retry_limit=5, anti_bot_check=False):
    self.retry_limit = retry_limit
    self.anti_bot_check = anti_bot_check
```

The make_request method takes a URL, an HTTP method (defaulting to "GET"), and additional keyword arguments (kwargs) for configuring the request.

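It sets the default value of allow_redirects to True in the kwargs dictionary and then makes up to retry_limit attempts. Inside the loop, it tries to make the HTTP request using the requests.request method. If the response status code is 200 or 404, the request is treated as finished (retrying a 404 would not help), and if anti-bot checking is enabled (self.anti_bot_check is True) a 200 response is also checked for signs of an anti-bot page. Any other status code, such as 429 or 503, falls through to the next attempt.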

```python
def make_request(self, url, method="GET", **kwargs):
    kwargs.setdefault("allow_redirects", True)
    for _ in range(self.retry_limit):
        try:
            response = requests.request(method, url, **kwargs)
            if response.status_code in [200, 404]:
                if self.anti_bot_check and response.status_code == 200:
                    # Inspect the page body for signs of an anti-bot page.
                    if not self.passed_anti_bot_check(response):
                        return False, response
                return True, response
            # Any other status code falls through and the loop tries again.
        except Exception as e:
            print("Error", e)
    return False, None
```

If anti-bot checking is enabled and the status code is 200, it calls passed_anti_bot_check(response). If the check fails, it returns False and the response. Otherwise, it returns True and the response.

If an exception occurs during the request (handled by the except block), it prints an error message and moves on to the next attempt. If the loop finishes without a successful response, it returns False and None.
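To see this control flow without touching the network, here is a sketch that copies make_request's loop but takes the HTTP call as a parameter. FakeResponse, make_request_with and flaky_fetch are hypothetical names made up for this illustration, and the anti-bot branch is left out.

```python
from dataclasses import dataclass


@dataclass
class FakeResponse:
    """Minimal stand-in for requests.Response (only what the loop reads)."""
    status_code: int
    text: str = ""


def make_request_with(fetch, url, retry_limit=5):
    """Same loop as RetryLogic.make_request, with the HTTP call injected.

    `fetch` is any callable that takes a URL and returns a response-like
    object, or raises an exception.
    """
    for _ in range(retry_limit):
        try:
            response = fetch(url)
            if response.status_code in [200, 404]:
                return True, response
            # 429, 500, 503, ... fall through to the next attempt.
        except Exception as e:
            print("Error", e)
    return False, None


# A fake server that fails twice with 503 and then succeeds.
responses = iter([FakeResponse(503), FakeResponse(503), FakeResponse(200, "ok")])
calls = []


def flaky_fetch(url):
    calls.append(url)
    return next(responses)


valid, response = make_request_with(flaky_fetch, "https://example.com")
print(valid, response.status_code, len(calls))  # True 200 3
```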

The passed_anti_bot_check method accepts the response object returned by the request as its argument. It determines whether an anti-bot check has been passed by searching the response text for the specific string `<title>Robot or human?</title>`. If the string is found, it returns False; otherwise, it returns True.

```python
def passed_anti_bot_check(self, response):
    if "<title>Robot or human?</title>" in response.text:
        return False
    return True
```
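Because the check is plain substring matching, it is easy to extend to several markers. A minimal sketch, written as a standalone function and tested with types.SimpleNamespace objects standing in for real responses; the second marker string is a hypothetical example, not one any particular site is known to use.

```python
from types import SimpleNamespace

# The first marker comes from the article; the second is a hypothetical
# example of another string you might look for on a block page.
BLOCK_PAGE_MARKERS = (
    "<title>Robot or human?</title>",
    "Please verify you are a human",
)


def passed_anti_bot_check(response, markers=BLOCK_PAGE_MARKERS):
    """Return False if any block-page marker appears in the response body."""
    return not any(marker in response.text for marker in markers)


blocked = SimpleNamespace(text="<html><title>Robot or human?</title></html>")
normal = SimpleNamespace(text="<html><title>All Products</title></html>")
print(passed_anti_bot_check(blocked), passed_anti_bot_check(normal))  # False True
```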

Complete Code for the Retry Logic:

```python
import requests


class RetryLogic:
    def __init__(self, retry_limit=5, anti_bot_check=False):
        self.retry_limit = retry_limit
        self.anti_bot_check = anti_bot_check

    def make_request(self, url, method="GET", **kwargs):
        kwargs.setdefault("allow_redirects", True)
        for _ in range(self.retry_limit):
            try:
                response = requests.request(method, url, **kwargs)
                if response.status_code in [200, 404]:
                    if self.anti_bot_check and response.status_code == 200:
                        # Inspect the page body for signs of an anti-bot page.
                        if not self.passed_anti_bot_check(response):
                            return False, response
                    return True, response
                # Any other status code falls through and the loop tries again.
            except Exception as e:
                print("Error", e)
        return False, None

    def passed_anti_bot_check(self, response):
        if "<title>Robot or human?</title>" in response.text:
            return False
        return True
```

Concurrency Management

Concurrency refers to the ability to make progress on multiple tasks during overlapping periods of time. It enables efficient use of system resources and can often speed up program execution. Python provides several methods and modules to achieve this; one common technique is multi-threading.

As the name implies, multi-threading means running multiple threads of execution within a single program. Operating systems usually create and manage hundreds of threads, switching CPU time between them rapidly.

Switching between threads happens so quickly that it creates the illusion of multitasking. It's important to note that the operating system's scheduler, not your code, decides when to switch between threads.

Using the concurrent.futures module in Python, you can choose how many threads to create to suit your workload. ThreadPoolExecutor is a popular class within this module that lets you easily execute tasks concurrently using threads. ThreadPoolExecutor is ideal for I/O-bound tasks, which spend much of their time waiting for external resources, such as reading files or downloading data. In CPython, only one thread runs Python bytecode at a time because of the global interpreter lock (GIL), but the lock is released while a thread waits on network or file I/O, so other threads can keep working in the meantime.
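Besides map, ThreadPoolExecutor offers submit, which schedules a single call and returns a Future, and the module-level as_completed function, which yields futures as they finish. A small sketch, where fetch_length is a hypothetical stand-in that sleeps instead of making a real request:

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_length(url):
    """Stand-in for an I/O-bound task; sleeps instead of doing a real request."""
    time.sleep(0.1)
    return url, len(url)


urls = [f"https://example.com/{name}" for name in ("a", "bb", "ccc")]

results = {}
with ThreadPoolExecutor(max_workers=3) as executor:
    # submit() schedules one call and returns a Future right away.
    futures = [executor.submit(fetch_length, url) for url in urls]
    # as_completed() yields each Future as soon as it finishes, in any order.
    for future in as_completed(futures):
        url, length = future.result()  # re-raises any exception from the task
        results[url] = length

print(results)
```

Calling future.result() is also how you find out that a task failed, since an exception raised inside a worker thread is stored on its Future rather than printed.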

Here’s a simple function that adds concurrency to your scraper:

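```python
def start_concurrent_scrape(num_threads=5):
    # Keep going while scrape_page keeps adding new URLs (e.g. next pages).
    while len(list_of_urls) > 0:
        with concurrent.futures.ThreadPoolExecutor(max_workers=num_threads) as executor:
            # Pass a copy: scrape_page removes and appends URLs in the shared
            # list, and the list must not change while map() iterates over it.
            executor.map(scrape_page, list(list_of_urls))
```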

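Let’s understand the above code. The statement with concurrent.futures.ThreadPoolExecutor() creates a ThreadPoolExecutor object, which manages a pool of worker threads. The max_workers parameter of the executor is set to the provided num_threads value. The map method of the ThreadPoolExecutor is then used to apply the scrape_page function concurrently to each URL currently in list_of_urls. When the with block ends, it waits for all submitted calls to finish. Because scrape_page removes each URL it processes and appends any next-page URL it finds, the while loop keeps starting new rounds until no URLs are left.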
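With the while loop, a URL discovered during one round waits until every call in that round has finished. An alternative is to submit each new URL as soon as it is found, using concurrent.futures.wait with FIRST_COMPLETED. This is a sketch under stated assumptions: FAKE_SITE is a hypothetical in-memory site used instead of real HTTP requests, and scrape and crawl are illustrative names, not part of the article's scraper.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

# Hypothetical site: each page maps to the links found on it.
FAKE_SITE = {
    "/collections/all": ["/collections/all?page=2", "/products/a"],
    "/collections/all?page=2": ["/collections/all?page=3", "/products/b"],
    "/collections/all?page=3": ["/products/c"],
    "/products/a": [],
    "/products/b": [],
    "/products/c": [],
}


def scrape(url):
    """Stand-in for scrape_page: returns the links discovered on `url`."""
    return FAKE_SITE.get(url, [])


def crawl(start_url, num_threads=5):
    seen = {start_url}  # only touched by the main thread, so no lock needed
    with ThreadPoolExecutor(max_workers=num_threads) as executor:
        pending = {executor.submit(scrape, start_url)}
        while pending:
            # Wait until at least one page finishes, then queue its new links.
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                for link in future.result():
                    if link not in seen:
                        seen.add(link)
                        pending.add(executor.submit(scrape, link))
    return seen


print(sorted(crawl("/collections/all")))
```

Keeping the URL bookkeeping on the main thread also means worker threads never modify a shared list.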

Complete Code:

Run the code below and see how your scraper becomes more robust and scalable by handling failed requests and using concurrency.

```python
import os
import time
import csv
import requests
from bs4 import BeautifulSoup
from dataclasses import dataclass, field, fields, InitVar, asdict
import concurrent.futures


@dataclass
class Product:
    name: str = ""
    price_string: InitVar[str] = ""
    price_gb: float = field(init=False)
    price_usd: float = field(init=False)
    url: str = ""

    def __post_init__(self, price_string):
        self.name = self.clean_name()
        self.price_gb = self.clean_price(price_string)
        self.price_usd = self.convert_price_to_usd()
        self.url = self.create_absolute_url()

    def clean_name(self):
        if self.name == "":
            return "missing"
        return self.name.strip()

    def clean_price(self, price_string):
        price_string = price_string.strip()
        price_string = price_string.replace("Sale price£", "")
        price_string = price_string.replace("Sale priceFrom £", "")
        if price_string == "":
            return 0.0
        return float(price_string)

    def convert_price_to_usd(self):
        return self.price_gb * 1.21

    def create_absolute_url(self):
        if self.url == "":
            return "missing"
        return "https://www.chocolate.co.uk" + self.url


class ProductDataPipeline:
    def __init__(self, csv_filename="", storage_queue_limit=5):
        self.names_seen = []
        self.storage_queue = []
        self.storage_queue_limit = storage_queue_limit
        self.csv_filename = csv_filename
        self.csv_file_open = False

    def save_to_csv(self):
        self.csv_file_open = True
        products_to_save = []
        products_to_save.extend(self.storage_queue)
        self.storage_queue.clear()
        if not products_to_save:
            # Reset the flag so later batches can still be saved.
            self.csv_file_open = False
            return
        keys = [field.name for field in fields(products_to_save[0])]
        file_exists = (
            os.path.isfile(self.csv_filename) and os.path.getsize(self.csv_filename) > 0
        )
        with open(
            self.csv_filename, mode="a", newline="", encoding="utf-8"
        ) as output_file:
            writer = csv.DictWriter(output_file, fieldnames=keys)
            if not file_exists:
                writer.writeheader()
            for product in products_to_save:
                writer.writerow(asdict(product))
        self.csv_file_open = False

    def clean_raw_product(self, scraped_data):
        return Product(
            name=scraped_data.get("name", ""),
            price_string=scraped_data.get("price", ""),
            url=scraped_data.get("url", ""),
        )

    def is_duplicate(self, product_data):
        if product_data.name in self.names_seen:
            print(f"Duplicate item found: {product_data.name}. Item dropped.")
            return True
        self.names_seen.append(product_data.name)
        return False

    def add_product(self, scraped_data):
        product = self.clean_raw_product(scraped_data)
        if not self.is_duplicate(product):
            self.storage_queue.append(product)
            if (
                len(self.storage_queue) >= self.storage_queue_limit
                and not self.csv_file_open
            ):
                self.save_to_csv()

    def close_pipeline(self):
        if self.csv_file_open:
            time.sleep(3)
        if len(self.storage_queue) > 0:
            self.save_to_csv()


class RetryLogic:
    def __init__(self, retry_limit=5, anti_bot_check=False):
        self.retry_limit = retry_limit
        self.anti_bot_check = anti_bot_check

    def make_request(self, url, method="GET", **kwargs):
        kwargs.setdefault("allow_redirects", True)
        for _ in range(self.retry_limit):
            try:
                response = requests.request(method, url, **kwargs)
                if response.status_code in [200, 404]:
                    if self.anti_bot_check and response.status_code == 200:
                        if not self.passed_anti_bot_check(response):
                            return False, response
                    return True, response
            except Exception as e:
                print("Error", e)
        return False, None

    def passed_anti_bot_check(self, response):
        # Example Anti-Bot Check
        if "<title>Robot or human?</title>" in response.text:
            return False
        # Passed All Tests
        return True


def scrape_page(url):
    list_of_urls.remove(url)
    valid, response = retry_request.make_request(url)
    if valid and response.status_code == 200:
        # Parse Data
        soup = BeautifulSoup(response.content, "html.parser")
        products = soup.select("product-item")
        for product in products:
            name = product.select("a.product-item-meta__title")[0].get_text()
            price = product.select("span.price")[0].get_text()
            url = product.select("div.product-item-meta a")[0]["href"]
            # Add To Data Pipeline
            data_pipeline.add_product({"name": name, "price": price, "url": url})

        # Next Page
        next_page = soup.select('a[rel="next"]')
        if len(next_page) > 0:
            list_of_urls.append("https://www.chocolate.co.uk" + next_page[0]["href"])


# Scraping Function
def start_concurrent_scrape(num_threads=5):
    while len(list_of_urls) > 0:
        with concurrent.futures.ThreadPoolExecutor(max_workers=num_threads) as executor:
            # Iterate over a copy, because scrape_page modifies list_of_urls.
            executor.map(scrape_page, list(list_of_urls))


list_of_urls = [
    "https://www.chocolate.co.uk/collections/all",
]

if __name__ == "__main__":
    data_pipeline = ProductDataPipeline(csv_filename="product_data.csv")
    retry_request = RetryLogic(retry_limit=3, anti_bot_check=False)
    start_concurrent_scrape(num_threads=10)
    data_pipeline.close_pipeline()
```
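All worker threads call data_pipeline.add_product, so names_seen and storage_queue are shared between threads, and csv_file_open is a plain boolean rather than a lock: two threads can check it at the same moment. A minimal sketch of the usual remedy, a threading.Lock around the check-and-update; ThreadSafeDeduper is a hypothetical class, and the same idea would apply to add_product and save_to_csv.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ThreadSafeDeduper:
    """Hypothetical helper: records names and flags duplicates across threads."""

    def __init__(self):
        self._lock = threading.Lock()
        self._names_seen = set()
        self.kept = []

    def add(self, name):
        # The check and the update must happen together under the lock;
        # otherwise two threads can both see a name as new and both keep it.
        with self._lock:
            if name in self._names_seen:
                return False
            self._names_seen.add(name)
            self.kept.append(name)
            return True


deduper = ThreadSafeDeduper()
names = ["Dark 70%", "Milk", "Dark 70%", "White", "Milk"] * 20
with ThreadPoolExecutor(max_workers=8) as executor:
    list(executor.map(deduper.add, names))

print(sorted(deduper.kept))  # ['Dark 70%', 'Milk', 'White']
```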

Next Steps

We hope you now have a good understanding of why you need to retry requests and use concurrency when web scraping, including how the retry logic works, how to check for anti-bot pages, and how concurrency is managed.

If you would like the code from this example, please check it out on GitHub.

The next tutorial (Part 5) covers how to make our scraper production-ready by using fake user agents and browser headers to avoid getting blocked.