How To Scrape Walmart.com [2023]

In this guide for our "How To Scrape X" series, we're going to look at how to scrape Walmart.com.

After Amazon, Walmart is the second most popular e-commerce website for web scrapers, with billions of product pages scraped every month.

So in this guide we will go through:

- How To Build A List Of Walmart Product URLs

- Scraping Walmart Product Data

- Walmart Anti-Bot Protection

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

How To Build A List Of Walmart Product URLs

The first part of scraping Walmart is designing a web crawler that will generate a list of product URLs for our scrapers to scrape.

With Walmart.com, the easiest way to do this is to use the Walmart Search page, which returns up to 40 products per page.

For example, here is how we would get search results for iPads.

'https://www.walmart.com/search?q=ipad&sort=best_seller&page=1&affinityOverride=default'

This URL contains a number of parameters that we will explain:

- q stands for the search query. In our case, q=ipad. Note: if you want to search for a keyword that contains spaces or special characters, remember that you need to URL-encode this value.

- sort stands for the sorting order of the query. In our case, we used sort=best_seller; other options are best_match, price_low and price_high.

- page stands for the page number. In our case, we've requested page=1.

Using these parameters we can customise our requests to the search endpoint to start building a list of URLs to scrape.
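As a minimal sketch, the helper below builds the search URLs for one keyword across a range of pages, using the same parameters as the example URL above. The function name `build_search_urls` is hypothetical. It relies on `urllib.parse.urlencode`, which also takes care of encoding spaces and special characters in the query (a space becomes `+`).

```python
from urllib.parse import urlencode


# Hypothetical helper: build Walmart search URLs for one keyword over several pages
def build_search_urls(keyword, sort='best_seller', max_pages=25):
    urls = []
    for page in range(1, max_pages + 1):
        payload = {'q': keyword, 'sort': sort, 'page': page, 'affinityOverride': 'default'}
        # urlencode percent-encodes each value, so "ipad mini" becomes "ipad+mini"
        urls.append('https://www.walmart.com/search?' + urlencode(payload))
    return urls


urls = build_search_urls('ipad mini', sort='price_low', max_pages=2)
print(urls[0])
# https://www.walmart.com/search?q=ipad+mini&sort=price_low&page=1&affinityOverride=default
```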

Walmart only returns a maximum of 25 pages, so if you would like to get more results for your particular query, you can:

- Be More Specific: Say I wanted to find iPhones on Walmart but wanted more than 25 pages of results. Instead of setting the query parameter to "iPhone", you could use "iPhone 14", "iPhone 13", etc.

- Change Sorting: You could make requests with different sorting parameters (sort=price_low and sort=price_high) and then filter the combined results for unique values.
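A minimal sketch of that merge step, assuming you have already collected one list of product URLs per query variant or sort order (the lists below are placeholder data, not real results). The hypothetical `merge_unique` helper uses `dict.fromkeys`, which keeps the first occurrence of each URL and preserves the original order.

```python
# Hypothetical helper: merge URL lists from several queries/sort orders, dropping duplicates
def merge_unique(*url_lists):
    merged = []
    for url_list in url_lists:
        merged.extend(url_list)
    # Dict keys are unique and keep insertion order (Python 3.7+)
    return list(dict.fromkeys(merged))


# Placeholder URLs standing in for results from two different sort orders
price_low_urls = ['https://www.walmart.com/ip/a/1', 'https://www.walmart.com/ip/b/2']
price_high_urls = ['https://www.walmart.com/ip/c/3', 'https://www.walmart.com/ip/a/1']
print(merge_unique(price_low_urls, price_high_urls))
# ['https://www.walmart.com/ip/a/1', 'https://www.walmart.com/ip/b/2', 'https://www.walmart.com/ip/c/3']
```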

Extracting the list of products returned in the response is actually pretty easy with these Walmart search requests, as the data is available as hidden JSON data on the page.

So we just need to extract the JSON blob from the <script id="__NEXT_DATA__" type="application/json"> tag and parse it as JSON.

```html
<script id="__NEXT_DATA__" type="application/json" nonce="">
{
  ...DATA...
}
</script>
```
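The scrapers in this guide locate this tag with BeautifulSoup. If you would rather avoid that dependency, here is a minimal sketch using Python's built-in `html.parser` module instead (a swapped-in technique, not what the scrapers in this guide use). `NextDataParser` and `extract_next_data` are hypothetical names.

```python
import json
from html.parser import HTMLParser


class NextDataParser(HTMLParser):
    """Collects the raw text inside <script id="__NEXT_DATA__">."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "script" and dict(attrs).get("id") == "__NEXT_DATA__":
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        # Script contents may arrive in several chunks, so collect them all
        if self._inside:
            self.chunks.append(data)


def extract_next_data(html):
    """Return the parsed __NEXT_DATA__ JSON, or None if the tag is missing."""
    parser = NextDataParser()
    parser.feed(html)
    parser.close()
    if not parser.chunks:
        return None
    return json.loads("".join(parser.chunks))
```

Calling `extract_next_data(response.text)` returns the same dictionary as `json.loads(script_tag.get_text())` in the scrapers, or `None` when the tag is absent.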

This JSON blob is pretty big, but the product list we are looking for is at:

```python
product_list = json_blob["props"]["pageProps"]["initialData"]["searchResult"]["itemStacks"][0]["items"]
```
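Because this path is long and Walmart can change its page structure, a direct chain of lookups raises a `KeyError` or `IndexError` as soon as one level is missing. A small, hypothetical `get_path` helper returns a default instead; the sample blob below is made-up data shaped like the path above.

```python
# Hypothetical helper: follow a path of dict keys / list indexes, returning a default if any step is missing
def get_path(data, path, default=None):
    current = data
    for step in path:
        try:
            current = current[step]
        except (KeyError, IndexError, TypeError):
            return default
    return current


SEARCH_ITEMS_PATH = ["props", "pageProps", "initialData", "searchResult", "itemStacks", 0, "items"]

sample_blob = {"props": {"pageProps": {"initialData": {"searchResult": {"itemStacks": [{"items": [{"name": "iPad"}]}]}}}}}
print(get_path(sample_blob, SEARCH_ITEMS_PATH, default=[]))  # [{'name': 'iPad'}]
print(get_path({}, SEARCH_ITEMS_PATH, default=[]))           # []
```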

Here is an example Python Requests/BeautifulSoup scraper that retrieves the products for an input keyword from all 25 pages.

```python
import json

import requests
from bs4 import BeautifulSoup
from urllib.parse import urlencode


def create_walmart_product_url(product):
    # Build an absolute product URL, dropping any query string from canonicalUrl
    return 'https://www.walmart.com' + product.get('canonicalUrl', '').split('?')[0]


headers = {"User-Agent": "Mozilla/5.0 (iPad; CPU OS 12_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Mobile/15E148"}

product_url_list = []

## Walmart Search Keyword
keyword = 'ipad'

## Loop Through Walmart Pages Until No More Products (Walmart returns at most 25 pages)
for page in range(1, 26):
    try:
        payload = {'q': keyword, 'sort': 'best_seller', 'page': page, 'affinityOverride': 'default'}
        walmart_search_url = 'https://www.walmart.com/search?' + urlencode(payload)
        response = requests.get(walmart_search_url, headers=headers, timeout=30)

        if response.status_code == 200:
            html_response = response.text
            soup = BeautifulSoup(html_response, "html.parser")
            # The search results are embedded as JSON in the __NEXT_DATA__ script tag
            script_tag = soup.find("script", {"id": "__NEXT_DATA__"})

            if script_tag is not None:
                json_blob = json.loads(script_tag.get_text())
                product_list = json_blob["props"]["pageProps"]["initialData"]["searchResult"]["itemStacks"][0]["items"]
                # Defensive: skip any item that has no canonicalUrl
                product_urls = [create_walmart_product_url(product) for product in product_list if product.get('canonicalUrl')]
                product_url_list.extend(product_urls)

                # An empty page means we have run out of results
                if len(product_urls) == 0:
                    break

    except Exception as e:
        print('Error', e)

print(product_url_list)
```

The output will look like this:

```
[
  "https://www.walmart.com/ip/2021-Apple-10-2-inch-iPad-Wi-Fi-64GB-Space-Gray-9th-Generation/483978365",
  "https://www.walmart.com/ip/2021-Apple-iPad-Mini-Wi-Fi-64GB-Purple-6th-Generation/996045822",
  "https://www.walmart.com/ip/2022-Apple-10-9-inch-iPad-Air-Wi-Fi-64GB-Purple-5th-Generation/860872590",
  "https://www.walmart.com/ip/2021-Apple-11-inch-iPad-Pro-Wi-Fi-128GB-Space-Gray-3rd-Generation/354993710",
  "https://www.walmart.com/ip/2021-Apple-12-9-inch-iPad-Pro-Wi-Fi-128GB-Space-Gray-5th-Generation/774697337",
  "https://www.walmart.com/ip/2020-Apple-10-9-inch-iPad-Air-Wi-Fi-64GB-Sky-Blue-4th-Generation/462727496",
  "https://www.walmart.com/ip/2021-Apple-iPad-Mini-Wi-Fi-Cellular-64GB-Starlight-6th-Generation/406091219",
  "https://www.walmart.com/ip/2020-Apple-10-9-inch-iPad-Air-Wi-Fi-Cellular-64GB-Silver-4th-Generation/470306039",
  "https://www.walmart.com/ip/2022-Apple-10-9-inch-iPad-Air-Wi-Fi-Cellular-64GB-Blue-5th-Generation/234669711",
  "https://www.walmart.com/ip/2021-Apple-10-2-inch-iPad-Wi-Fi-Cellular-64GB-Space-Gray-9th-Generation/414515010",
  "https://www.walmart.com/ip/2021-Apple-11-inch-iPad-Pro-Wi-Fi-Cellular-128GB-Space-Gray-3rd-Generation/851470965",
  "https://www.walmart.com/ip/2021-Apple-12-9-inch-iPad-Pro-Wi-Fi-Cellular-256GB-Space-Gray-5th-Generation/169993514"
]
```

Now that we have a way to get lists of Walmart product URLs, we can extract the data from each individual Walmart product page.

The Walmart search request returns a lot more information than just the product URLs. You can also get the product name, price, image URL, rating, number of reviews, etc., from the JSON blob. So, depending on what data you need, you may not need to request each product page at all, as you can get the data from the search results.

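```python
def extract_product_data(product):
    # Pull the fields we need straight from a search result item;
    # rating may be missing, so fall back to an empty dict
    rating = product.get('rating') or {}
    return {
        'url': create_walmart_product_url(product),
        'name': product.get('name', ''),
        'description': product.get('description', ''),
        'image_url': product.get('image', ''),
        'average_rating': rating.get('averageRating'),
        'number_reviews': rating.get('numberOfReviews'),
    }


# product_list is the list of items parsed from a search page in the scraper above
product_data_list = [extract_product_data(product) for product in product_list]
```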

Scraping Walmart Product Data

Once we have a list of Walmart product URLs then we can scrape all the product data from each individual Walmart product page.

Again, as Walmart returns the data in the <script id="__NEXT_DATA__" type="application/json"> tag in the HTML response, it is pretty easy to extract.

```html
<script id="__NEXT_DATA__" type="application/json" nonce="">
{
  ...DATA...
}
</script>
```

We don't need to build CSS/XPath selectors for each field; we just take the data we want from the JSON response. An added bonus is that the data is very clean, so there is little to no data cleaning to do.

The product data can be found here:

```python
product_data = json_blob["props"]["pageProps"]["initialData"]["data"]["product"]
```

And the product reviews can be found here:

```python
product_reviews = json_blob["props"]["pageProps"]["initialData"]["data"]["reviews"]
```

The JSON blob with the product data is pretty big so we will configure our scraper to only extract the data we want.
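One way to keep that selection in a single place is a field map from output column to key path. This is a minimal sketch: `PRODUCT_FIELDS` and `pick_fields` are hypothetical names, the key paths mirror the ones used in the product scraper in this guide, and the sample product is made-up data.

```python
# Hypothetical field map: output column name -> path of keys inside the product JSON
PRODUCT_FIELDS = {
    'id': ['id'],
    'name': ['name'],
    'brand': ['brand'],
    'thumbnailUrl': ['imageInfo', 'thumbnailUrl'],
    'price': ['priceInfo', 'currentPrice', 'price'],
    'currencyUnit': ['priceInfo', 'currentPrice', 'currencyUnit'],
}


def pick_fields(raw, field_map):
    """Return only the mapped fields; a missing key anywhere on a path yields None."""
    result = {}
    for column, path in field_map.items():
        value = raw
        for key in path:
            value = value.get(key) if isinstance(value, dict) else None
        result[column] = value
    return result


# Made-up product data (no imageInfo, so thumbnailUrl comes back as None)
sample_product = {'id': 'ABC123', 'name': 'Example Tablet', 'brand': 'Example',
                  'priceInfo': {'currentPrice': {'price': 199, 'currencyUnit': 'USD'}}}
print(pick_fields(sample_product, PRODUCT_FIELDS))
```

Adding a new column then only means adding one line to the map rather than another nested lookup.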

Here is an example Python Requests/BeautifulSoup scraper that will request the page of each Walmart URL and parse the data we want.

```python
import json

import requests
from bs4 import BeautifulSoup

headers = {"User-Agent": "Mozilla/5.0 (iPad; CPU OS 12_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Mobile/15E148"}

product_data_list = []

## Loop Through Walmart Product URL List
## (product_url_list comes from the search scraper above)
for url in product_url_list:
    try:
        response = requests.get(url, headers=headers, timeout=30)

        if response.status_code == 200:
            html_response = response.text
            soup = BeautifulSoup(html_response, "html.parser")
            script_tag = soup.find("script", {"id": "__NEXT_DATA__"})

            if script_tag is not None:
                json_blob = json.loads(script_tag.get_text())
                raw_product_data = json_blob["props"]["pageProps"]["initialData"]["data"]["product"]
                # Nested objects may be missing or null, so fall back to empty dicts
                image_info = raw_product_data.get('imageInfo') or {}
                current_price = (raw_product_data.get('priceInfo') or {}).get('currentPrice') or {}

                product_data_list.append({
                    'id': raw_product_data.get('id'),
                    'type': raw_product_data.get('type'),
                    'name': raw_product_data.get('name'),
                    'brand': raw_product_data.get('brand'),
                    'averageRating': raw_product_data.get('averageRating'),
                    'manufacturerName': raw_product_data.get('manufacturerName'),
                    'shortDescription': raw_product_data.get('shortDescription'),
                    'thumbnailUrl': image_info.get('thumbnailUrl'),
                    'price': current_price.get('price'),
                    'currencyUnit': current_price.get('currencyUnit'),
                })

    except Exception as e:
        print('Error', e)

print(product_data_list)
```

Here is an example output:

```
[
  {
    "id": "4SR8VU90LQ0P",
    "type": "Tablet Computers",
    "name": "2021 Apple 10.2-inch iPad Wi-Fi 64GB - Space Gray (9th Generation)",
    "brand": "Apple",
    "averageRating": 4.7,
    "manufacturerName": "Apple",
    "shortDescription": "Powerful. Easy to use. Versatile. The new iPad has a beautiful 10.2-inch Retina display, powerful A13 Bionic chip, an Ultra Wide front camera with Center Stage, and works with Apple Pencil and the Smart Keyboard. iPad lets you do more, more easily. All for an incredible value.<p></p>",
    "thumbnailUrl": "https://i5.walmartimages.com/asr/86cda84e-4f55-4ffa-954e-9ca5ae27b723.8a72a9690e1951f535eed412cc9e5fc3.jpeg",
    "price": 299,
    "currencyUnit": "USD"
  },
]
```

You can expand this scraper to extract much more information from the JSON blob.
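To find which extra fields are available, it can help to list every leaf path in the blob. Below is a minimal sketch with a hypothetical `leaf_paths` generator; in practice you would pass it `raw_product_data` from the scraper, while the sample here is made-up data.

```python
# Hypothetical helper: yield (path, value) for every leaf in a nested JSON structure
def leaf_paths(data, prefix=()):
    if isinstance(data, dict):
        for key, value in data.items():
            yield from leaf_paths(value, prefix + (key,))
    elif isinstance(data, list):
        for index, value in enumerate(data):
            yield from leaf_paths(value, prefix + (index,))
    else:
        yield prefix, data


sample_product = {'name': 'Example Tablet', 'priceInfo': {'currentPrice': {'price': 199}}, 'badges': ['New']}
for path, value in leaf_paths(sample_product):
    # Print dotted paths such as "priceInfo.currentPrice.price = 199"
    print('.'.join(str(step) for step in path), '=', value)
```

Each printed path can then be turned into a new lookup in the scraper.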

Walmart Anti-Bot Protection

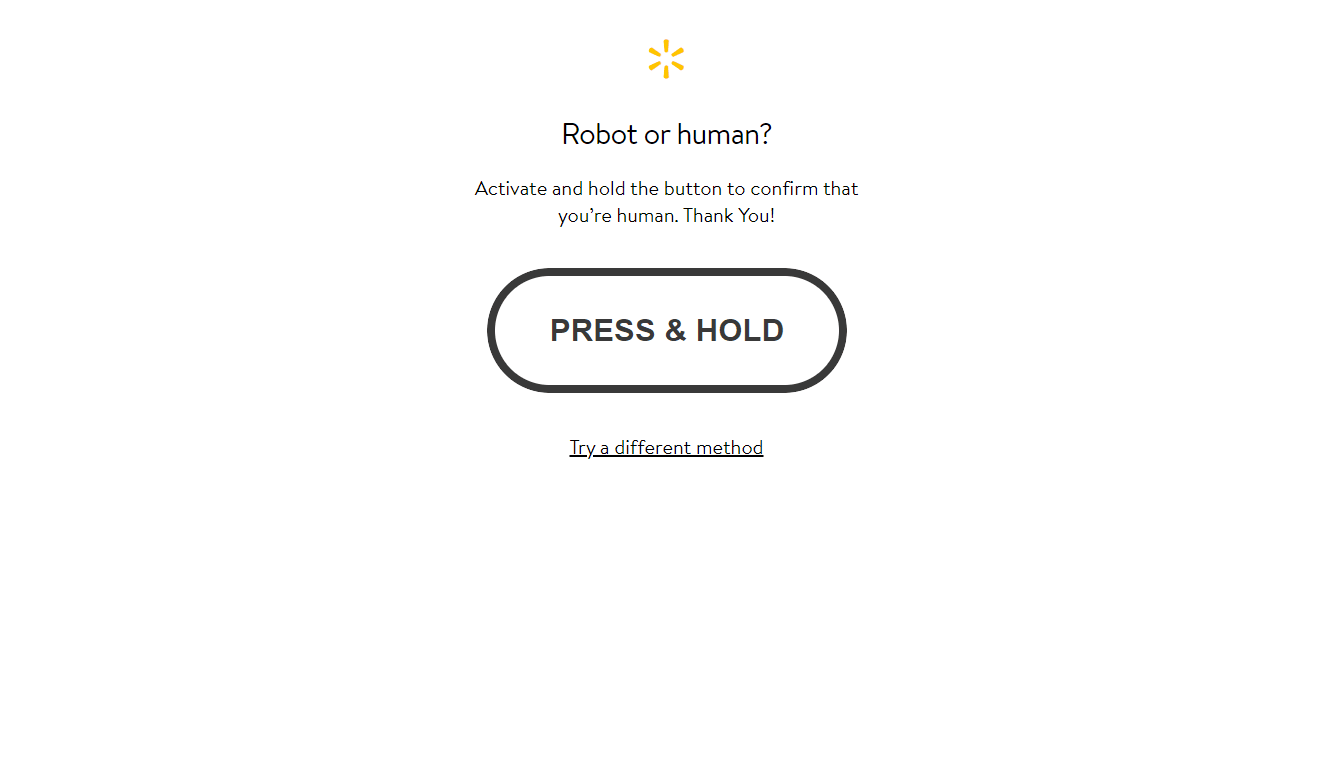

As you may have already seen, if you run this code a couple of times, Walmart may start redirecting you to its blocked page.

This is because Walmart uses anti-bot protection to prevent developers from scraping its site (or at least to make it harder).
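A quick way to notice this in your own scraper is to check where each request ended up. Requests follows redirects by default and `response.url` holds the final URL, so a hypothetical `looks_blocked` heuristic can check for a blocked path and for a missing `__NEXT_DATA__` tag. The "/blocked" path is an assumption about how Walmart's block page URL looks; verify it against the redirects you actually see.

```python
from urllib.parse import urlparse


# Hypothetical heuristic, not an official signal:
# 1) the final URL path starts with "/blocked" (assumed block page location), or
# 2) the page has no __NEXT_DATA__ tag, so there is no product data to parse
def looks_blocked(final_url, html):
    path = urlparse(final_url).path
    if path.startswith('/blocked'):
        return True
    return 'id="__NEXT_DATA__"' not in html


# Usage with Requests (not executed here):
# if looks_blocked(response.url, response.text): ...
```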

Its anti-bot system isn't extremely complex, but it is still sophisticated enough that you will need to use rotating proxies and browser profiles, and possibly fortify your headless browser, if you want to scrape it reliably at scale.
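As an illustration of the simplest form of rotation, here is a round-robin sketch using `itertools.cycle`, assuming you have your own pool of proxies and user-agent strings (the values below are placeholders, and `next_request_kwargs` is a hypothetical helper). It only rotates the User-Agent header and the proxy; full browser profiles and headless-browser fortification go further than this.

```python
import itertools

# Placeholder values: substitute your own user-agent strings and proxy endpoints
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.5 Safari/605.1.15',
]
PROXIES = [
    'http://proxy1.example.com:8000',
    'http://proxy2.example.com:8000',
    'http://proxy3.example.com:8000',
]

# Independent round-robin iterators over each pool
user_agent_cycle = itertools.cycle(USER_AGENTS)
proxy_cycle = itertools.cycle(PROXIES)


def next_request_kwargs():
    """Build keyword arguments for requests.get() using the next user agent and proxy."""
    proxy = next(proxy_cycle)
    return {
        'headers': {'User-Agent': next(user_agent_cycle)},
        # Route both http and https traffic through the chosen proxy
        'proxies': {'http': proxy, 'https': proxy},
        'timeout': 30,
    }

# Usage (not executed here): response = requests.get(url, **next_request_kwargs())
```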

We have written about how to do this here:

- Guide to Web Scraping Without Getting Blocked

- Web Scraping Guide: Headers & User-Agents Optimization Checklist

However, if you don't want to implement all this anti-bot bypassing logic yourself, the easier option is to use a smart proxy solution like ScrapeOps Proxy Aggregator.

The ScrapeOps Proxy Aggregator is a smart proxy that handles everything for you:

- Proxy rotation & selection

- Rotating user-agents & browser headers

- Ban detection & CAPTCHA bypassing

- Country IP geotargeting

- JavaScript rendering with headless browsers

To use the ScrapeOps Proxy Aggregator, we just need to send the URL we want to scrape to the Proxy API instead of making the request directly ourselves. We can do this with a simple wrapper function:

```python
import requests
from urllib.parse import urlencode

SCRAPEOPS_API_KEY = 'YOUR_API_KEY'


def scrapeops_url(url):
    # Wrap the target URL in a request to the ScrapeOps Proxy API, using US IPs
    payload = {'api_key': SCRAPEOPS_API_KEY, 'url': url, 'country': 'us'}
    proxy_url = 'https://proxy.scrapeops.io/v1/?' + urlencode(payload)
    return proxy_url


walmart_url = 'https://www.walmart.com/search?q=ipad&sort=best_seller&page=1&affinityOverride=default'

## Send URL To ScrapeOps Instead of Walmart
response = requests.get(scrapeops_url(walmart_url))
```

You can get an API key with 1,000 free API credits by signing up here.
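Encoding matters in this wrapper: the Walmart URL carries its own `&`-separated parameters, so it must be percent-encoded before it is placed inside the proxy URL, otherwise `sort`, `page` and `affinityOverride` would be read as parameters of the proxy request instead. The check below repeats the `scrapeops_url` wrapper (with a placeholder key) so it runs on its own, then decodes the result with `urllib.parse.parse_qs` to confirm the Walmart URL survives intact.

```python
from urllib.parse import parse_qs, urlencode, urlparse

SCRAPEOPS_API_KEY = 'YOUR_API_KEY'  # placeholder


def scrapeops_url(url):
    payload = {'api_key': SCRAPEOPS_API_KEY, 'url': url, 'country': 'us'}
    return 'https://proxy.scrapeops.io/v1/?' + urlencode(payload)


walmart_url = 'https://www.walmart.com/search?q=ipad&sort=best_seller&page=1&affinityOverride=default'
proxy_url = scrapeops_url(walmart_url)

# Decode the proxy URL's query string to see which parameters it actually carries
params = parse_qs(urlparse(proxy_url).query)
print(sorted(params))                   # ['api_key', 'country', 'url']
print(params['url'][0] == walmart_url)  # True

# For comparison: naive concatenation leaks Walmart's parameters into the proxy request
naive = 'https://proxy.scrapeops.io/v1/?api_key=YOUR_API_KEY&url=' + walmart_url
print(parse_qs(urlparse(naive).query)['url'][0])  # https://www.walmart.com/search?q=ipad
```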

Here is our updated Walmart Product Scraper using the ScrapeOps Proxy:

```python
import json

import requests
from bs4 import BeautifulSoup
from urllib.parse import urlencode

SCRAPEOPS_API_KEY = 'YOUR_API_KEY'


def scrapeops_url(url):
    # Route the request through the ScrapeOps Proxy API, using US IPs
    payload = {'api_key': SCRAPEOPS_API_KEY, 'url': url, 'country': 'us'}
    proxy_url = 'https://proxy.scrapeops.io/v1/?' + urlencode(payload)
    return proxy_url


product_data_list = []

## Loop Through Walmart Product URL List
## (product_url_list comes from the search scraper above)
for url in product_url_list:
    try:
        # Send the request to ScrapeOps instead of Walmart; proxied requests can take longer
        response = requests.get(scrapeops_url(url), timeout=60)

        if response.status_code == 200:
            html_response = response.text
            soup = BeautifulSoup(html_response, "html.parser")
            script_tag = soup.find("script", {"id": "__NEXT_DATA__"})

            if script_tag is not None:
                json_blob = json.loads(script_tag.get_text())
                raw_product_data = json_blob["props"]["pageProps"]["initialData"]["data"]["product"]
                # Nested objects may be missing or null, so fall back to empty dicts
                image_info = raw_product_data.get('imageInfo') or {}
                current_price = (raw_product_data.get('priceInfo') or {}).get('currentPrice') or {}

                product_data_list.append({
                    'id': raw_product_data.get('id'),
                    'type': raw_product_data.get('type'),
                    'name': raw_product_data.get('name'),
                    'brand': raw_product_data.get('brand'),
                    'averageRating': raw_product_data.get('averageRating'),
                    'manufacturerName': raw_product_data.get('manufacturerName'),
                    'shortDescription': raw_product_data.get('shortDescription'),
                    'thumbnailUrl': image_info.get('thumbnailUrl'),
                    'price': current_price.get('price'),
                    'currencyUnit': current_price.get('currencyUnit'),
                })

    except Exception as e:
        print('Error', e)

print(product_data_list)
```

Now, when our scraper makes requests, Walmart should no longer block them.
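Even through a proxy, an individual request can still fail or time out, and the loop above only prints the error and moves on. Below is a minimal, hypothetical retry wrapper with exponential backoff. `fetch` is any function that takes a URL and returns a response-like object with a `status_code`, so you could pass a lambda that calls `requests.get(scrapeops_url(u))`. The retry count and delays are arbitrary defaults, not ScrapeOps recommendations.

```python
import time


# Hypothetical helper: call fetch(url) up to max_attempts times, backing off between failures
def fetch_with_retries(fetch, url, max_attempts=3, backoff_seconds=1.0):
    for attempt in range(1, max_attempts + 1):
        try:
            response = fetch(url)
            if response.status_code == 200:
                return response
        except Exception as e:
            print('Attempt', attempt, 'failed:', e)
        if attempt < max_attempts:
            # Exponential backoff: backoff_seconds, then 2x, then 4x, ...
            time.sleep(backoff_seconds * 2 ** (attempt - 1))
    # Every attempt failed or returned a non-200 status
    return None

# Usage (not executed here):
# response = fetch_with_retries(lambda u: requests.get(scrapeops_url(u), timeout=60), url)
```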

More Web Scraping Guides

In this edition of our "How To Scrape X" series, we went through how you can scrape Walmart.com including how to bypass its anti-bot protection.

If you would like to learn how to scrape other popular websites then check out our other How To Scrape Guides:

Or if you would like to learn more about web scraping in general, then be sure to check out The Web Scraping Playbook, or check out one of our more in-depth guides: