The Best Python Headless Browsers For Web Scraping in 2024

When learning to scrape the web, we often run into the term Headless Browser. Headless browsers are web browsers that operate without a graphical user interface (GUI), meaning they run in the background without displaying any visible windows or tabs.

In this article, we'll compare the best headless browsers for web scraping in Python. We'll provide the pros and cons of each option, along with the appropriate use case for each one.

- TLDR: Best Python Headless Browsers For Web Scraping

- The 5 Best Headless Browsers For Python

- Comparing Headless Browser Options

- Case Study: "Cool Stuff" on Amazon

- Conclusion

- More Python Web Scraping Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

TLDR: Best Python Headless Browsers For Web Scraping

Headless browsers offer numerous advantages over standard HTTP requests when scraping the web. When you use a headless browser, you have the ability to do all of the following directly from a Python script:

- Appear as a legitimate user

- Simulate actual user actions: (scrolling, clicking, etc.)

- Deal with dynamic content and JavaScript

Here's a concise summary and comparison of the headless browsers that we'll review in the rest of the article:

| Feature / Browser | Selenium | Playwright | Puppeteer | Splash | ScrapeOps Headless Browser |
|---|---|---|---|---|---|
| Release Year | 2004 | 2020 | 2017 | 2014 | 2022 |
| Primary Use Case | All-in-one scraping solution | High performance, async | Lightweight, async | Lightweight, requires server | Lightweight, built-in proxy |
| JavaScript Execution | Yes | Yes | Yes | Yes | Yes |
| Ease of Use | Moderate (learning curve) | Moderate (async) | Moderate (async) | Moderate (additional setup) | Moderate (primitive) |
| Resource Intensity | High | Lower than Selenium | Lower than Playwright | Low | Very Low |
| Browser Support | Multiple types | Multiple types | Chromium only | Any HTTP client | Any HTTP client |
| Async Support | No | Yes | Yes | N/A (depends on your client) | N/A (depends on your client) |
| Proxy Support | Requires configuration | Requires configuration | Requires configuration | Requires configuration | Built-in |
| Installation | Requires WebDriver | pip install playwright | pip install pyppeteer | Docker, run as server | Simple HTTP requests |
| Difficulty with Protected Sites | Yes | Yes | Yes | Yes | No |
| Unique Features | Large ecosystem, documentation | Auto-wait, async | Smaller/more compact, async | Extremely flexible | Flexible, proxy, no server needed |

The 5 Best Headless Browsers For Python

Here are some of the best Python headless browsers commonly used for web scraping:

Selenium

First released in 2004, Selenium is probably the best-known and most widely used option on our list of headless browsers.

Selenium is pretty much an all-in-one scraping solution. It gives us the ability to control our normal browser through its WebDriver API, and there are tons of third-party tools and add-ons available to extend Selenium's functionality even further.

Selenium has given us a unique and intuitive way to scrape the web for two decades and will most likely be used for decades to come.

Pros:

- Supports multiple browser types

- JavaScript execution

- Ease of use

- Large ecosystem of documentation and third party integrations


Cons:

- Resource intensive

- Learning curve

- Maintenance

- Additional dependencies in your project

- Difficulty with protected sites

To install Selenium, first you need to make sure you have your browser of choice installed. Then you need to find the WebDriver that matches your actual browser.

You can check your version of Chrome with the following command:

```bash
google-chrome --version
```

Once you know which version of Chrome you are using, download the matching ChromeDriver. (Since Selenium 4.6, the bundled Selenium Manager can usually download a matching driver for you automatically.) After installing your driver, you can install Selenium with the following command:

```bash
pip install selenium
```

Once we're installed and ready to go, we can run this script:

from selenium import webdriver

#create an options instance

options = webdriver.ChromeOptions()

#add the argument to run in headless mode

options.add_argument("--headless")

#start webdriver with our custom options

driver = webdriver.Chrome(options=options)

#go to the site

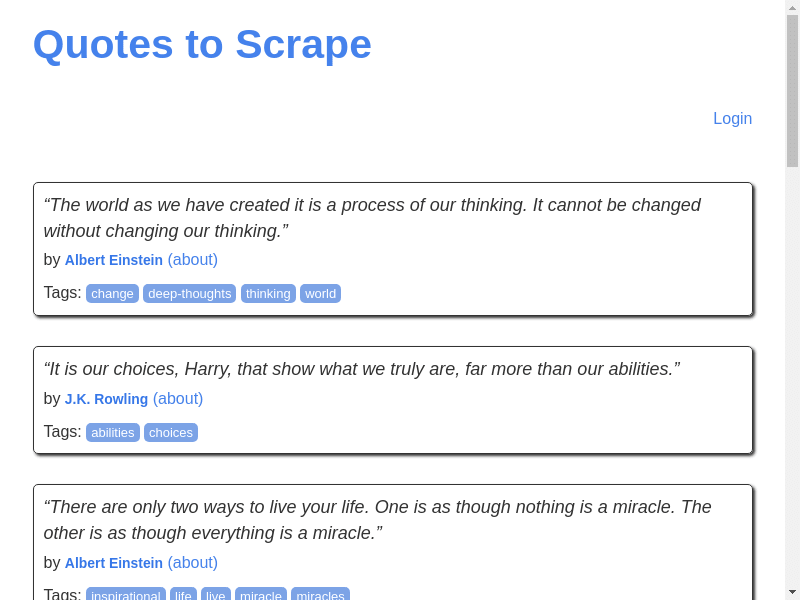

driver.get("https://quotes.toscrape.com")

#take a screenshot

driver.save_screenshot("selenium-example.png")

#close the browser gracefully

driver.quit()

In the code above, we:

- import `webdriver` from the Selenium package
- use `ChromeOptions` to create a custom set of options
- `options.add_argument("--headless")` adds the headless option to our arguments
- `webdriver.Chrome(options=options)` launches Chrome with our custom options
- `driver.get()` takes us to the site we want to scrape
- `driver.save_screenshot()` takes a screenshot of the site we're scraping
- `driver.quit()` closes the browser gracefully

If you run this script, it will output a screenshot similar to the one below:

Playwright

Playwright also gives us the convenience of a full browser, but it is built with first-class support for async functionality as well. This can greatly increase the performance of our scraper when it handles many pages at once, but it also adds a little more overhead to our code.

To use the async API, we import Python's built-in `asyncio` library, structure our script around `async` functions, and use the `await` keyword on each asynchronous action. (Playwright also ships a synchronous API in `playwright.sync_api` if you'd rather avoid `asyncio`.)

Pros:

- Supports multiple browser types

- JavaScript execution

- Auto-wait

- Async support

Cons:

- Learning curve

- More limited than the JavaScript (Node.js) version of Playwright

- Resource intensive

- Difficulty with protected sites

To install Playwright with Python, simply run the following command:

```bash
pip install playwright
```

Then download the browsers that Playwright controls:

```bash
playwright install
```

To test our Playwright install, we can use the script below.

```python
from playwright.async_api import async_playwright
import asyncio

# create an async run function
async def run(playwright):
    # launch a browser
    browser = await playwright.chromium.launch(headless=True)
    # create a new page
    page = await browser.new_page()
    # go to the site
    await page.goto("https://quotes.toscrape.com")
    # take a screenshot
    await page.screenshot(path="playwright-example.png")
    # close the browser
    await browser.close()

# async main function
async def main():
    async with async_playwright() as playwright:
        await run(playwright)

# run the main function using asyncio
asyncio.run(main())
```

In the code above, we:

- import `async_playwright` and `asyncio` in order to run Playwright and to have `async` support in our Python script
- Create an asynchronous `run` function that holds all of our actual scraping logic:
  - Launch a headless browser with `playwright.chromium.launch(headless=True)`
  - Open a new page with `browser.new_page()`
  - Go to the site with `page.goto()`
  - Take a screenshot with `page.screenshot(path="playwright-example.png")`
  - Close the browser gracefully with `browser.close()`
- Create a `main` function that starts Playwright with `async with async_playwright()` and runs our `run` function asynchronously
- Run our `main` function with `asyncio.run(main())`

This code gives us the following result:

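As you can see in the image above, our screenshot is cleaner and the header element of the page has loaded. Async support alone doesn't change what gets rendered, though, since our script awaits each step in order; the difference more likely comes from each tool's default viewport size and how it waits for the page to load.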

Puppeteer

Another headless browser, very closely related to Playwright, is Puppeteer. Both tools were originally built on the Chrome DevTools Protocol.

Puppeteer offers much of the same functionality we get from Playwright, and it even has an almost identical syntax. Puppeteer itself is a Node.js library; its unofficial Python port is called Pyppeteer.

Here are the pros and cons to using Pyppeteer:

Pros:

- JavaScript execution

- Dynamic content support

- Async by default

- Smaller/more compact than Selenium and Playwright

Cons:

- Limited to Chromium

- Learning curve

- More limited than the JavaScript version of Puppeteer

- Difficulty with protected sites

To install Pyppeteer, run the following command:

```bash
pip install pyppeteer
```

You can then finish the installation with:

```bash
pyppeteer-install
```

Warning: Don't install Pyppeteer and Python Playwright in the same Python environment, since their dependencies can conflict. If you'd like to follow both the Playwright and the Pyppeteer tutorials, either use separate virtual environments or uninstall Playwright when you're finished with that portion of the tutorial.

Once you have Pyppeteer set up and ready to go, try running this script:

```python
import asyncio
from pyppeteer import launch

# async main function
async def main():
    # launch a browser
    browser = await launch()
    # create a new page
    page = await browser.newPage()
    # go to the site
    await page.goto("https://quotes.toscrape.com")
    # take the screenshot
    await page.screenshot({"path": "pyppeteer-quotes.png"})
    # close the browser
    await browser.close()

# run the main function (asyncio.run replaces the older get_event_loop() pattern)
asyncio.run(main())
```

The code above is very similar to the Playwright example. We do the following:

- `launch()` a browser
- Create a new page with `browser.newPage()`
- `page.goto()` takes us to our site
- When taking a screenshot, we pass in our path as a dictionary/JSON object: `page.screenshot({"path": "pyppeteer-quotes.png"})`
- Gracefully shut the browser down with `browser.close()`

Here is the screenshot from Puppeteer:

Splash

Splash is another super lightweight headless browser we can use. Splash is scripted in Lua rather than Python, and it doesn't come with a native Python API.

To use Splash with Python, we actually run Splash as a local server. In this model, we send a request to the Splash server, Splash then executes the instructions, and sends the results back to us.
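Because Splash is driven over HTTP, its Lua scripting also happens through a request: the `/execute` endpoint accepts a Lua script in a `lua_source` argument and exposes the other arguments to the script as `args`. The sketch below assumes a Splash server on `localhost:8050` and uses only the standard library; `build_execute_payload()` and `run_splash_script()` are illustrative names of our own.

```python
import json
import urllib.request

# Lua script run by Splash: open the page, wait, and return a full-page PNG
LUA_SCREENSHOT = """
function main(splash, args)
  assert(splash:go(args.url))
  assert(splash:wait(args.wait))
  return splash:png{render_all=true}
end
"""


def build_execute_payload(url: str, wait: float = 2.0) -> dict:
    """JSON body for Splash's /execute endpoint; url and wait reach Lua as args."""
    return {"lua_source": LUA_SCREENSHOT, "url": url, "wait": wait}


def run_splash_script(
    payload: dict, splash: str = "http://localhost:8050", timeout: float = 60.0
) -> bytes:
    """POST the script to Splash and return the raw response body (PNG bytes here)."""
    request = urllib.request.Request(
        f"{splash}/execute",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

For example, `png_bytes = run_splash_script(build_execute_payload("https://quotes.toscrape.com"))` should return a full-page PNG, and the Lua script leaves room to add clicks, scrolling, or extra waits before the screenshot is taken.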

Pros:

- JavaScript execution

- Extremely lightweight and fast

- Extremely flexible: it can interact with any HTTP client

- Resource efficient

Cons:

- Additional setup

- Need to run a server

- Extremely primitive

- No native Python API

This requires much more overhead than the previous examples in this tutorial. We'll start by installing Splash. First, make sure you have Docker installed. Once Docker is running, run the following command:

```bash
sudo docker pull scrapinghub/splash
```

The command above finds and downloads the Docker image for Splash. Next, we need to run Splash as a server. To do that, run the following command:

```bash
docker run -p 8050:8050 scrapinghub/splash
```

Depending on your OS, you may need to use `sudo`:

```bash
sudo docker run -p 8050:8050 scrapinghub/splash
```

This runs Splash as a server on port 8050. At first, you should see a lot of dependency downloads after running this command; this is Splash finishing its setup. When Splash is ready to go, you should see a message like the one below.

Because we have such a primitive setup, we can actually control Splash using any HTTP client. For the sake of simplicity, we'll use requests in this tutorial.
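Since Splash is just an HTTP server, even Python's standard library can talk to it. As a small example, here is a sketch of a helper (our own, hypothetical `wait_for_splash()`) that polls the server until it answers, which is handy right after `docker run` while Splash is still starting up. It assumes the default address `http://localhost:8050` and treats any successful response from the root URL, where Splash serves its web UI, as "ready".

```python
import time
import urllib.request


def wait_for_splash(
    splash: str = "http://localhost:8050", timeout: float = 60.0, interval: float = 1.0
) -> bool:
    """Poll a Splash server until it answers an HTTP request or `timeout` seconds pass.

    Returns True as soon as the server responds successfully, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful response from the root URL (Splash's web UI) means it's up
            with urllib.request.urlopen(f"{splash}/", timeout=interval):
                return True
        except OSError:
            # URLError, HTTPError, refused connections and socket timeouts are all
            # OSError subclasses: the server isn't ready yet, so wait and retry
            time.sleep(interval)
    return False
```

Calling `wait_for_splash()` before the screenshot script lets you check for a `False` result instead of hitting a connection error when the container isn't up yet.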

Here is our same screenshot example using Splash. Remember to have your local Splash server running!

```python
import requests

# url of our Splash server, in this case localhost
splash_url = "http://localhost:8050/render.png"

# url of the page we want to scrape
target_url = "https://quotes.toscrape.com"

# params to tell Splash what to do
params = {
    # url we'd like to go to
    "url": target_url,
    # wait 2 seconds for JS rendering
    "wait": 2,
}

# send the request to Splash server
response = requests.get(splash_url, params=params)
# stop here if Splash returned an error instead of an image
response.raise_for_status()

# write the response to a file
with open("splash-quotes.png", "wb") as file:
    file.write(response.content)
```

In the script above we do the following:

- Create our `splash_url` variable
- Save our `target_url` as a variable
- Create a `dict` object called `params`; this object holds the instructions we'd like Splash to execute: `url` (our `target_url`) and `wait`
- Send our request to Splash with `requests.get(splash_url, params=params)`
- Use `open("splash-quotes.png", "wb")` to open a file in "write binary" mode
- `file.write(response.content)` writes the response in binary so we can save our image

Here is the resulting screenshot:

3rd Party Headless Browsers

Very similar to Splash, there are other third-party headless browsers we can use. We can even use the ScrapeOps headless browser directly, which gives us a proxy built into our browser. This way, all we need to worry about is the `requests` library and our scraping logic, and we don't need the additional overhead of running our own server.

Pros:

- Built-in proxy support

- No running a local server

- Super flexible, can take any HTTP client

- Resource efficient... the browser isn't even running on your machine

Cons:

- Slower, the proxy server acts as a middleman

- Primitive, no native Python API

- Requires an API key

- No native screenshots

When we send a request to a server, we receive a response back. When we request a specific webpage, we receive our response back in the form of HTML. Since requests has no way of taking a screenshot, and there is no native screenshot parameter with the ScrapeOps API, we can use imgkit to render our HTML into a screenshot.

First, install imgkit:

```bash
pip install imgkit
```

Afterward, we need to install wkhtmltopdf, which provides the `wkhtmltoimage` tool that imgkit uses to render our content. Because we render the HTML from a string rather than from its original URL, links to CSS files will often break, and this case is no exception, but we will still be able to render our HTML and capture the response as a picture.

```bash
sudo apt-get install wkhtmltopdf
```

Here is an example taking a screenshot using the ScrapeOps headless browser:

```python
import requests
import imgkit

# url of our proxy server
proxy_url = "https://proxy.scrapeops.io/v1/"

# url of the page we want to scrape
target_url = "https://quotes.toscrape.com"

# params to authenticate and tell the browser what to do
params = {
    # your scrapeops api key
    "api_key": "YOUR-SUPER-SECRET-API-KEY",
    # url we'd like to go to
    "url": target_url,
    # wait 2 seconds for rendering
    "wait": 2,
}

# send the request to proxy server
response = requests.get(proxy_url, params=params, timeout=120)
# stop here if the proxy returned an error
response.raise_for_status()

# use imgkit to convert the html to a png file
imgkit.from_string(response.text, "scrapeops-quotes.png")
```

In this example, we:

- Create a `proxy_url` variable
- Create a `target_url` variable
- Create a `dict` of params to pass to the proxy server: `"api_key"`, `"url"`, and `"wait"`
- Send a request to the server
- Convert our HTML response into a `.png` file using `imgkit`

When we run this code, we receive the following screenshot:

Comparing Headless Browser Options

When using headless browsers for scraping, we have all sorts of options ranging from a built-in browser all the way to running a browser on an external machine.

Depending on how you want to code, you can even use regular old HTTP requests to a server in the middle (like Splash or ScrapeOps).

When To Use Each of These Headless Browsers

- Selenium: You're very comfortable writing in traditional Python and you want an all-in-one solution for your headless browsing needs.
- Playwright: You want many of the features available in Selenium, but you want something that consumes fewer resources and supports `async` programming.
- Puppeteer: You enjoy the `async` features available in Playwright, but you need something more lightweight and you don't mind using plain old Chromium as a browser.
- Splash: You want a super lightweight scraping client. You don't mind tinkering with `requests` and executing your browser instructions in their params.
- ScrapeOps Headless Browser: You're similar to a Splash user and don't mind dealing with lower-level code. You're comfortable executing your page actions through request parameters, and you need a solid proxy that can get through even the strongest anti-bots with ease.

Case Study: "Cool Stuff" on Amazon

In this section, we're going to pit these browsers against each other to show where they really shine. We're simply going to look up the phrase "cool stuff" on Amazon.
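The timing code isn't included in the examples below, and network conditions can vary a lot between runs. If you'd like to reproduce the comparison yourself, a minimal sketch is to wrap each scraper in a zero-argument function and time several calls with `time.perf_counter()`. `time_runs()` is a hypothetical helper name, not something from the scripts in this article.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_runs(func: Callable[[], object], runs: int = 5) -> Dict[str, float]:
    """Call `func` `runs` times and summarise the wall-clock durations in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    durations: List[float] = []
    for _ in range(runs):
        # perf_counter is a high-resolution clock meant for measuring intervals
        start = time.perf_counter()
        func()
        durations.append(time.perf_counter() - start)
    return {
        "min": min(durations),
        "max": max(durations),
        "mean": statistics.mean(durations),
    }
```

For instance, if you move the Selenium script into a function called `scrape_with_selenium()` (hypothetical), `time_runs(scrape_with_selenium, runs=5)` returns the fastest, slowest, and average run in seconds, the same kind of range we report for Splash.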

Selenium

```python
from selenium import webdriver

# create an options instance
options = webdriver.ChromeOptions()

# add the argument to run in headless mode
options.add_argument("--headless")

# start webdriver with our custom options
driver = webdriver.Chrome(options=options)

# go to the site
driver.get("https://www.amazon.com/s?k=cool+stuff")

# take a screenshot
driver.save_screenshot("selenium-amazon.png")

# close the browser gracefully
driver.quit()
```

Selenium took 3.148 seconds to access the page and take a screenshot of the result.

Here is the result:

As you can see above, Selenium only captures a portion of the page in its screenshot, and we have a somewhat slow load time of 3.148 seconds. Selenium shows its strength best as an all-around browser.
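Selenium's Chrome driver has no single built-in full-page screenshot call (Selenium 4's Firefox driver does offer `save_full_page_screenshot()`). A common workaround in headless Chrome, sketched below, is to ask the page for its total height and resize the window to match before taking the screenshot. `capture_full_height()` is our own hypothetical helper, and very tall pages may still be clipped by the browser's own limits, so treat this as a starting point.

```python
def capture_full_height(driver, path: str, width: int = 1920) -> int:
    """Resize a (headless) Selenium window to the page's full height, then screenshot it.

    `driver` is any Selenium WebDriver; returns the height the window was resized to.
    """
    # Ask the browser how tall the rendered document is, in CSS pixels
    height = int(
        driver.execute_script(
            "return Math.max(document.body.scrollHeight,"
            " document.documentElement.scrollHeight);"
        )
    )
    # Headless windows can be resized taller than the physical screen
    driver.set_window_size(width, height)
    driver.save_screenshot(path)
    return height
```

In the Selenium example above, you could call `capture_full_height(driver, "selenium-amazon-full.png")` right after `driver.get(...)` in place of `driver.save_screenshot(...)`.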

Playwright

Now, we'll do the same with Playwright. With Playwright, we get the ability to take a full-page screenshot.

```python
from playwright.async_api import async_playwright
import asyncio

# create an async run function
async def run(playwright):
    # launch a browser
    browser = await playwright.chromium.launch(headless=True)
    # create a new page
    page = await browser.new_page()
    # go to the site
    await page.goto("https://www.amazon.com/s?k=cool+stuff")
    # take a screenshot of the full page
    await page.screenshot(path="playwright-amazon.png", full_page=True)
    # close the browser
    await browser.close()

# async main function
async def main():
    async with async_playwright() as playwright:
        await run(playwright)

# run the main function using asyncio
asyncio.run(main())
```

Here is the result:

This code took 3.937 seconds to run: quite a bit slower than Selenium, but Playwright gave us a screenshot of the full page. This is where Playwright really shines. Selenium's Chrome driver has no built-in equivalent of `full_page=True` (only Selenium 4's Firefox driver offers a full-page screenshot method), while Playwright gives us full, accurate, readable results from a single screenshot call.

Pyppeteer

Here is the Pyppeteer example to do the same thing:

```python
import asyncio
from pyppeteer import launch

# async main function
async def main():
    # launch a browser
    browser = await launch()
    # create a new page
    page = await browser.newPage()
    # go to the site
    await page.goto("https://www.amazon.com/s?k=cool+stuff")
    # take a screenshot of the full page
    await page.screenshot({"path": "pyppeteer-amazon.png", "fullPage": True})
    # close the browser
    await browser.close()

# run the main function (asyncio.run replaces the older get_event_loop() pattern)
asyncio.run(main())
```

Our Puppeteer example took 4.123 seconds to execute. It is a bit slower than Playwright, but our screenshot is just as accurate:

Pyppeteer is best when you need the functionality of Playwright, but not all the bells and whistles it comes with. You don't need three different browsers built into the package, and you don't mind passing your options as Python dictionaries.

Splash

Here is the code to do the same using Splash with Requests (make sure your Splash server is running!):

```python
import requests

# url of our Splash server, in this case localhost
splash_url = "http://localhost:8050/render.png"

# url of the page we want to scrape
target_url = "https://www.amazon.com/s?k=cool+stuff"

# params to tell Splash what to do
params = {
    # url we'd like to go to
    "url": target_url,
    # wait 2 seconds for JS rendering
    "wait": 2,
}

# send the request to Splash server
response = requests.get(splash_url, params=params)
# stop here if Splash returned an error instead of an image
response.raise_for_status()

# write the response to a file
with open("splash-amazon.png", "wb") as file:
    file.write(response.content)
```

Here is the result:

Similar to Selenium, with these parameters we don't get a full page. Depending on the run, the code took between 4.249 and 6.04 seconds, with an average run of 4.777 seconds. Splash is a great fit if you are looking to use minimal resources and scrape in a lightweight format.
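Splash's `render.png` endpoint also accepts a `render_all=1` argument, which extends the viewport to the whole page before rendering (Splash requires a non-zero `wait` when it's set), plus an optional `viewport` such as `1280x800`. Here's a sketch that builds such a URL with the standard library; `splash_png_url()` and `save_png()` are hypothetical helper names, and the server address assumes the local Docker setup from earlier.

```python
import urllib.request
from urllib.parse import urlencode


def splash_png_url(
    target_url: str,
    wait: float = 2,
    render_all: bool = False,
    viewport: str = "",
    splash: str = "http://localhost:8050",
) -> str:
    """Build a render.png URL for a Splash server."""
    if render_all and wait <= 0:
        # Splash rejects render_all without a non-zero wait
        raise ValueError("render_all needs wait > 0")
    params: dict = {"url": target_url, "wait": wait}
    if render_all:
        params["render_all"] = 1
    if viewport:
        params["viewport"] = viewport  # e.g. "1280x800"
    # urlencode percent-encodes the target URL so its own ?k=... survives intact
    return f"{splash}/render.png?{urlencode(params)}"


def save_png(url: str, path: str, timeout: float = 90.0) -> None:
    """Download the rendered PNG from Splash and write it to `path`."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        data = response.read()
    with open(path, "wb") as file:
        file.write(data)
```

For example, `save_png(splash_png_url("https://www.amazon.com/s?k=cool+stuff", render_all=True), "splash-amazon-full.png")` should request a full-page render of the same search results.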

ScrapeOps Headless Browser

Here is our example using the ScrapeOps Headless Browser:

```python
import requests
from bs4 import BeautifulSoup
import imgkit

# url of our proxy server
proxy_url = "https://proxy.scrapeops.io/v1/"

# url of the page we want to scrape
target_url = "https://www.amazon.com/s?k=cool+stuff"

# params to authenticate and tell the browser what to do
params = {
    # your scrapeops api key
    "api_key": "YOUR-SUPER-SECRET-API-KEY",
    # url we'd like to go to
    "url": target_url,
}

# send the request to proxy server
response = requests.get(proxy_url, params=params, timeout=120)
response.raise_for_status()

# parse the HTML and strip out every <script> tag before rendering
soup = BeautifulSoup(response.text, "html.parser")
for script in soup.find_all("script"):
    script.decompose()

# use imgkit to convert the cleaned-up HTML (not just its text) to a png file
imgkit.from_string(str(soup), "scrapeops-amazon.png")
```

This example took 4.716 seconds to run and here is the resulting screenshot:

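As you probably noticed, all styling from this page is completely gone. This isn't due to ScrapeOps, but to how we render the page locally: imgkit only receives an HTML string, so the page's relative stylesheet links can't be resolved, and we remove the `<script>` tags before taking our "screenshot" because wkhtmltoimage's older rendering engine can't be relied on to run a modern site's JavaScript.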
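One way to recover some of that styling, sketched here as an assumption rather than a tested fix, is to add a `<base href>` tag pointing at the original site so the relative stylesheet and image links in the HTML string resolve against it. Whether those files actually load depends on wkhtmltoimage being able to fetch them. `add_base_href()` is a hypothetical helper of our own.

```python
import html
import re


def add_base_href(page_html: str, base_url: str) -> str:
    """Insert a <base href> tag so relative links resolve against `base_url`.

    If the document already declares a <base>, it's returned unchanged.
    """
    if re.search(r"<base\b", page_html, flags=re.IGNORECASE):
        return page_html
    base_tag = f'<base href="{html.escape(base_url, quote=True)}">'
    # \b keeps this from matching <header> tags
    head = re.search(r"<head\b[^>]*>", page_html, flags=re.IGNORECASE)
    if head:
        # Put <base> first inside <head> so it applies to every later link
        return page_html[: head.end()] + base_tag + page_html[head.end():]
    # No <head> at all: prepend the tag and let the renderer sort it out
    return base_tag + page_html
```

You would then render `add_base_href(str(soup), target_url)` instead of the bare HTML when calling `imgkit.from_string()`.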

Even more lightweight than Splash, we have ScrapeOps Headless Browser. ScrapeOps is actually best used in combination with another headless browser.

For example, if you want to browse Amazon via ScrapeOps, you would integrate one of the other headless browsers with the ScrapeOps Proxy.

As a headless browser itself, ScrapeOps is usable, but you should really only depend on the ScrapeOps Browser by itself if you don't have the resources to run one of the other headless browsers mentioned in this article.

Understanding Headless Browsers

What is a Headless Browser?

A headless browser gives us the ability to surf the web right from a Python script. Because it doesn't have a head (GUI), we don't need to waste valuable resources on our machine. Some great examples of headless browsers are:

- Selenium

- Playwright

- Puppeteer

- Splash

- ScrapeOps Headless Browser

Why Use Headless Browsers Over HTTP Clients

Headless browsers offer a more comprehensive and versatile solution for tasks that involve interacting with dynamic web content, executing JavaScript, and simulating user behavior.

- Support for page interactions: Headless browsers allow you to interact with web pages programmatically, simulating user actions like clicking buttons, filling out forms, and scrolling. Unlike traditional HTTP clients, which only fetch static HTML content, headless browsers provide a full browsing environment that enables dynamic interaction with web pages.

- Screenshots: Headless browsers can capture screenshots of web pages, allowing you to visually inspect the rendered content or save snapshots for documentation and reporting purposes.

- Appears more like a real browser to the server: When making requests to a server, headless browsers mimic the behavior of real web browsers more closely compared to traditional HTTP clients.

- Abstracting lower-level code in headless browsers: Headless browsers provide higher-level APIs and libraries that abstract away many of the complexities involved in making HTTP requests and handling responses.
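To see the difference between an HTTP client and a headless browser for yourself, the sketch below counts `<div class="quote">` elements in two copies of a page: the raw HTML from `urllib` and the DOM after Playwright has run the page's JavaScript. It assumes quotes.toscrape.com's JavaScript-rendered variant at `https://quotes.toscrape.com/js/`; `QuoteCounter`, `count_quotes()`, `fetch_static()`, and `fetch_rendered()` are our own illustrative names.

```python
import urllib.request
from html.parser import HTMLParser


class QuoteCounter(HTMLParser):
    """Count <div> elements whose class list contains "quote"."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "quote" in classes:
            self.count += 1


def count_quotes(page_html: str) -> int:
    parser = QuoteCounter()
    parser.feed(page_html)
    parser.close()
    return parser.count


def fetch_static(url: str) -> str:
    """Plain HTTP client: the HTML exactly as the server sent it."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_rendered(url: str) -> str:
    """Headless browser: the DOM after the page's JavaScript has run."""
    from playwright.sync_api import sync_playwright  # needs `pip install playwright`

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        rendered = page.content()
        browser.close()
    return rendered
```

On a page whose quotes are inserted by JavaScript, we'd expect `count_quotes(fetch_static(url))` to come back lower than `count_quotes(fetch_rendered(url))`, because a plain HTTP client never runs the page's scripts.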

Differences Between Headless and Traditional Browsers

When using any headless browser, we get to take advantage of the following benefits:

- Less resources: When we don't have to run a GUI, our machine has more resources free to execute our logic.

- Speed: When we automate processes with a headless browser, our instructions can be executed much faster than a human user would be able to execute them.

- Consistency: Because headless browsers run using a predetermined script, once you have a decent script, you are not susceptible to random human errors and inconsistencies.

- Data Aggregation: Computers are much better suited to both scrape and aggregate data than people. They have more storage and they cache and store that data efficiently.

Key Features to Look for in a Headless Browser for Web Scraping

When choosing a headless browser, all of the following are important to think about:

- JavaScript Support: On the modern web, we often run into sites that are protected by anti-bot software. This software checks traffic by sending it a JavaScript Challenge. When scraping the web, your scraper needs to be able to solve these JS challenges.

- Custom User Agents: Another challenge posed by anti-bots comes through header analysis. If we can send custom user agents from our scraper, it makes it easier for us to appear more like a standard browser.

- Proxy Support: When scraping in production, it is best practice to use a proxy. A decent headless browser should make this process easy.

- Session/Cookie Management: Authentication can be a major issue when scraping the web. Login sessions are typically managed through cookies. A decent headless browser will allow you to add cookies from a previous browsing session (whether that be from your headless browser or your normal one) to your current headless browsing session. This allows you to easily deal with logging in and out of different sites.

- Screenshot Capabilities: Screenshots are perhaps the most convenient way to capture data on the web. Screenshots are both extremely easy to take and also extremely easy for a human to review. While not an absolute necessity, they really do make the scraping jobs much easier and faster.
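Several of these features map directly onto options in the libraries covered above. As an example, here is a sketch using Playwright's sync API that sets a custom user agent, an optional proxy, and cookies copied from a `Cookie` header (for instance, one copied out of your normal browser's developer tools). `cookie_header_to_playwright()` and `open_page_with_session()` are hypothetical helpers, and any proxy address you pass in is your own.

```python
from typing import Dict, List, Optional


def cookie_header_to_playwright(
    header: str, domain: str, path: str = "/"
) -> List[Dict[str, str]]:
    """Turn a "name=value; name2=value2" Cookie header into Playwright cookie dicts."""
    cookies = []
    for pair in header.split(";"):
        # partition splits on the first "=" only, so values may contain "="
        name, sep, value = pair.strip().partition("=")
        if not sep or not name:
            continue  # skip empty or malformed fragments
        cookies.append({"name": name, "value": value, "domain": domain, "path": path})
    return cookies


def open_page_with_session(
    url: str,
    user_agent: str,
    cookies: List[Dict[str, str]],
    proxy_server: Optional[str] = None,
) -> str:
    """Load `url` with a custom user agent, pre-set cookies and an optional proxy."""
    from playwright.sync_api import sync_playwright  # needs `pip install playwright`

    launch_options: dict = {"headless": True}
    if proxy_server:
        # e.g. "http://my-proxy.example:8000" (placeholder address)
        launch_options["proxy"] = {"server": proxy_server}
    with sync_playwright() as playwright:
        browser = playwright.chromium.launch(**launch_options)
        # A browser context holds its own user agent, cookies and storage
        context = browser.new_context(user_agent=user_agent)
        context.add_cookies(cookies)
        page = context.new_page()
        page.goto(url)
        page_html = page.content()
        browser.close()
    return page_html
```

Playwright can also save a whole session with `context.storage_state(path="state.json")` and restore it later with `browser.new_context(storage_state="state.json")`, which avoids copying cookies by hand.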

Conclusion

When scraping the web, headless browsers bring us numerous benefits: JavaScript execution, page interactions, a more legitimate appearance, and the ability to abstract away much of the low-level boilerplate we'd need to write in order to do everything with a standard HTTP client.

- Selenium: an all-in-one scraping solution for everybody.

- Playwright: like Selenium, but supports `async` programming.

- Puppeteer: Very similar to Playwright, but way more compact and resource friendly.

- Splash: Even more lightweight and flexible. We can interact with it via any HTTP client.

- ScrapeOps Headless Browser: Lightweight and flexible like Splash, except you don't even need to run your own server! Never get blocked by any site because you have a proxy built into your browser!

If you are interested in any of the tools or frameworks used in this article, take a look at their docs below!

More Python Web Scraping Guides

Now that you have a decent understanding of headless browsers, go build something! Wanna learn more but not sure where to start?

Check out The Python Web Scraping Playbook to become a Python web scraping pro!

You can also take a look at the articles below!