freeCodeCamp Scrapy Beginners Course Part 10: Deploying & SchedulingSpiders With Scrapyd

In Part 10 of the Scrapy Beginner Course, we go through how you can deploy and run your spiders in the cloud with Scrapyd.

There are several ways to run and deploy your scrapers to the cloud which we will cover in this course:

However, in Part 10 we will show you a method using Scrapyd:

- What Is Scrapyd?

- How to Setup Scrapyd

- Controlling Spiders With Scrapyd

- Scrapyd Dashboards

- Integrating Scrapyd with ScrapeOps

- Integrating Scrapyd with ScrapydWeb

The code for this part of the course is available on Github here!

If you prefer video tutorials, then check out the video version of this course on the freeCodeCamp channel here.

This guide is part of the 12 Part freeCodeCamp Scrapy Beginner Course where we will build a Scrapy project end-to-end from building the scrapers to deploying on a server and run them every day.

If you would like to skip to another section then use one of the links below:

- Part 1: Course & Scrapy Overview

- Part 2: Setting Up Environment & Scrapy

- Part 3: Creating Scrapy Project

- Part 4: First Scrapy Spider

- Part 5: Crawling With Scrapy

- Part 6: Cleaning Data With Item Pipelines

- Part 7: Storing Data In CSVs & Databases

- Part 8: Faking Scrapy Headers & User-Agents

- Part 9: Using Proxies With Scrapy Spiders

- Part 10: Deploying & Scheduling Spiders With Scrapyd

- Part 11: Deploying & Scheduling Spiders With ScrapeOps

- Part 12: Deploying & Scheduling Spiders With Scrapy Cloud

The code for this project is available on Github here!

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

What Is Scrapyd?

Scrapyd is an open source package that allows us to deploy Scrapy spiders to a server and run them remotely using a JSON API. Scrapyd allows you to:

- Run Scrapy jobs.

- Pause & Cancel Scrapy jobs.

- Manage Scrapy project/spider versions.

- Access Scrapy logs remotely.

Scrapyd is a great option for developers who want an easy way to manage production Scrapy spiders that run on a remote server.

With Scrapyd you can manage multiple servers from one central point by using a ready-made Scrapyd management tool like ScrapeOps, an open source alternative or by building your own.

Here you can check out the full Scrapyd docs and Github repo.

How to Setup Scrapyd

Getting Scrapyd setup is simple, and you can run it locally or on a server.

First step is to install Scrapyd:

pip install scrapyd

And then start the server by using the command:

scrapyd

This will start Scrapyd running on http://localhost:6800/. You can open this URL in your browser and you should see the following screen:

Deploying Spiders To Scrapyd

To run jobs using Scrapyd, we first need to eggify and deploy our Scrapy project to the Scrapyd server.

To do this, there is a library called scrapyd-client that makes this process very simple.

First, let's install scrapyd-client:

pip install git+https://github.com/scrapy/scrapyd-client.git

Once installed, navigate to our bookscraper project we want to deploy and open our scrapyd.cfg file, which should be located in your project's root directory.

You should see something like this, with the "bookscraper" text being replaced by your Scrapy projects name:

## scrapy.cfg

[settings]

default = bookscraper.settings

[deploy]

#url = http://localhost:6800/

project = bookscraper

Here the scrapyd.cfg configuration file defines the endpoint your Scrapy project should be deployed to. To enable us to deploy our project to Scrapyd, we just need to uncomment the url value if we want to deploy it to a locally running Scrapyd server.

## scrapy.cfg

[settings]

default = bookscraper.settings

[deploy]

url = http://localhost:6800/

project = bookscraper

Then run the following command in your Scrapy projects root directory:

scrapyd-deploy default

This will then eggify your Scrapy project and deploy it to your locally running Scrapyd server. You should get a result like this in your terminal if it was successful:

$ scrapyd-deploy default

Packing version 1640086638

Deploying to project "bookscraper" in http://localhost:6800/addversion.json

Server response (200):

{"node_name": "DESKTOP-67BR2", "status": "ok", "project": "bookscraper", "version": "1640086638", "spiders": 1}

Now your Scrapy project has been deployed to your Scrapyd and is ready to be run.

Controlling Spiders With Scrapyd

Scrapyd comes with a minimal web interface which can be accessed at http://localhost:6800/, however, this interface is just a rudimentary overview of what is running on a Scrapyd server and doesn't allow you to control the spiders deployed to the Scrapyd server.

To control your spiders with Scrapyd you have 3 options:

- Scrapyd JSON API

- Python-Scrapyd-API Library

- Scrapyd Dashboards

For this tutorial we will focus on using free Scrapyd dashboards like ScrapeOps & ScrapydWeb. However, if you would like to learn more about the other options then check out our in-depth Scrapyd guide.

Scrapyd Dashboards

Using Scrapyd's JSON API to control your spiders is possible, however, it isn't ideal as you will need to create custom workflows on your end to monitor, manage and run your spiders. Which can become a major project in itself if you need to manage spiders spread across multiple servers.

Other developers ran into this problem so luckily for us, they decided to create free and open-source Scrapyd dashboards that can connect to your Scrapyd servers so you can manage everything from a single dashboard.

There are many different Scrapyd dashboard and admin tools available:

If you'd like to choose the best one for your requirements then be sure to check out our Guide to the Best Scrapyd Dashboards here.

For this tutorial we will focus on using free Scrapyd dashboards like ScrapeOps & ScrapydWeb.

Integrating Scrapyd with ScrapeOps

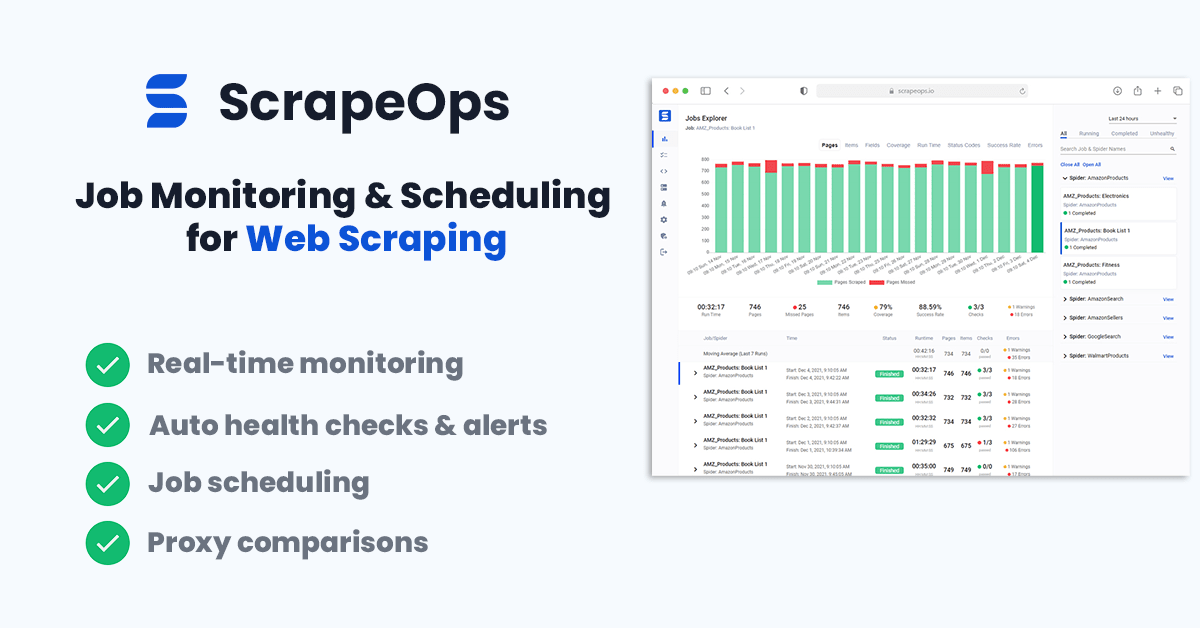

ScrapeOps is a free monitoring tool for web scraping that also has a Scrapyd dashboard that allows you to schedule, run and manage all your scrapers from a single dashboard.

Live demo here: ScrapeOps Demo

With a simple 30 second install ScrapeOps gives you all the monitoring, alerting, scheduling and data validation functionality you need for web scraping straight out of the box.

Unlike the other Scrapyd dashboard, ScrapeOps is a full end-to-end web scraping monitoring and management tool dedicated to web scraping that automatically sets up all the monitors, health checks and alerts for you.

ScrapeOps Features

Once setup, ScrapeOps will:

- 🕵️♂️ Monitor - Automatically monitor all your scrapers.

- 📈 Dashboards - Visualise your job data in dashboards, so you see real-time & historical stats.

- 💯 Data Quality - Validate the field coverage in each of your jobs, so broken parsers can be detected straight away.

- 📉 Auto Health Checks - Automatically check every jobs performance data versus its 7 day moving average to see if its healthy or not.

- ✔️ Custom Health Checks - Check each job with any custom health checks you have enabled for it.

- ⏰ Alerts - Alert you via email, Slack, etc. if any of your jobs are unhealthy.

- 📑 Reports - Generate daily (periodic) reports, that check all jobs versus your criteria and let you know if everything is healthy or not.

ScrapeOps Integration

There are two steps to integrate ScrapeOps with your Scrapyd servers:

- Install ScrapeOps Logger Extension

- Connect ScrapeOps to Your Scrapyd Servers

Note: You can't connect ScrapeOps to a Scrapyd server that is running locally, and isn't offering a public IP address available to connect to.

Once setup you will be able to schedule, run and manage all your Scrapyd servers from one dashboard.

Step 1: Install Scrapy Logger Extension

For ScrapeOps to monitor your scrapers, create dashboards and trigger alerts you need to install the ScrapeOps logger extension in each of your Scrapy projects.

Simply install the Python package:

pip install scrapeops-scrapy

And add 3 lines to your settings.py file:

## settings.py

## Add Your ScrapeOps API key

SCRAPEOPS_API_KEY = 'YOUR_API_KEY'

## Add In The ScrapeOps Extension

EXTENSIONS = {

'scrapeops_scrapy.extension.ScrapeOpsMonitor': 500,

}

## Update The Download Middlewares

DOWNLOADER_MIDDLEWARES = {

'scrapeops_scrapy.middleware.retry.RetryMiddleware': 550,

'scrapy.downloadermiddlewares.retry.RetryMiddleware': None,

}

From there, your scraping stats will be automatically logged and automatically shipped to your dashboard.

Step 2: Connect ScrapeOps to Your Scrapyd Servers

The next step is giving ScrapeOps the connection details of your Scrapyd servers so that you can manage them from the dashboard.

Enter Scrapyd Server Details

Within your dashboard go to the Servers page and click on the Add Scrapyd Server at the top of the page.

In the dropdown section then enter your connection details:

- Server Name

- Server Domain Name (optional)

- Server IP Address

Whitelist Our Server (Optional)

Depending on how you are securing your Scrapyd server, you might need to whitelist our IP address so it can connect to your Scrapyd servers. There are two options to do this:

Option 1: Auto Install (Ubuntu)

SSH into your server as root and run the following command in your terminal.

wget -O scrapeops_setup.sh "https://assets-scrapeops.nyc3.digitaloceanspaces.com/Bash_Scripts/scrapeops_setup.sh"; bash scrapeops_setup.sh

This command will begin the provisioning process for your server, and will configure the server so that Scrapyd can be managed by Scrapeops.

Option 2: Manual Install

This step is optional but needed if you want to run/stop/re-run/schedule any jobs using our site. If we cannot reach your server via port 80 or 443 the server will be listed as read only.

The following steps should work on Linux/Unix based servers that have UFW firewall installed.:

Step 1: Log into your server via SSH

Step 2: Enable SSH'ing so that you don't get blocked from your server

sudo ufw allow ssh

Step 3: Allow incoming connections from 46.101.44.87

sudo ufw allow from 46.101.44.87 to any port 443,80 proto tcp

Step 4: Enable ufw & check firewall rules are implemented

sudo ufw enable

sudo ufw status

Step 5: Install Nginx & setup a reverse proxy to let connection from scrapeops reach your scrapyd server.

sudo apt-get install nginx -y

Add the proxy_pass & proxy_set_header code below into the "location" block of your nginx default config file (default file usually found in /etc/nginx/sites-available)

proxy_pass http://localhost:6800/;

proxy_set_header X-Forwarded-Proto http;

Reload your nginx config

sudo systemctl reload nginx

Once this is done you should be able to run, re-run, stop, schedule jobs for this server from the ScrapeOps dashboard.

Integrating Scrapyd with ScrapydWeb

Another popular option is ScrapydWeb.

ScrapydWeb is a great solution for anyone looking for a robust spider management tool that can be integrated with their Scrapyd servers.

With ScrapydWeb, you can schedule, run and see the stats from all your jobs across all your servers on a single dashboard. ScrapydWeb supports all the Scrapyd JSON API endpoints so can also stop jobs mid-crawl and delete projects without having to log into your Scrapyd server.

When combined with LogParser, ScrapydWeb will also extract your Scrapy logs from your server and parse them into an easier to understand way.

Although, ScrapydWeb has a lot of spider management functionality, its monitoring/job visualisation capabilities are quite limited, and there are a number of user experience issues that make it less than ideal if you plan to rely on it completely as your main spider monitoring solution.

Installing ScrapydWeb

Getting ScrapydWeb installed and setup is super easy. (This is a big reason why it has become so popular).

To get started we need to install the latest version of ScrapydWeb:

pip install --upgrade git+https://github.com/my8100/scrapydweb.git

Next to run Scrapydweb we just need to use the command:

scrapydweb

This will build a ScrapydWeb instance for you, create the necessary settings files and launch a ScrapydWeb server on http://127.0.0.1:5000.

Sometimes the first time you run scrapydweb it will just create the ScrapydWeb files but won't start the server. If this happens just run the scrapydweb command again and it will start the server.

Now, when you open http://127.0.0.1:5000 in your browser you should see a screen like this.

Install Logparser

With the current setup you can use ScrapydWeb to schedule and run your scraping jobs, but you won't see any stats for your jobs in your dashboard.

Not to worry however, the developers behind ScrapydWeb have created a library called Logparser to do just that.

If you run Logparser in the same directory as your Scrapyd server, it will automatically parse your Scrapy logs and make them available to your ScrapydWeb dashboard.

To install Logparser, enter the command:

pip install logparser

Then in the same directory as your Scrapyd server, run:

logparser

This will start a daemon that will automatically parse your Scrapy logs for ScrapydWeb to consume.

Note: If you are running Scrapyd and ScrapydWeb on the same machine then it is recommended to set the LOCAL_SCRAPYD_LOGS_DIR path to your log files directory and ENABLE_LOGPARSER to True in your ScrapydWeb's settings file.

At this point, you will have a running Scrapyd server, a running logparser instance, and a running ScrapydWeb server. From here, we are ready to use ScrapydWeb to schedule, run and monitor our jobs.

Connecting To Scrapyd Servers

Adding Scrapyd servers to ScrapydWeb dashboard is pretty simple. You just need to edit your ScrapydWeb settings file.

By default ScrapydWeb is setup to connect to locally running Scrapyd server on localhost:6800.

SCRAPYD_SERVERS = [

'127.0.0.1:6800',

# 'username:password@localhost:6801#group', ## string format

#('username', 'password', 'localhost', '6801', 'group'), ## tuple format

]

If you want to connect to remote Scrapyd servers, then just add them to the above array, and restart the server. You can add servers in both a string or tuple format.

Note: you need to make sure bind_address = 0.0.0.0 in your settings file, add restart Scrapyd to make it visible externally.

With this done, you should see something like this on your servers page: http://127.0.0.1:5000/1/servers/.

Running Spiders

Now, with your server connected we are able to schedule and run spiders from the projects that have been deployed to our Scrapyd server.

Navigate to the Run Spider page (http://127.0.0.1:5000/1/schedule/), and you will be able to select and run spiders.

This will then send a POST request to the /schedule.json endpoint of your Scrapyd server, triggering Scrapyd to run your spider.

You can also schedule jobs to run periodically by enabling the timer task toggle and entering your cron details.

Job Stats

When Logparser is running, ScrapydWeb will periodicially poll the Scrapyd logs endpoint and display your job stats so you can see how they have performed.

Next Steps

In this part, we looked at how we can use Scrapyd to deploy and run our spiders in the cloud, and control them using ScrapeOps and ScrapydWeb.

So in Part 11, we will look at how you can use ScrapeOps free server manager & job scheduler to deply and run Scrapy spiders in the cloud.

All parts of the 12 Part freeCodeCamp Scrapy Beginner Course are as follows:

- Part 1: Course & Scrapy Overview

- Part 2: Setting Up Environment & Scrapy

- Part 3: Creating Scrapy Project

- Part 4: First Scrapy Spider

- Part 5: Crawling With Scrapy

- Part 6: Cleaning Data With Item Pipelines

- Part 7: Storing Data In CSVs & Databases

- Part 8: Faking Scrapy Headers & User-Agents

- Part 9: Using Proxies With Scrapy Spiders

- Part 10: Deploying & Scheduling Spiders With Scrapyd

- Part 11: Deploying & Scheduling Spiders With ScrapeOps

- Part 12: Deploying & Scheduling Spiders With Scrapy Cloud