Build Your Own Custom Scrapy Middleware, Full Example

What makes Scrapy great isn't just the fact that it comes with so much functionality out of the box; it's that Scrapy's core functionality is easily customizable.

Scrapy's built-in middlewares are very powerful, but creating custom ones allows you to tailor Scrapy to your specific use cases.

In this guide, we'll go step-by-step through the process of creating a custom Scrapy Downloader Middleware by building our own proxy middleware, which you can adapt for your projects.

- How Do Scrapy Middlewares Work?

- Scrapy Middleware Use Cases

- Setting Up Your Scrapy Project for Middleware Development

- Planning A Scrapy Proxy Middleware

- Writing the Custom Proxy Middleware

- Testing Your Middleware

- Real-World Example: Building a Custom Retry Middleware

- Conclusion

- More Python Web Scraping Guides

How Do Scrapy Middlewares Work?

Scrapy middlewares sit either between the Scrapy engine and the downloader, or between the engine and the spider.

- Downloader Middleware: When the middleware sits between the engine and the downloader, it's called a downloader middleware. It handles things at the request and response level. Common use cases for downloader middleware include proxies, retry logic, and custom headers or user-agents.

- Spider Middleware: When the middleware sits between the engine and the spider, it's called a spider middleware. It processes responses on their way into your spider, along with the items and requests your spider yields. Use it to drop responses and items, or to alter responses before they reach the extraction phase.
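To make the spider-middleware side concrete, here is a minimal sketch of one that drops incomplete items. It uses Scrapy's process_spider_output(response, result, spider) hook, which receives everything a spider callback yields. The class name, the hard-coded "url" field, and the dict-only check are assumptions for illustration; items defined as Item classes or dataclasses would need a different check.

```python
import logging

logger = logging.getLogger(__name__)


class RequireFieldSpiderMiddleware:
    """Hypothetical spider middleware: drops dict items missing a required field."""

    # Assumption: the required field is hard-coded for this example.
    required_field = "url"

    def process_spider_output(self, response, result, spider):
        # `result` holds the items and requests yielded by the spider callback.
        for element in result:
            if isinstance(element, dict) and not element.get(self.required_field):
                # Drop the incomplete item instead of passing it on to the pipelines.
                logger.debug("Dropping item without %r: %r", self.required_field, element)
                continue
            # Requests and complete items pass through unchanged.
            yield element
```

To try it, you would register the class under SPIDER_MIDDLEWARES in settings.py; the module path depends on where you save the file.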

Scrapy Middleware Use Cases

Because of where they sit in Scrapy's architecture, middlewares are excellent for adding all sorts of functionality.

Take a look at the list below to see what you can do with Scrapy middleware.

- Rotating Proxies: Implement rotating proxies when making requests.

- Managing IP Bans: Use middleware to detect whether your IP has been banned. If it has, you can rotate to a new proxy and IP address.

- Handling Timeouts: Middleware can decide how long the scraper should wait for a response and raise an error when a response takes too long.

- Retry Logic: If you receive a bad response, or if your timeout middleware kicks in, retry your request for a proper response.

- Custom Headers: Some sites require custom headers in order to give a proper response. With middleware, we can set all of our requests to use these specific headers.

- Authentication: Sometimes you need a cookie or an API key to access certain content. Middleware can handle these authentication objects for you.

- Rate Limiting: If you're getting a status 429 (too many requests), you can create a middleware to throttle your requests based on the status code. For example, if you get a 429, your middleware can pause the scraper for 5 or 10 seconds to adjust for this rate limiting.
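As an example of the authentication use case, here is a minimal sketch of a downloader middleware that attaches an API key header to every request. The API_KEY setting and the X-API-Key header name are hypothetical placeholders; use whatever the target site actually expects.

```python
class ApiKeyHeaderMiddleware:
    """Hypothetical downloader middleware that adds an API key header to requests.

    Assumptions: the key lives in a custom API_KEY setting and the site expects
    it in an X-API-Key header. Both names are placeholders.
    """

    def __init__(self, api_key, header_name="X-API-Key"):
        self.api_key = api_key
        self.header_name = header_name

    @classmethod
    def from_crawler(cls, crawler):
        # Read the key once, when the crawler starts.
        return cls(crawler.settings.get("API_KEY"))

    def process_request(self, request, spider):
        if self.api_key:
            # setdefault() keeps any value a spider already set on this request.
            request.headers.setdefault(self.header_name, self.api_key)
        return None  # continue through the remaining middlewares
```

Using setdefault() rather than plain assignment lets an individual request override the key by setting the header itself.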

Setting Up Your Scrapy Project for Middleware Development

Let's set up a Scrapy project and write some custom middleware.

We'll go through everything you need to do from installing Python to setting up the project.

Prerequisites

Python

To follow along, you need Python installed. You can check your installation by running the following command.

python --version

Your output should look something like this.

Python 3.10.12

Scrapy

Next, you can install Scrapy using pip.

pip install scrapy

Setting Up Our Project

Now, we'll create a new Scrapy project. The command below creates a new project folder with a basic Scrapy setup.

scrapy startproject my_custom_middleware

At this point, create a new middlewares folder inside the inner my_custom_middleware package (the folder that contains settings.py).

Your full file tree is going to look like this when we're finished.

```
.
├── my_custom_middleware
│   ├── __init__.py
│   ├── items.py
│   ├── middlewares
│   │   ├── __init__.py
│   │   ├── proxy_middleware.py
│   │   └── retry_middleware.py
│   ├── middlewares.py
│   ├── pipelines.py
│   ├── settings.py
│   └── spiders
│       ├── __init__.py
│       └── test_spider.py
└── scrapy.cfg
```

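As you probably noticed, this tree includes a few files you don't have yet. That's OK; we're going to create them now.

Inside your new middlewares folder, add a file called __init__.py. This marks the folder as a Python package, so Scrapy can import your middleware classes from it. Note that the new middlewares package shares its name with the middlewares.py file generated by startproject. Python imports the package in preference to the module, so anything defined in middlewares.py can no longer be imported as my_custom_middleware.middlewares. Move any code you need out of that file, or delete it if you aren't using it.

Alongside your __init__.py file, create two Python files: proxy_middleware.py and retry_middleware.py.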

You can test your Scrapy installation with the following command.

scrapy fetch https://lumtest.com/echo.json

Near the end of your console output, you should see something similar to the following. The location information will reflect your actual location.

{"country":"FR","asn":{"asnum":3215,"org_name":"Orange"},"geo":{"city":"Champvans","region":"BFC","region_name":"Bourgogne-Franche-Comté","postal_code":"39100","latitude":47.1071,"longitude":5.4313,"tz":"Europe/Paris","lum_city":"champvans","lum_region":"bfc"},"method":"GET","httpVersion":"1.1","url":"/echo.json","headers":{"Host":"lumtest.com","X-Real-IP":"90.100.196.78","X-Forwarded-For":"90.100.196.78","X-Forwarded-Proto":"https","X-Forwarded-Host":"lumtest.com","Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8","Accept-Language":"en","User-Agent":"Scrapy/2.11.0 (+https://scrapy.org)","Accept-Encoding":"gzip, deflate, br, zstd"}}

It's not necessary at the moment, but you can also generate a spider with the genspider command.

The command below creates a spider that hits the same API endpoint we just used to check our location information.

scrapy genspider test_spider https://lumtest.com/echo.json

If you open the spider up, you'll see some code like this. As you can see, our parse method uses the pass keyword.

This spider essentially does nothing at the moment.

```python
import scrapy


class TestSpiderSpider(scrapy.Spider):
    name = "test_spider"
    allowed_domains = ["lumtest.com"]
    start_urls = ["https://lumtest.com/echo.json"]

    def parse(self, response):
        pass
```

We're going to modify it to log our responses from the API.

```python
import scrapy


class TestSpiderSpider(scrapy.Spider):
    name = "test_spider"
    allowed_domains = ["lumtest.com"]
    start_urls = ["https://lumtest.com/echo.json"]

    def parse(self, response):
        # Log what we sent and what came back so we can inspect it in the console
        self.log("[DEBUG] Response received")
        self.log(f"[DEBUG] Request meta: {response.request.meta}")
        self.log(f"[DEBUG] Request headers: {response.request.headers}")
        self.log(f"[DEBUG] Response body: {response.text}")
```

You can run this spider with the following command from the project's root directory (the folder containing scrapy.cfg).

scrapy runspider my_custom_middleware/spiders/test_spider.py

If you search your output, you'll see something similar to the output you saw earlier from the fetch command.
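Rather than searching the raw output, you can pull out the fields you care about. Here is a small, hypothetical helper (summarize_echo is not part of Scrapy) that assumes the JSON layout shown in the fetch output above: a top-level "country", a "geo" object with a "city", and the client IP in headers["X-Real-IP"].

```python
import json


def summarize_echo(body):
    """Return (country, city, ip) from a lumtest.com/echo.json response body.

    Assumes the layout seen in the example output; missing keys become None.
    """
    data = json.loads(body)
    country = data.get("country")
    city = data.get("geo", {}).get("city")
    ip = data.get("headers", {}).get("X-Real-IP")
    return country, city, ip
```

Inside parse(), you could call `country, city, ip = summarize_echo(response.text)` and log a single line per response.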

We've now got a functioning Scrapy project.

The Basics of a Scrapy Downloader Middleware

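Downloader middlewares sit at the request/response level of our scraper. Scrapy calls process_request() on each request before it is sent to the downloader, process_response() on each response before it is passed back toward the spider, and process_exception() when a download handler or a process_request() method raises an exception.

In the code below, process_request() adds a custom header, process_response() logs the status code to the console, and process_exception() logs any exception.

We can build all sorts of functionality on top of these methods.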

```python
class BasicDownloaderMiddleware:
    def process_request(self, request, spider):
        # Modify the request by adding a custom header
        request.headers['X-Custom-Header'] = 'MyCustomValue'
        spider.logger.info(f"Modified request: {request.url}")
        return None  # None: keep processing this request normally

    def process_response(self, request, response, spider):
        # Log the response status and pass the response on
        spider.logger.info(f"Received response {response.status} for {request.url}")
        return response

    def process_exception(self, request, exception, spider):
        # Log the exception; returning None lets other middlewares handle it
        spider.logger.error(f"Exception {exception} occurred for {request.url}")
        return None
```

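The code above is for a hypothetical middleware and doesn't do much on its own. Pay attention to the return values, though: returning None from process_request() or process_exception() tells Scrapy to continue processing the request normally, while process_response() must return a Response or a Request object, or raise IgnoreRequest.

In the next few sections, we'll look at some real-world examples of downloader middleware.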

Planning A Scrapy Proxy Middleware

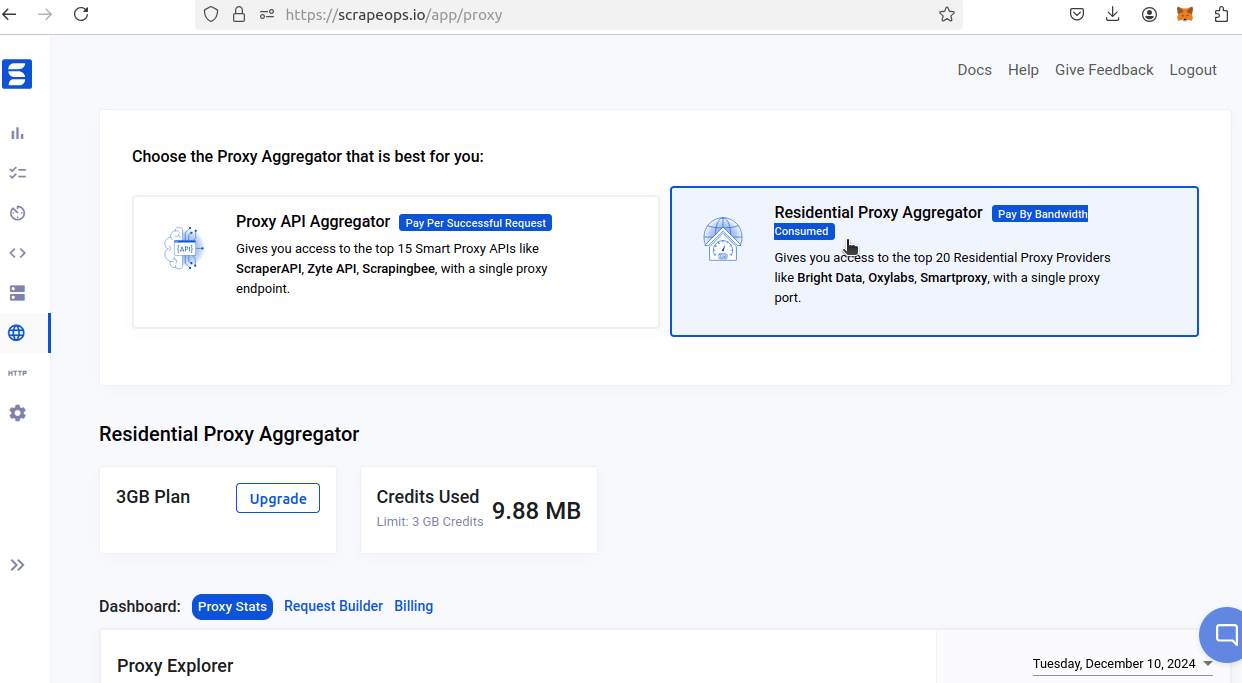

We're going to create a proxy middleware that uses the ScrapeOps Residential Proxy.

Our Residential Proxy Aggregator automatically rotates proxies and allows us to choose our target location. Rotation is handled for us; we just need to hook it up.

To start, you need a ScrapeOps account. You can create a new account here. Fill in the required information and you'll get an account with an API key and some free usage credits.

Next, you'll be taken to the dashboard. On the left-hand side, click on Proxy Aggregator.

You can click on Request Builder to reveal your API key. Save your API key somewhere safe. This is what we'll use to hook our scraper to our ScrapeOps account.

Using our process_request() method, we'll set all requests to go through our proxy connection.

To create the connection, we need our PROXY_URL, PROXY_USER and PROXY_PASS. In other words, we need a URL, a username and a password.

- PROXY_URL: http://residential-proxy.scrapeops.io:8181

- PROXY_USER: scrapeops

- PROXY_PASS: the ScrapeOps API key you saved earlier.

We'll use our username and password to create an authentication string: f"{PROXY_USER}:{PROXY_PASS}".

Once we've got this string, we need to encode it using base64 so we can send it using the Proxy-Authorization header.

When ScrapeOps receives this header, it can link our request to our ScrapeOps account.
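Here is the encoding step on its own, as a worked example. build_proxy_authorization and decode_proxy_authorization are hypothetical helper names. The test credentials are the "Aladdin" / "open sesame" pair used in RFC 7617, the HTTP Basic authentication specification. Keep in mind that base64 is an encoding, not encryption: anyone who can see the header can decode your API key.

```python
import base64


def build_proxy_authorization(user, password):
    """Build a Basic Proxy-Authorization header value."""
    credentials = f"{user}:{password}"              # plain-text "user:password"
    token = base64.b64encode(credentials.encode())  # str -> bytes -> base64 bytes
    return f"Basic {token.decode()}"                # base64 bytes -> str for the header


def decode_proxy_authorization(value):
    """Reverse the encoding to see exactly what the proxy receives."""
    scheme, token = value.split(" ", 1)
    return scheme, base64.b64decode(token).decode()
```

For example, build_proxy_authorization("Aladdin", "open sesame") produces "Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==", the same value RFC 7617 shows.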

Writing the Custom Proxy Middleware

Now, let's write our custom proxy middleware.

In the code below, we use the same parameters we mentioned in the section above (PROXY_URL, PROXY_USER, PROXY_PASS) and use them to authenticate our proxy connection.

Then we pass them into our Proxy-Authorization header once they've been encoded in base64.

```python
import base64

from scrapy.exceptions import NotConfigured


class ProxyMiddleware:
    def __init__(self, proxy_url):
        self.proxy_url = proxy_url
        print(f"[DEBUG] ProxyMiddleware initialized with proxy: {proxy_url}")

    @classmethod
    def from_crawler(cls, crawler):
        # Read the proxy URL from settings.py; disable the middleware if it's missing
        proxy_url = crawler.settings.get("PROXY_URL")
        if not proxy_url:
            raise NotConfigured("PROXY_URL is not set in settings.")
        print(f"[DEBUG] PROXY_URL from settings: {proxy_url}")
        return cls(proxy_url)

    def process_request(self, request, spider):
        print(f"[DEBUG] Processing request: {request.url}")
        # Route this request through the proxy
        request.meta['proxy'] = self.proxy_url

        proxy_user = spider.settings.get("PROXY_USER")
        proxy_pass = spider.settings.get("PROXY_PASS")
        if proxy_user and proxy_pass:
            # Build "user:pass", base64-encode it, and send it as Basic proxy auth
            proxy_auth = f"{proxy_user}:{proxy_pass}"
            encoded_auth = base64.b64encode(proxy_auth.encode()).decode()
            request.headers['Proxy-Authorization'] = f'Basic {encoded_auth}'
            print(f"[DEBUG] Using proxy authentication: {proxy_user}")

        print(f"[DEBUG] Proxy set to {self.proxy_url}")
```

Pay close attention to process_request():

- `request.meta['proxy'] = self.proxy_url` tells Scrapy to use our PROXY_URL.

- We use `spider.settings.get()` to retrieve our username and password from the settings file.

- Next, we create a plain-text authentication string: `proxy_auth = f"{proxy_user}:{proxy_pass}"`.

- We encode this into a base64 string with `encoded_auth = base64.b64encode(proxy_auth.encode()).decode()`. `encode()` turns the string into bytes for `b64encode()`, and `decode()` converts the resulting base64 bytes back into a string we can send over the web.

- We then add this to our Proxy-Authorization header: `request.headers['Proxy-Authorization'] = f'Basic {encoded_auth}'`.

- When making requests, Scrapy reads our PROXY_URL and sends our authentication string to the proxy server.
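One possible refinement, sketched under a few assumptions: the credentials don't change during a crawl, so the header can be built once in from_crawler() instead of on every request, and requests that already carry their own meta['proxy'] (including None, to opt out) are left alone. CachedAuthProxyMiddleware is a hypothetical name, and unlike the version above it passes requests through untouched, rather than raising NotConfigured, when PROXY_URL is missing.

```python
import base64


class CachedAuthProxyMiddleware:
    """Hypothetical ProxyMiddleware variant that builds the auth header only once."""

    def __init__(self, proxy_url, proxy_user=None, proxy_pass=None):
        self.proxy_url = proxy_url
        self.auth_header = None
        if proxy_user and proxy_pass:
            token = base64.b64encode(f"{proxy_user}:{proxy_pass}".encode()).decode()
            self.auth_header = f"Basic {token}"

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        return cls(
            settings.get("PROXY_URL"),
            settings.get("PROXY_USER"),
            settings.get("PROXY_PASS"),
        )

    def process_request(self, request, spider):
        # No proxy configured, or the request already chose its own proxy: leave it alone
        if not self.proxy_url or "proxy" in request.meta:
            return None
        request.meta["proxy"] = self.proxy_url
        if self.auth_header:
            request.headers["Proxy-Authorization"] = self.auth_header
        return None
```

Precomputing the header is a small saving per request; the larger benefit is that a spider can route a specific request elsewhere just by setting meta['proxy'] itself.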

Testing Your Middleware

Now, let's test this middleware. We're going to hit the same API endpoint we used earlier.

You can test with the spider we created earlier, or simply use Scrapy's built-in fetch command.

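Before we dive into testing, we need to configure and enable our middleware in settings.py.

Add the proxy settings, then uncomment the DOWNLOADER_MIDDLEWARES section and add the path to your middleware. The number next to each middleware sets its order: process_request() methods run from the lowest number to the highest, so our ProxyMiddleware (543) sets the proxy and the Proxy-Authorization header before Scrapy's built-in HttpProxyMiddleware (750) sees the request.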

```python
# Proxy connection details read by ProxyMiddleware
PROXY_URL = "http://residential-proxy.scrapeops.io:8181"
PROXY_USER = "scrapeops"
PROXY_PASS = "your-super-secret-api-key"

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    "my_custom_middleware.middlewares.proxy_middleware.ProxyMiddleware": 543,
    "scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750,
}
```
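To see how those numbers translate into call order, here is a small pure-Python simulation (simulate_middleware_order is a hypothetical helper, not a Scrapy function). It imitates the documented rule: process_request() runs from the lowest number to the highest, and process_response() runs in the reverse order. It is a simplification; the real DOWNLOADER_MIDDLEWARES setting is merged with Scrapy's built-in defaults (for example, UserAgentMiddleware at 500), and this function does not perform that merge.

```python
def simulate_middleware_order(middlewares):
    """Return (request_order, response_order) for the given middleware numbers.

    `middlewares` maps a middleware name to its number from DOWNLOADER_MIDDLEWARES;
    a value of None means "disabled", as in Scrapy's settings.
    """
    enabled = [(name, number) for name, number in middlewares.items() if number is not None]
    enabled.sort(key=lambda pair: pair[1])  # stable sort: lowest number first
    names = [name for name, _ in enabled]
    # process_request(): lowest number first (closest to the engine)
    request_order = names
    # process_response(): highest number first (closest to the downloader)
    response_order = names[::-1]
    return request_order, response_order


if __name__ == "__main__":
    requests, responses = simulate_middleware_order({
        "UserAgentMiddleware": 500,
        "ProxyMiddleware": 543,
        "HttpProxyMiddleware": 750,
    })
    print("process_request order:", requests)
    print("process_response order:", responses)
```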

Test Using Fetch

To test it using fetch, run this command.

scrapy fetch https://lumtest.com/echo.json

You should see something like this at the end of the console output.

{"country":"DZ","asn":{"asnum":36947,"org_name":"Telecom Algeria"},"geo":{"city":"Sidi Bel Abbes","region":"22","region_name":"Sidi Bel Abbès","postal_code":"22000","latitude":34.8934,"longitude":-0.6526,"tz":"Africa/Algiers","lum_city":"sidibelabbes","lum_region":"22"},"method":"GET","httpVersion":"1.1","url":"/echo.json","headers":{"Host":"lumtest.com","X-Real-IP":"41.100.13.111","X-Forwarded-For":"41.100.13.111","X-Forwarded-Proto":"https","X-Forwarded-Host":"lumtest.com","Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8","Accept-Language":"en","User-Agent":"Scrapy/2.11.0 (+https://scrapy.org)","Accept-Encoding":"gzip, deflate, br, zstd"}}

Test Using the Spider

Earlier, we used the runspider command and passed in the full path to the spider. This time, we're going to use the crawl command, which finds the spider by its name.

scrapy crawl test_spider

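You'll have to scroll through your console output, but you'll find the same response in there. Look for the portion following Response body. (The "Modifying request" and "Processing response" lines, and the custom RetryMiddleware in the list of enabled downloader middlewares, come from the retry middleware we build later in this guide, which was already enabled when this log was captured.)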

```
2024-12-21 18:20:51 [scrapy.utils.log] INFO: Scrapy 2.11.0 started (bot: my_custom_middleware)
2024-12-21 18:20:51 [scrapy.utils.log] INFO: Versions: lxml 4.9.3.0, libxml2 2.10.3, cssselect 1.2.0, parsel 1.8.1, w3lib 2.1.2, Twisted 22.10.0, Python 3.10.12 (main, Nov 6 2024, 20:22:13) [GCC 11.4.0], pyOpenSSL 23.2.0 (OpenSSL 3.1.3 19 Sep 2023), cryptography 41.0.4, Platform Linux-6.8.0-49-generic-x86_64-with-glibc2.35
2024-12-21 18:20:51 [scrapy.addons] INFO: Enabled addons:
[]
2024-12-21 18:20:51 [asyncio] DEBUG: Using selector: EpollSelector
2024-12-21 18:20:51 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.asyncioreactor.AsyncioSelectorReactor
2024-12-21 18:20:51 [scrapy.utils.log] DEBUG: Using asyncio event loop: asyncio.unix_events._UnixSelectorEventLoop
2024-12-21 18:20:51 [scrapy.extensions.telnet] INFO: Telnet Password: 47a4a69c4e540214
2024-12-21 18:20:51 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.corestats.CoreStats',
 'scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.memusage.MemoryUsage',
 'scrapy.extensions.logstats.LogStats']
2024-12-21 18:20:51 [scrapy.crawler] INFO: Overridden settings:
{'BOT_NAME': 'my_custom_middleware',
 'FEED_EXPORT_ENCODING': 'utf-8',
 'NEWSPIDER_MODULE': 'my_custom_middleware.spiders',
 'REQUEST_FINGERPRINTER_IMPLEMENTATION': '2.7',
 'ROBOTSTXT_OBEY': True,
 'SPIDER_MODULES': ['my_custom_middleware.spiders'],
 'TWISTED_REACTOR': 'twisted.internet.asyncioreactor.AsyncioSelectorReactor'}
[DEBUG] PROXY_URL from settings: http://residential-proxy.scrapeops.io:8181
[DEBUG] ProxyMiddleware initialized with proxy: http://residential-proxy.scrapeops.io:8181
2024-12-21 18:20:51 [scrapy.middleware] INFO: Enabled downloader middlewares:
['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
 'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
 'my_custom_middleware.middlewares.proxy_middleware.ProxyMiddleware',
 'my_custom_middleware.middlewares.retry_middleware.RetryMiddleware',
 'scrapy.downloadermiddlewares.retry.RetryMiddleware',
 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
 'scrapy.downloadermiddlewares.stats.DownloaderStats']
2024-12-21 18:20:51 [scrapy.middleware] INFO: Enabled spider middlewares:
['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
 'scrapy.spidermiddlewares.referer.RefererMiddleware',
 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
 'scrapy.spidermiddlewares.depth.DepthMiddleware']
2024-12-21 18:20:51 [scrapy.middleware] INFO: Enabled item pipelines:
[]
2024-12-21 18:20:51 [scrapy.core.engine] INFO: Spider opened
2024-12-21 18:20:51 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2024-12-21 18:20:51 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023
[DEBUG] Processing request: https://lumtest.com/robots.txt
[DEBUG] Using proxy authentication: scrapeops
[DEBUG] Proxy set to http://residential-proxy.scrapeops.io:8181
2024-12-21 18:20:51 [test_spider] INFO: Modifying request: https://lumtest.com/robots.txt
2024-12-21 18:20:53 [test_spider] INFO: Processing response: 404 for https://lumtest.com/robots.txt
2024-12-21 18:20:53 [scrapy.core.engine] DEBUG: Crawled (404) <GET https://lumtest.com/robots.txt> (referer: None)
[DEBUG] Processing request: https://lumtest.com/echo.json
[DEBUG] Using proxy authentication: scrapeops
[DEBUG] Proxy set to http://residential-proxy.scrapeops.io:8181
2024-12-21 18:20:53 [test_spider] INFO: Modifying request: https://lumtest.com/echo.json
2024-12-21 18:20:54 [test_spider] INFO: Processing response: 200 for https://lumtest.com/echo.json
2024-12-21 18:20:54 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://lumtest.com/echo.json> (referer: None)
2024-12-21 18:20:54 [test_spider] DEBUG: [DEBUG] Response received
2024-12-21 18:20:54 [test_spider] DEBUG: [DEBUG] Request meta: {'download_timeout': 180.0, 'proxy': 'http://residential-proxy.scrapeops.io:8181', '_auth_proxy': 'http://residential-proxy.scrapeops.io:8181', 'download_slot': 'lumtest.com', 'download_latency': 0.8209846019744873}
2024-12-21 18:20:54 [test_spider] DEBUG: [DEBUG] Request headers: {b'Accept': [b'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8'], b'Accept-Language': [b'en'], b'User-Agent': [b'Scrapy/2.11.0 (+https://scrapy.org)'], b'Proxy-Authorization': [b'Basic c2NyYXBlb3BzOmRmNmFiZjg0LTVjZTgtNDgyZC1iM2UwLTc2MDg1YjczNjliMA=='], b'Accept-Encoding': [b'gzip, deflate, br, zstd']}
2024-12-21 18:20:54 [test_spider] DEBUG: [DEBUG] Response body: {"country":"MA","asn":{"asnum":36925,"org_name":"ASMedi"},"geo":{"city":"Casablanca","region":"06","region_name":"Casablanca-Settat","postal_code":"","latitude":33.5792,"longitude":-7.6133,"tz":"Africa/Casablanca","lum_city":"casablanca","lum_region":"06"},"method":"GET","httpVersion":"1.1","url":"/echo.json","headers":{"Host":"lumtest.com","X-Real-IP":"45.219.45.52","X-Forwarded-For":"45.219.45.52","X-Forwarded-Proto":"https","X-Forwarded-Host":"lumtest.com","Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8","Accept-Language":"en","User-Agent":"Scrapy/2.11.0 (+https://scrapy.org)","Accept-Encoding":"gzip, deflate, br, zstd"}}
2024-12-21 18:20:54 [scrapy.core.engine] INFO: Closing spider (finished)
2024-12-21 18:20:54 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 649,
 'downloader/request_count': 2,
 'downloader/request_method_count/GET': 2,
 'downloader/response_bytes': 1220,
 'downloader/response_count': 2,
 'downloader/response_status_count/200': 1,
 'downloader/response_status_count/404': 1,
 'elapsed_time_seconds': 3.092111,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2024, 12, 21, 23, 20, 54, 336685, tzinfo=datetime.timezone.utc),
 'log_count/DEBUG': 9,
 'log_count/INFO': 14,
 'memusage/max': 64987136,
 'memusage/startup': 64987136,
 'response_received_count': 2,
 'robotstxt/request_count': 1,
 'robotstxt/response_count': 1,
 'robotstxt/response_status_count/404': 1,
 'scheduler/dequeued': 1,
 'scheduler/dequeued/memory': 1,
 'scheduler/enqueued': 1,
 'scheduler/enqueued/memory': 1,
 'start_time': datetime.datetime(2024, 12, 21, 23, 20, 51, 244574, tzinfo=datetime.timezone.utc)}
2024-12-21 18:20:54 [scrapy.core.engine] INFO: Spider closed (finished)
```

Here is the JSON pulled from the full log.

{"country":"MA","asn":{"asnum":36925,"org_name":"ASMedi"},"geo":{"city":"Casablanca","region":"06","region_name":"Casablanca-Settat","postal_code":"","latitude":33.5792,"longitude":-7.6133,"tz":"Africa/Casablanca","lum_city":"casablanca","lum_region":"06"},"method":"GET","httpVersion":"1.1","url":"/echo.json","headers":{"Host":"lumtest.com","X-Real-IP":"45.219.45.52","X-Forwarded-For":"45.219.45.52","X-Forwarded-Proto":"https","X-Forwarded-Host":"lumtest.com","Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8","Accept-Language":"en","User-Agent":"Scrapy/2.11.0 (+https://scrapy.org)","Accept-Encoding":"gzip, deflate, br, zstd"}}

Enhancing the Proxy Middleware

You can enhance your proxy middleware even further.

In the code below, we'll show you how to change your user-agent. Web servers read the User-Agent header to identify what kind of client sent the request.

Remember the user-agent the echo endpoint reported back earlier: "User-Agent":"Scrapy/2.11.0 (+https://scrapy.org)".

Here, Scrapy is announcing itself to the site. In production, this isn't a good idea, because many sites block or throttle clients that identify themselves as bots.

Let's use a recent Chrome user-agent for Windows instead.

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36

To use it, we'll set the User-Agent header in process_request().

```python
import base64

from scrapy.exceptions import NotConfigured

USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36"


class ProxyMiddleware:
    def __init__(self, proxy_url):
        self.proxy_url = proxy_url
        print(f"[DEBUG] ProxyMiddleware initialized with proxy: {proxy_url}")

    @classmethod
    def from_crawler(cls, crawler):
        # Read the proxy URL from settings.py; disable the middleware if it's missing
        proxy_url = crawler.settings.get("PROXY_URL")
        if not proxy_url:
            raise NotConfigured("PROXY_URL is not set in settings.")
        print(f"[DEBUG] PROXY_URL from settings: {proxy_url}")
        return cls(proxy_url)

    def process_request(self, request, spider):
        print(f"[DEBUG] Processing request: {request.url}")
        # Route this request through the proxy
        request.meta['proxy'] = self.proxy_url

        proxy_user = spider.settings.get("PROXY_USER")
        proxy_pass = spider.settings.get("PROXY_PASS")
        if proxy_user and proxy_pass:
            proxy_auth = f"{proxy_user}:{proxy_pass}"
            encoded_auth = base64.b64encode(proxy_auth.encode()).decode()
            request.headers['Proxy-Authorization'] = f'Basic {encoded_auth}'
            print(f"[DEBUG] Using proxy authentication: {proxy_user}")

        # Replace Scrapy's default User-Agent on every request, with or without proxy auth
        request.headers['User-Agent'] = USER_AGENT
        print(f"[DEBUG] Proxy set to {self.proxy_url}")
```

- First, we define our fake user-agent: `USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36"`.

- Later in process_request(), after handling proxy authentication, we set the User-Agent header as well: `request.headers['User-Agent'] = USER_AGENT`.
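If one fixed string is all you need, Scrapy's built-in USER_AGENT setting does the same job without custom code. To rotate through several user-agents instead, a minimal sketch could look like the following. The class name, the second (macOS) user-agent string, and the USER_AGENT_LIST setting are assumptions for illustration. Registered at a number above 500, where Scrapy's UserAgentMiddleware sits, its value would overwrite the default one.

```python
import itertools

# Example pool: the Chrome string from above plus a second, illustrative macOS variant.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36",
]


class RotatingUserAgentMiddleware:
    """Hypothetical middleware that cycles through a list of user-agent strings."""

    def __init__(self, user_agents):
        if not user_agents:
            raise ValueError("user_agents must contain at least one string")
        # itertools.cycle hands out the strings in order and starts over at the end
        self._user_agents = itertools.cycle(list(user_agents))

    @classmethod
    def from_crawler(cls, crawler):
        # Assumption: an optional custom USER_AGENT_LIST setting (a Python list),
        # falling back to the module-level pool.
        return cls(crawler.settings.get("USER_AGENT_LIST") or USER_AGENTS)

    def process_request(self, request, spider):
        request.headers["User-Agent"] = next(self._user_agents)
        return None
```

Cycling in order keeps the behavior predictable and easy to test; random.choice() would work just as well if you prefer an unpredictable sequence.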

Real-World Example: Building a Custom Retry Middleware

Now that we've built some proxy middleware, we're going to build some retry middleware.

In our from_crawler() method, we read a retry limit and a list of bad status codes from the settings.

If we receive a bad status code during process_response(), we'll retry the request with the _retry() method.

```python
from scrapy.exceptions import IgnoreRequest
from scrapy.utils.response import response_status_message


class RetryMiddleware:
    def __init__(self, retry_times, retry_http_codes):
        self.retry_times = retry_times
        # Codes given on the command line arrive as strings, so normalize them to ints
        self.retry_http_codes = {int(code) for code in retry_http_codes}

    @classmethod
    def from_crawler(cls, crawler):
        return cls(
            retry_times=crawler.settings.getint("RETRY_TIMES", 3),
            retry_http_codes=crawler.settings.getlist("RETRY_HTTP_CODES", [500, 502, 503, 504, 522, 524, 408]),
        )

    def process_request(self, request, spider):
        spider.logger.info(f"Modifying request: {request.url}")
        return None

    def process_response(self, request, response, spider):
        retries = request.meta.get("retry_times", 0)
        if retries > 0:
            spider.logger.info(f"Retry response received: {response.status} for {response.url} (Retry {retries})")
        else:
            spider.logger.info(f"Processing response: {response.status} for {response.url}")

        if response.status in self.retry_http_codes:
            spider.logger.warning(f"Retrying {response.url} due to HTTP {response.status}")
            return self._retry(request, response_status_message(response.status), spider)
        return response

    def process_exception(self, request, exception, spider):
        # Requests deliberately dropped (e.g. by robots.txt rules) should not be retried
        if isinstance(exception, IgnoreRequest):
            return None
        spider.logger.warning(f"Exception encountered: {exception} for {request.url}")
        return self._retry(request, str(exception), spider)

    def _retry(self, request, reason, spider):
        retries = request.meta.get("retry_times", 0) + 1
        if retries <= self.retry_times:
            spider.logger.info(f"Retrying {request.url} ({retries}/{self.retry_times}) due to: {reason}")
            retry_req = request.copy()
            retry_req.meta["retry_times"] = retries
            retry_req.dont_filter = True  # bypass the duplicate-request filter
            return retry_req
        spider.logger.error(f"Gave up retrying {request.url} after {self.retry_times} attempts")
        raise IgnoreRequest(f"Request failed after retries: {request.url}")
```

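- from_crawler() sets up our retry limit and our bad status codes: `retry_times=crawler.settings.getint("RETRY_TIMES", 3)` and `retry_http_codes=crawler.settings.getlist("RETRY_HTTP_CODES", [500, 502, 503, 504, 522, 524, 408])`. Scrapy's default settings already define RETRY_TIMES and RETRY_HTTP_CODES, so in a normal Scrapy project these fallback values are never used. Set both explicitly in settings.py if you want specific values.

- If the status code is in `self.retry_http_codes`, process_response() retries it: `return self._retry(request, response_status_message(response.status), spider)`.

- If we encounter an exception (error) while downloading, process_exception() retries the request the same way.

- _retry() increments retry_times in the request's meta and returns a copy of the request with `dont_filter = True`, so Scrapy's duplicate filter doesn't drop it. Once the limit is exceeded, it raises IgnoreRequest and gives up.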
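The log earlier in this guide lists both this custom RetryMiddleware and Scrapy's built-in scrapy.downloadermiddlewares.retry.RetryMiddleware as enabled, which means a failing request could be retried by both. A settings.py sketch that avoids this might look like the following. Assigning None to a built-in middleware disables it, 550 is the slot Scrapy's own RetryMiddleware normally occupies, and the retry values simply mirror the fallbacks in from_crawler(); adjust all of them to your needs.

```python
# settings.py (sketch): one retry middleware instead of two
RETRY_TIMES = 3
RETRY_HTTP_CODES = [500, 502, 503, 504, 522, 524, 408]

DOWNLOADER_MIDDLEWARES = {
    "my_custom_middleware.middlewares.proxy_middleware.ProxyMiddleware": 543,
    # Take over the slot the built-in RetryMiddleware normally uses
    "my_custom_middleware.middlewares.retry_middleware.RetryMiddleware": 550,
    # None disables Scrapy's built-in RetryMiddleware
    "scrapy.downloadermiddlewares.retry.RetryMiddleware": None,
    "scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750,
}
```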

Best Practices for Middleware Development

As you've learned, middleware is a really powerful way to add functionality to your Scrapy project.

Using middleware in production requires careful planning for scalability, performance, and maintainability.

When writing your own middleware, keep the following things in mind.

- Keep It Modular: Middlewares are meant to be small. Don't create one middleware to rule them all. Each middleware should have a single job and focus only on that job. Putting too much code in one middleware can cause conflicts and makes it difficult to maintain.

- Prioritize Reusability: If your middleware is generic enough, you can reuse it across multiple Scrapy projects. This can save you valuable time and resources during development.

- Document Your Code: Someone else should be able to look at your middleware and understand what it does. If your colleagues have no idea how your middleware works, they're far less likely to use it or help you work on it.

Conclusion

You've done it! You now know how to use Scrapy middlewares effectively. Use these new skills and bend Scrapy to your own personal will.

You know how to add proxies and retry logic to your scraper. These are two of the most important pieces of code when scraping professionally.

Go build something!

If you'd like to know more about Scrapy in general, take a look at their official docs here.

More Python Web Scraping Guides

We love Scrapy. We love it so much, we wrote a whole playbook on it!

Take a look here. By the time you've read the whole thing, you'll have a solid command of Scrapy.

If you'd like a taste of this playbook, check out the articles below.