Puppeteer Guide - How to Bypass DataDome with Puppeteer

Datadome is the most reliable and hard-to-bypass anti-bot toolset currently available. DataDome offers a bot protection solution that utilizes machine learning and behavioral analysis to identify and mitigate bot traffic in real-time.

In the following article, we will go through the details of why they are so powerful and how to bypass DataDome using Puppeteer. There isn't a one-size-fits-all solution for bypassing DataDome, but an exciting and promising approach is using the Puppeteer headless browser along with the Puppeteer-Stealth plugin.

- Understanding Datadome

- How DataDome Detects Web Scrapers and Prevents Automated Access

- How to Bypass DataDome with Puppeteer

- Case Study: Scraping Hermes With Puppeteer

- Conclusion

- More Puppeteer Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

Understanding DataDome

DataDome is a leading provider of AI-powered online fraud and bot management; they offer ultimate tools to prevent web automation and halt web crawlers.

Crawlers should strive to respect the robots.txt provided by respective sites, but besides that, the current architecture of the web doesn't provide enough tools to site owners to genuinely enforce the rules outlined in the robots.txt.

DataDome puts some of the power back in the hands of the site owner to properly enforce the rules within their site.

How DataDome Detects Web Scrapers and Prevents Automated Access

Bot management systems such as DataDome operate using a trust score system.

A bot trust score measures the probability of a request being automated and coming from a non-whitelisted source.

Such trust score is computed for each site visitor, and based on it three actions can be taken:

- Allow users to navigate while still actively monitoring.

- Prompt the user with a captcha to determine if the user is a bot.

- Reject request, commonly with a 403 error response.

Datadome trust score scale is not publicly available, but as a reference, we can use the Cloudflare Trust Score scale

| Category | Range |

|---|---|

| Not computed | 0 |

| Automated | 1 |

| Likely automated | Bot scores of 2 through 29. |

| Likely human | Bot scores of 30 through 99. |

| Verified bot | Non-malicious automated traffic (used to power search engines and other applications). |

The trust score is compiled by aggregating multiple signals used to detect bots. The signals in use, and the weight of each on the final trust score, are trade secrets.

However, we can list a few possibilities and divide them into two categories: Frontend signals and Backend signals.

Backend Signals

Backend signals encompass data and indicators that are observed on the server side of a web application or website. Unlike frontend signals, backend signals are processed on the server, and they help identify potential malicious activities or anomalies in the requests received.

-

IP validation:

- Check the IP against different ban lists to filter out server-grade IPs, assess the quality of the ISP originating the IP, and verify past bans associated with the IP address.

-

TLS fingerprinting:

-

HTTPS uses TLS (Transport Layer Security), a cryptographic protocol that encrypts web traffic. In the TLS protocol, one of the initial steps is a handshake between the backend and the client.

-

TLS fingerprinting involves identifying a client based on the fields in its Client Hello message during a TLS handshake.

-

This fingerprint enables Datadome and similar services to distinguish between users employing a genuine browser and those using an HTTP client such as Postman or Node.js native HTTP client.

-

-

HTTP Header Analysis:

-

Analyzes HTTP headers for anomalies in user agents, referral headers, etc.

-

The request header user agent is typically compared with the fingerprinted client's expected User Agent.

-

-

Session Tracking:

-

Cookies containing session information are validated against the original IP and user agent, and fingerprint data is scrutinized for inconsistencies.

-

Signal information from the front end is shared with the backend as part of the encrypted cookie.

-

Front end signals:

Frontend signals refer to data and indicators that are observed directly on the user's device or browser. These signals are collected and analyzed by the client-side (in the user's browser) to assess the legitimacy of user interactions and to identify potential automated bot behavior.

-

Behavioral Analysis:

- Services such as Datadome monitor browser usage, store the information within the sessions, and compare such information vs machine learning models that outline real human behavior, many signals part of the process

- Randomness within the Mouse Jitter when moving the mouse.

- User typing behavior

- User scroll behavior

- Services such as Datadome monitor browser usage, store the information within the sessions, and compare such information vs machine learning models that outline real human behavior, many signals part of the process

-

Device check:

-

Is a new service offered by Datadome that works as an invisible captcha, triggering in browser calculations in order to fingerprint devices:

-

This new product was released on December 12, 2023, and promises to be able to detect even the most advanced bots! Its current availability is limited!

-

-

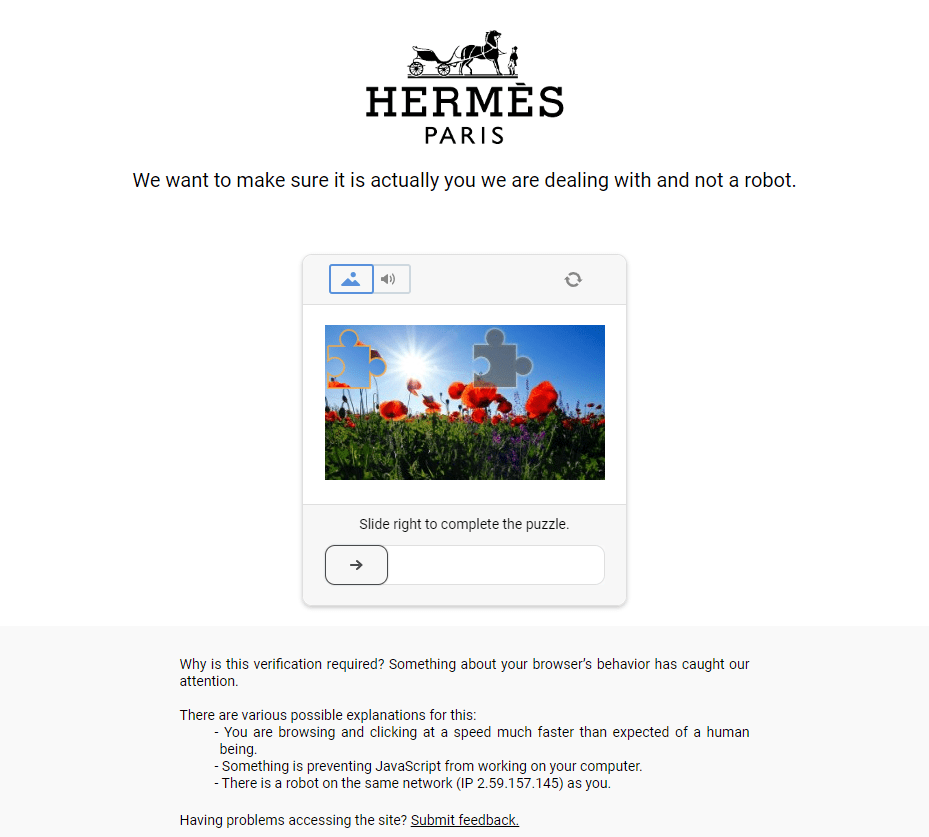

Traditional Captcha:

- Once the trust score is low enough, the user gets prompted with traditional captcha in order to validate if its a real user.

How to Bypass DataDome with Puppeteer

DataDome is an extremely sophisticated anti-bot system, making it challenging to bypass with Puppeteer. You will need to counter all the signals outlined above.

You can use the tactics below to enhance the robustness of your web scraper:

Use Residential & Mobile IPs

Use high-quality residential or mobile IP addresses; any time you send a new request with no cookie, and Datadome does not have a stored fingerprint of your browser, you are a new different user on their system.

Rotate Real Browser Headers

Use HTTP2 and real browser headers such as User Agent, and ensure order of real web browser

Use Headless Browsers

We need to use automated browsers like Puppeteer, Selenium, or Playwright, which have been fortified so they don't leak fingerprints.

To have any chance of doing so, you need to use Puppeteer-extra-plugin-stealth in combination with residential/mobile proxies and rotating IPs. However, it isn't guaranteed, as DataDome can often still detect you based on the security settings set on the website.

In the following sections, we will show you how to approach setting up Puppeteer-extra-plugin-stealth to try and bypass DataDome. We will also demonstrate how to use Smart Proxies like ScrapeOps Proxy Aggregator to bypass DataDome.

Case Study: Scraping Hermes With Puppeteer

In this case study, we will attempt to scrape Hermes, a high-end French luxury brand that utilizes DataDome to detect bot traffic.

This article is for educational purposes only. Readers are responsible for complying with legal and ethical guidelines. I highly encourage readers to go through this Ethical Scraping guide.

Method 1: Bypass DataDome Using Puppeteer-extra-plugin-stealth

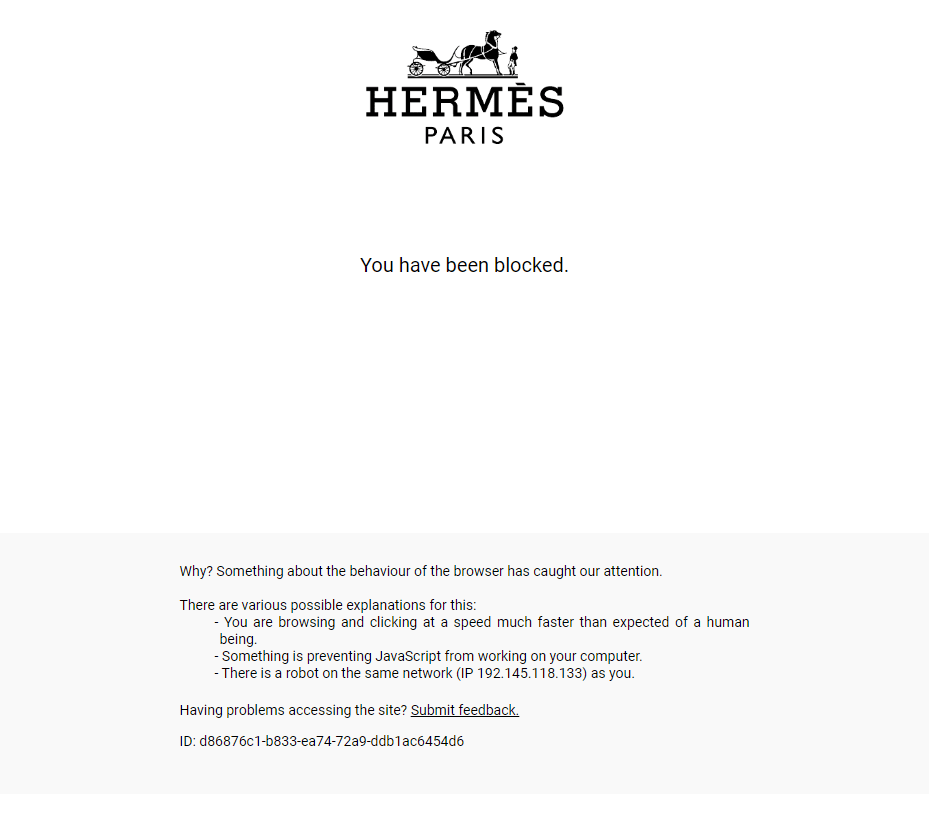

If you tried accessing the site using Puppeteer directly, you would be immediately banned.

Vanilla Puppeteer leaks a lot of tiny details, that platforms such as Datadome can pick up on, in order to determine if you are a real regular browser, or not.

We use the plugin, Puppeteer Stealth in order to handle all those leaks

| Puppeteer Leak | Puppeteer Stealth Fix |

|---|---|

| User agent headers reflect different than chrome | Uses same user agent as chrome |

| Lacks propietary media codecs | Spoofs the object media.codecs |

| TLS fingerprint is different than chrome | Matches TLS fingerprint to chrome |

You can visit the official website of the plugin or our Puppeteer Stealth Guide to get more details about how it enhances Puppeteer.

Here is how to use Node.js and Puppeteer Stealth in practice to access a site:

First, we need to install our dependencies:

npm install puppeteer puppeteer-extra-plugin-stealth puppeteer-extra

Then let's create the following index.js file:

const puppeteer = require('puppeteer-extra');

// Add stealth plugin and use defaults (all evasion techniques)

const StealthPlugin = require('puppeteer-extra-plugin-stealth');

puppeteer.use(StealthPlugin());

async function main() {

// { headless : 'new' } uses the new headless chromium

// Which improves your chances of not being detected

// https://developer.chrome.com/docs/chromium/new-headless

const browser = await puppeteer.launch({ headless: 'new' });

console.log('Running tests..');

const page = await browser.newPage();

await page.goto('https://hermes.com');

await page.waitForTimeout(5000);

await page.screenshot({ path: 'testresult-success-hermes.png', fullPage: true });

await browser.close();

console.log(`All done, check the screenshot. ✨`);

}

main();

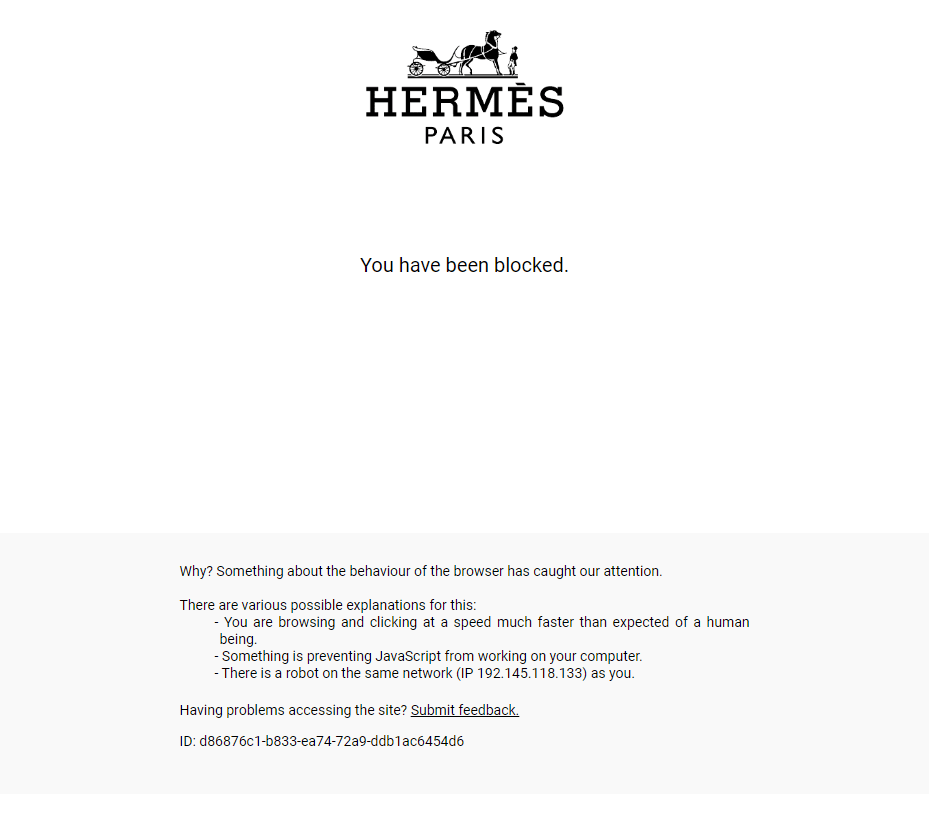

I expect you to be able to get a successful result on the first try but, here is what subsequent tries will probably look like:

Try 2:

Try 3:

This seems to be a consequence of the newly released Datadome feature, device check, and the fact that you are using the same IP on each subsequent request, which plummets your trust score.

Is there anything you could do?

Yes!

Method 2: Bypass DataDome Using ScrapeOps Proxy Aggregator and Puppeteer

Using a proxy aggregator, such as ScrapeOps Proxy Aggregator, can offer several advantages in web scraping and data extraction activities. Here are some reasons why you might choose to use a proxy aggregator:

- provide a pool of IP addresses from different locations.

- offer IP addresses from different regions,

- add a layer of anonymity to your web scraping activities.

- provide a scalable solution for handling large-scale web scraping tasks.

Why is it better able to bypass DataDome than Puppeteer?

ScrapeOps Proxy Aggregator allows you always to use the best proxy for the job while rotating the IP on each request.

Here is an example implementation on how to build a Puppeteer crawler that leverages ScrapeOps Proxy Aggregator:

const puppeteer = require('puppeteer-extra');

const StealthPlugin = require('puppeteer-extra-plugin-stealth');

puppeteer.use(StealthPlugin());

// Residential Proxy Credentials (Replace with your actual details)

const PROXY_HOST = 'http://residential-proxy.scrapeops.io'; // e.g., proxy.provider.com

const PROXY_PORT = 8181; // e.g., 8080

const PROXY_USERNAME = 'scrapeops';

const PROXY_PASSWORD = 'YOUR_API_KEY';

// Async Main Function

async function main() {

// Launch Puppeteer with Proxy

const browser = await puppeteer.launch({

headless: true,

args: [

`--proxy-server=${PROXY_HOST}:${PROXY_PORT}`

]

});

// Open a new page

const page = await browser.newPage();

// Authenticate proxy if needed

await page.authenticate({

username: PROXY_USERNAME,

password: PROXY_PASSWORD

});

// Navigate to the target URL

await page.goto('https://hermes.com', { waitUntil: 'load', timeout: 60000 });

// Wait for 5 seconds

await new Promise(resolve => setTimeout(resolve, 5000));

// Screenshot the resulting page

await page.screenshot({ path: 'testresult-success-hermes.png', fullPage: true });

// Close the browser

await browser.close();

console.log(`All done, check the screenshot. ✨`);

}

main().catch(console.error);

In this code, we do the following:

- Import

puppeteer-extraand thepuppeteer-extra-plugin-stealthpackages withrequire(). - Create a function,

getScrapeOpsUrl()which takes in a normal url and converts it to a proxied url using basic string formatting. - Open a new browser instance with

await puppeteer.launch() - Open a new page with

await browser.newPage() - We pass our url into

getScrapeOpsUrl(), and then we pass the result of that intopage.goto()... This is takes us to the proxied version of the page. - Wait 5 seconds for the page to timeout

- Take a screenshot with

page.screenshot() - Close the browser, display a message in the console, and exit the program.

Take a look at the screenshot below. We have access to the site. The CSS is broken, but this doesn't matter, we're looking for the actual site data, which is all completely in-tact. It's a long scroll, but this is a good thing.

Conclusion

DataDome's comprehensive features, including robust browser fingerprinting and real-time behavior analysis, pose formidable challenges. However, armed with strategic bypass techniques—leveraging residential or mobile IPs, rotating browser headers, and harnessing the power of Puppeteer-extra-plugin-stealth—users can fortify their scraping endeavors.

If you'd like to learn more about Puppeteer check the links below:

More Puppeteer Web Scraping Guides

If you would like to learn more about Web Scraping with Puppeteer, then be sure to check out The Puppeteer Web Scraping Playbook.

Or check out one of our more in-depth guides that you might find interesting: :