Playwright Guide: Capturing Background XHR Requests

When you use a website with dynamic content, the page is usually sending requests to a server in the background and updating itself when the server responds. These requests use HTTP (Hypertext Transfer Protocol). While there are countless ways of making HTTP requests, a web app typically makes them through XHR (XMLHttpRequest) or through the newer Fetch API.

In Playwright, every network request a page makes can be observed with event listeners such as `page.on("request")` or intercepted with routes via `page.route()`. Once we can see these requests, we can do all sorts of interesting things with them. Whether you're looking to block requests to a server or figure out which endpoints a web app queries, capturing HTTP requests is a super useful skill to have in your toolbox.

In this guide, we delve into the art of capturing Background XMLHttpRequest (XHR) requests with Playwright.

- TL;DR - How to Capture Background XHR Requests

- Understanding XHR Requests

- Common Reasons to Capture XHR Requests

- Capturing XHR Requests With Playwright

- Analyzing the Captured Data

- Advanced Techniques

- Common Challenges and Solutions

- Best Practices For XHR Request Capture

- Conclusion

- More Web Scraping Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

TL;DR - How to Capture Background XHR Requests

Here's a quick breakdown of how to capture background XHR requests:

```javascript
// import playwright
const playwright = require("playwright");

// create an async function to scrape the data
async function scrapeData() {
  // launch Chromium
  const browser = await playwright.chromium.launch({
    headless: false
  });

  // create a constant "page" instance
  const page = await browser.newPage();

  // any time we get a request
  page.on("request", request => {
    // if the request is "xhr" or "fetch", log the url to the console
    if (request.resourceType() === "xhr" || request.resourceType() === "fetch") {
      console.log("Captured request", request.url());
    }
  });

  // now that we've set our rules, navigate to the site
  await page.goto("https://www.espn.com/");
  await page.waitForTimeout(5000);

  // close the browser
  await browser.close();
}

// run the scrapeData function
scrapeData();
```

In summary, the script uses Playwright to automate a Chromium browser, set up an event listener to capture specific network requests, navigate to a website, and finally, close the browser.

This is a foundational script for monitoring and capturing XHR or Fetch requests during web scraping tasks.

Understanding XHR Requests

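Perhaps the best-known HTTP client is cURL, a simple, yet low-level HTTP client written in C. Anyone familiar with Bash or another shell has probably used cURL before. In the browser, XMLHttpRequest and fetch play the same role: they are the APIs a page's JavaScript uses to send HTTP requests in the background, without reloading the page.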

While there are many things HTTP clients can do, there are four main operations that you need to remember:

- GET: Gets information from a server. This is used when you navigate to a webpage. Your browser gets the page, reads the HTML, and then renders it on your screen.

- POST: Sends information to a server. This is used when you wish to add new content to a website, such as submitting a form or publishing a post.

- PUT: Tells the server that you wish to modify existing content, such as editing a social media post or a blog.

- DELETE: Tells the server that you wish to delete existing content. When you delete a post from social media, your app (the client) tells the server to find that specific item and remove it from the database.
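To connect these four methods to what Playwright later reports through `request.method()`, here is a minimal sketch of how a page's own JavaScript might issue each one with the Fetch API. The `https://example.com/api` base URL, the `/posts` resource, and the `buildExampleRequests()` helper are hypothetical placeholders. The script only constructs `Request` objects (available globally in browsers and in Node.js 18+) and prints them, so nothing is actually sent.

```javascript
// Hypothetical helper: build one fetch Request per HTTP method.
// The base URL and the /posts resource are placeholders, not a real API.
function buildExampleRequests(baseUrl) {
  const jsonHeaders = { "Content-Type": "application/json" };

  return [
    // GET: read an existing post
    new Request(`${baseUrl}/posts/42`),
    // POST: create a new post
    new Request(`${baseUrl}/posts`, {
      method: "POST",
      headers: jsonHeaders,
      body: JSON.stringify({ title: "Hello" }),
    }),
    // PUT: replace (edit) an existing post
    new Request(`${baseUrl}/posts/42`, {
      method: "PUT",
      headers: jsonHeaders,
      body: JSON.stringify({ title: "Hello, edited" }),
    }),
    // DELETE: remove an existing post
    new Request(`${baseUrl}/posts/42`, { method: "DELETE" }),
  ];
}

// Print each request instead of sending it; a real page would call fetch(request).
for (const request of buildExampleRequests("https://example.com/api")) {
  console.log(request.method, request.url);
}
```

Inside a real page, `fetch(request)` would send each one, and a Playwright `request` listener would report a resource type of `fetch` and a `request.method()` of `GET`, `POST`, `PUT`, or `DELETE` accordingly.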

Common Reasons to Capture XHR Requests

We might capture XHR requests for any number of reasons: curiosity, discovering API endpoints, finding raw data (and possibly eliminating the need for scraping entirely), or simply improving the performance of a scraper that doesn't need to wait for that information to come back.

Capturing XHR and Fetch requests is useful in all of the following areas:

- API endpoint discovery:
  - By capturing XMLHttpRequest (XHR) requests, you can inspect the network traffic and discover the various API endpoints that the application interacts with.
  - This is crucial for understanding how data flows through the application and which endpoints its architecture relies on.

- Capturing raw data (and possibly eliminating your need to scrape entirely):
  - Instead of extracting data from the HTML structure of a webpage, capturing XHR requests lets you see the raw data (often JSON) that the server sends back, which you can sometimes request directly.
  - This can be more efficient, especially when dealing with dynamic and frequently updated content, potentially eliminating the need for conventional web scraping methods.

- Blocking requests with `abort()`:
  - Calling Playwright's `route.abort()` on an intercepted request lets you simulate network failures or prevent specific requests from completing.
  - This is useful for testing how the application responds to incomplete or blocked requests and assessing its resilience.

- Penetration testing:
  - Penetration testers often capture XHR requests to analyze the communication between the client and server.
  - By scrutinizing the content and parameters of these requests, security professionals can identify potential vulnerabilities, such as data exposure or inadequate input validation, and ensure the application's security.
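For endpoint discovery, a raw list of captured URLs is noisy, because the same endpoint is often hit many times with different query strings. The sketch below, built around a hypothetical `summarizeEndpoints()` helper, groups captured requests by method, origin, and path and counts how often each endpoint was called. It assumes you've already collected `{ method, url }` pairs, for example by pushing `{ method: request.method(), url: request.url() }` inside a Playwright `request` listener; the sample URLs are made up.

```javascript
// Hypothetical helper: collapse captured requests into unique endpoints.
// The query string and fragment are dropped, so /api/scores?game=1 and
// /api/scores?game=2 are counted as the same endpoint.
function summarizeEndpoints(captured) {
  const counts = new Map();

  for (const { method, url } of captured) {
    const { origin, pathname } = new URL(url);
    const key = `${method} ${origin}${pathname}`;
    counts.set(key, (counts.get(key) || 0) + 1);
  }

  // Most frequently called endpoints first
  return [...counts.entries()].sort((a, b) => b[1] - a[1]);
}

// Made-up sample data standing in for captured requests
const sample = [
  { method: "GET", url: "https://example.com/api/scores?game=1" },
  { method: "GET", url: "https://example.com/api/scores?game=2" },
  { method: "POST", url: "https://example.com/api/track" },
];

for (const [endpoint, count] of summarizeEndpoints(sample)) {
  console.log(count, endpoint);
}
```

Keeping the method in the key matters: a `GET` and a `POST` to the same path often do very different things.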

Capturing XHR Requests with Playwright

Let's create a script that goes to a site and listens for outgoing XHR and Fetch requests. We'll attach a listener to the page's `request` event with `page.on()` and check each request's type with `request.resourceType()`.

If the request is either `xhr` or `fetch`, we'll print its URL to the console. Any other type is simply ignored: a `request` listener only observes traffic, so every request still goes through.

Take a look at the code example below.

```javascript
// import playwright
const playwright = require("playwright");

// create an async function to scrape the data
async function scrapeData() {
  // launch Chromium
  const browser = await playwright.chromium.launch({
    headless: false
  });

  // create a constant "page" instance
  const page = await browser.newPage();

  // any time we get a request
  page.on("request", request => {
    // if the request is "xhr" or "fetch", log the url to the console
    if (request.resourceType() === "xhr" || request.resourceType() === "fetch") {
      console.log("Captured request", request.url());
    }
  });

  // now that we've set our rules, navigate to the site
  await page.goto("https://www.espn.com/");
  await page.waitForTimeout(5000);

  // close the browser
  await browser.close();
}

// run the scrapeData function
scrapeData();
```

In the code above, we do the following:

- Import Playwright with `require("playwright")`
- Create a browser instance with `playwright.chromium.launch()`
- Create a page instance with `browser.newPage()`
- Set rules for page requests with `page.on()`
- If `request.resourceType()` returns either `xhr` or `fetch`, we log the URL to the console
- Navigate to the URL with `page.goto()`
- Use `page.waitForTimeout()` to let the page run for 5 seconds and capture the requests

If we run the script, we get a console output like the image below.

While the image above doesn't contain every request captured during the session (the full list is very, very long), it gives you a decent idea of what the page is requesting.

Each time our page makes a request from the frontend, the URL of that request is logged to the console.
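Logging URLs tells us where the page is sending requests. To capture the raw data coming back, we can listen for the page's `response` event instead. Below is a minimal sketch, assuming the same `page` object as in the script above. `collectJsonResponses()` is a hypothetical helper name; the Playwright calls it uses (`page.on("response")`, `response.request()`, `response.headers()`, `response.url()`, `response.status()`, and `response.json()`) belong to Playwright's `Page` and `Response` APIs. Only XHR/Fetch responses whose `Content-Type` mentions JSON are kept.

```javascript
// Hypothetical helper: collect parsed JSON bodies from background requests.
// `page` is assumed to be a Playwright Page (or anything with the same on() method).
function collectJsonResponses(page) {
  const captured = [];

  page.on("response", async (response) => {
    // Only look at XHR and Fetch traffic, like the request listener above
    const type = response.request().resourceType();
    if (type !== "xhr" && type !== "fetch") return;

    // Playwright lower-cases header names in response.headers();
    // "json" matches application/json and variants such as application/ld+json
    const contentType = response.headers()["content-type"] || "";
    if (!contentType.includes("json")) return;

    try {
      const body = await response.json();
      captured.push({ url: response.url(), status: response.status(), body });
    } catch (error) {
      // The body may be unavailable (e.g. the browser already closed) or not valid JSON
      console.warn("Could not read JSON from", response.url());
    }
  });

  // This array is filled in asynchronously as responses arrive
  return captured;
}
```

Call it before `page.goto()`, for example `const data = collectJsonResponses(page);`, and inspect `data` once `page.waitForTimeout(5000)` has resolved. Any bodies still downloading when the browser closes will simply be skipped.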

Analyzing the Captured Data

With a bit of fine tuning, we can filter this information to get a detailed breakdown of all these requests.

Let's create some simple counters to see which types of requests (GET, POST, PUT, DELETE) are being made.

```javascript
// import playwright
const playwright = require("playwright");

// create an async function to scrape the data
async function scrapeData() {
  // launch Chromium
  const browser = await playwright.chromium.launch({
    headless: false
  });

  // create a constant "page" instance
  const page = await browser.newPage();

  // create counters for requests
  let gets = 0;
  let posts = 0;
  let puts = 0;
  let deletes = 0;

  // any time we get a request
  page.on("request", request => {
    // if the request is "xhr" or "fetch", handle accordingly
    if (request.resourceType() === "xhr" || request.resourceType() === "fetch") {
      const method = request.method();

      if (method === "GET") {
        // increment gets
        gets++;
      } else if (method === "POST") {
        // increment posts
        posts++;
      } else if (method === "PUT") {
        // increment puts
        puts++;
      } else if (method === "DELETE") {
        // increment deletes
        deletes++;
      }
    }
  });

  // now that we've set our rules, navigate to the site
  await page.goto("https://www.espn.com/");
  await page.waitForTimeout(5000);

  // close the browser
  await browser.close();

  // log the counts for each request type
  console.log("GET:", gets);
  console.log("POST:", posts);
  console.log("PUT:", puts);
  console.log("DELETE:", deletes);
}

// run the scrapeData function
scrapeData();
```

The code above builds off the previous example by:

- Creating four different counters: `gets`, `posts`, `puts`, and `deletes`
- When a request happens, instead of logging it, saving its method to a variable with `request.method()`
- If the method is one of our methods (GET, POST, PUT, DELETE), incrementing the counter for that method
- After the browser has closed, logging each counter to the console to see how many of each request we got

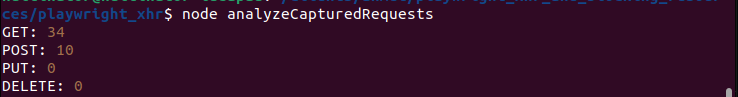

The image below contains the results of a test run on our script. The site is changing all the time, so your counts may differ from the ones below, but this image gives a decent breakdown of what's going on.

As you can see in the image above, we get 34 GET requests, 10 POST requests, 0 PUT and 0 DELETE. Out of the 44 total requests, 34 (about 77%) of them are GET requests and the rest are POST.

As a general rule, most of the background requests a page makes are GET requests, meaning that most of the time, the page is getting information from the server.

A small portion of our requests are POST, in which we're posting information to the server.
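The if/else chain in our counting script only tracks four methods, so anything else (such as `PATCH` or `OPTIONS`) is silently dropped. A more compact sketch keys the counts by whatever `request.method()` returns and also works out each method's share. `createMethodTally()` is a hypothetical helper; it assumes Playwright `Request` objects, or anything exposing `resourceType()` and `method()`.

```javascript
// Hypothetical helper: count XHR/Fetch requests by HTTP method.
// Each method gets its own key, so PATCH, OPTIONS, etc. are counted too.
function createMethodTally() {
  const counts = {};

  function onRequest(request) {
    const type = request.resourceType();
    if (type !== "xhr" && type !== "fetch") return;

    const method = request.method();
    counts[method] = (counts[method] || 0) + 1;
  }

  // Share of all counted requests that used the given method, as a percentage
  function percentOf(method) {
    const total = Object.values(counts).reduce((sum, n) => sum + n, 0);
    return total === 0 ? 0 : (100 * (counts[method] || 0)) / total;
  }

  return { counts, onRequest, percentOf };
}
```

Register it with `page.on("request", tally.onRequest)` before `page.goto()`, then print `tally.counts` (for example with `console.table(tally.counts)`) after the run. With the ESPN numbers above (34 GET, 10 POST), `tally.percentOf("GET")` comes out at roughly 77%.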

If we wanted to reconstruct API endpoints, we could analyze even more information about these requests to reuse them later. If we want to know what information we're sharing with the server, we could save the data of the POST requests.

If we wanted to share less of our information with the server, we could even block these POST requests with `route.abort()`.
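To see exactly what the page is sharing, we can record the body of each background POST. Playwright's `request.postData()` returns the request body as a string, or `null` when there isn't one. The `createPostLogger()` helper below is a hypothetical sketch; bodies may be JSON, form-encoded, or something else entirely, so they're stored as raw strings.

```javascript
// Hypothetical helper: record the URL and raw body of every XHR/Fetch POST.
function createPostLogger() {
  const posts = [];

  function onRequest(request) {
    const type = request.resourceType();
    if ((type === "xhr" || type === "fetch") && request.method() === "POST") {
      // postData() is a string, or null when the POST has no body
      posts.push({ url: request.url(), body: request.postData() });
    }
  }

  return { posts, onRequest };
}
```

Attach it with `page.on("request", logger.onRequest)`; after the run, `logger.posts` lists what was sent and where, which makes it easier to decide which POSTs are worth blocking.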

Advanced Techniques

The code below queries a different website, but builds on the previous example. This time, instead of observing requests with `page.on()`, we intercept them with the async method `page.route()`. Every request whose URL matches the pattern we pass in is paused and handed to our handler along with its route.

Once we have the route for a request, we can block it with `route.abort()` or allow it through with `route.continue()`.

```javascript
// import playwright
const playwright = require("playwright");

// create an async function to scrape the data
async function scrapeData() {
  // launch Chromium
  const browser = await playwright.chromium.launch({
    headless: false
  });

  // create a constant "page" instance
  const page = await browser.newPage();

  // create counters for requests
  let gets = 0;
  let posts = 0;
  let puts = 0;
  let deletes = 0;

  // intercept every request; each one must end in exactly one
  // route.abort() or route.continue(), or it is left pending
  await page.route("**/*", async (route, request) => {
    const type = request.resourceType();

    // if the request is "xhr" or "fetch", handle accordingly
    if (type === "xhr" || type === "fetch") {
      const method = request.method();

      if (method === "POST") {
        // block POST requests and stop handling this one
        posts++;
        await route.abort();
        return;
      }

      if (method === "GET") {
        gets++;
      } else if (method === "PUT") {
        puts++;
      } else if (method === "DELETE") {
        deletes++;
      }
    }

    // everything that wasn't blocked is allowed through
    await route.continue();
  });

  // now that we've set our rules, navigate to the site
  await page.goto("https://www.amazon.com/");
  await page.waitForTimeout(5000);

  // close the browser
  await browser.close();

  // log the counts for each request type
  console.log("GET:", gets);
  console.log("Blocked POST:", posts);
  console.log("PUT:", puts);
  console.log("DELETE:", deletes);
}

// run the scrapeData function
scrapeData();
```

Key differences in the example above:

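- `page.route("**/*", (route, request) => { ... })` intercepts every request whose URL matches the glob `**/*` (in other words, all of them). It takes a handler function that Playwright calls with two arguments, the `Route` and the `Request`, and the request waits until the handler resolves the route
- If `request.resourceType()` returns `xhr` or `fetch` and `request.method()` returns `POST`, we call `route.abort()` to block the request
- This time we're looking at Amazon instead of ESPN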

We can see the results of this script below.

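As you can see above, we successfully blocked 10 POST requests. Amazon makes far fewer requests in total, but 10 of its 22 (about 45%) are POST, compared with 10 of 44 (about 23%) on ESPN. This suggests that Amazon's homepage sends proportionally more data back to its servers than ESPN's, although the counts alone don't tell us what that data is.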

Common Challenges and Solutions

When capturing XHR or Fetch requests with Playwright, the most difficult issues that you may run into are CORS (Cross Origin Resource Sharing) errors and typical issues that occur in async programming.

When dealing with CORS errors, your best bet is to use a proxy server. You can sign up for the ScrapeOps Proxy to mitigate this and many other issues developers run into when scraping the web.

The other issues that tend to occur are async issues. Always check your code and make sure you're using the `await` keyword where it's needed. Without it, you won't get the correct return values, or even the correct data types for that matter. Instead, you'll receive a Promise object. The `await` keyword waits until the Promise settles and then returns its fulfilled value (or throws if the Promise is rejected).
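Here's a minimal illustration of the difference, using a stand-in async function rather than a real Playwright call. `getTitle()` is hypothetical, but Playwright methods such as `page.title()` behave the same way: they return a Promise that you need to `await`.

```javascript
// Stand-in for an async Playwright call such as page.title()
async function getTitle() {
  return "Example Domain";
}

async function main() {
  // Without await: we get the Promise object itself, not the title
  const withoutAwait = getTitle();
  console.log(withoutAwait instanceof Promise); // true

  // With await: execution pauses until the Promise settles, then we get the value
  const withAwait = await getTitle();
  console.log(typeof withAwait, withAwait); // string Example Domain
}

main().catch(console.error);
```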

Best Practices for XHR Request Capture

When capturing XHR or Fetch requests, always use the proper keywords. Define your main function (ours was `scrapeData()`) as `async`, and inside it, `await` every asynchronous operation.

Think back to the following:

- `await playwright.chromium.launch()`
- `await browser.newPage()`
- `await page.route()`
- `await page.goto()`
- `await page.waitForTimeout()`

When handling routes with if/else statements, make sure every branch either blocks the route with:

`route.abort()`

Or allows the route with:

`route.continue()`

Playwright holds each intercepted request until your handler resolves it. If some branch of your if/else logic never calls `route.abort()` or `route.continue()`, those requests stay pending, and your browser can launch and simply hang there without finishing loading the page.
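One way to make sure no branch is forgotten is to separate the decision (should this request be blocked?) from the handling, so the handler has exactly two exits. The sketch below assumes Playwright's `Route` and `Request` objects; `createRouteHandler()` and the `blockBackgroundPosts()` policy are hypothetical names, with the policy mirroring our Amazon example.

```javascript
// Hypothetical helper: wrap a yes/no blocking policy in a route handler that
// always resolves the route, so no intercepted request is left pending.
function createRouteHandler(shouldBlock) {
  const stats = { blocked: 0, continued: 0 };

  async function handler(route, request) {
    if (shouldBlock(request)) {
      stats.blocked++;
      await route.abort();
    } else {
      stats.continued++;
      await route.continue();
    }
  }

  return { stats, handler };
}

// Example policy, mirroring the Amazon script: block XHR/Fetch POST requests
function blockBackgroundPosts(request) {
  const type = request.resourceType();
  return (type === "xhr" || type === "fetch") && request.method() === "POST";
}
```

With Playwright, you would register it as `const { stats, handler } = createRouteHandler(blockBackgroundPosts);` followed by `await page.route("**/*", handler);`, then log `stats` once the run is over. Changing what gets blocked then only means writing a different policy function.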

Conclusion

You now know how to handle XHR and Fetch requests using NodeJS Playwright. This is a very useful and unique skill for your tech stack and you can apply it in all sorts of creative ways. Go build something!

More Web Scraping Guides

Want to learn more? Take a look at the links below!