Playwright Guide - How To Bypass Cloudflare with Playwright

Cloudflare is a network of computers designed to protect systems from DDOS (distributed denial of service) attacks and malicious bots. Cloudflare poses a significant challenge for developers and data analysts seeking to automate browser interactions or extract data programmatically.

In this comprehensive guide, we delve into how to bypass Cloudflare using Playwright, covering everything from understanding how Cloudflare operates to implementing advanced strategies for bypassing its protections, ensuring you have all the knowledge necessary to overcome this hurdle.

- TLDR - How to Bypass Cloudflare with Playwright

- Understanding Cloudflare's Challenges

- Strategies to Bypass Cloudflare With Playwright

- Real-Case Study: Scrape a Website Protected by Cloudflare Using Playwright

- Conclusion

- More Playwright Web Scraping Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

TLDR: How to Bypass Cloudflare with Playwright

Before we deep dive into understanding how Cloudflare works and detects bots, here's a quick script that can help you bypass Cloudflare:

// Import Playwright

const playwright = require("playwright");

// Residential Proxy Aggregator Credentials

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY";

// Define async function to scrape the data

async function main() {

// Launch Playwright with proxy settings

const browser = await playwright.chromium.launch({

headless: false,

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

// Launch a new browser page

const page = await browser.newPage();

// Go to the site

await page.goto("https://whatismybrowser.com");

// Wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

// Close the browser

await browser.close();

}

main();

In the code above, we simply create a function to convert a regular url into a proxied one, and let the proxy server handle all the tedious stuff for us.

getScrapeOpsUrl()converts regular urls into proxied ones- We then pass

getScrapeOpsUrl(ourUrlName)into thepage.goto()function.

From there, everything else is gravy. The ScrapeOps Proxy takes care of everything and we can just continue to scrape the web focusing on the difficult things we need to do!

Understanding Cloudflare's Challenges

Cloudflare is used a a reverse proxy. This means that cloudflare sits between the client (your browser) and the actual web server. When you access a Cloudflare protected site, your browser talks to the Cloudflare server, and if the Cloudflare server believes that your are not a threat to the site, it forwards your requests to the actual server, or origin server that the site runs on. Then Cloudflare receives the output(s) from the origin server and sends them back to the client (your browser).

Cloudflare uses several methods to determine whether or not you are trying to access a site legitimately. As is stated on Cloudflare Docs, one of the goals of Cloudflare is to "reduce the lifetimes of human time spent solving CAPTCHAs accross the internet".

According to their documentation, Cloudflare implements two types of challenges:

- JS Challenge

- Interactive Challenges:

- Click a button

- Solve a CAPTCHA

JS Challenge

The JS Challenge is a Managed Challenge, in other words, the challenge is managed by the browser itself. This type of challenge aligns with their goal of reducing the amount of time humans waste on CAPTCHAs. The Cloudflare server sends a challenge (written in JavaScript) for your browser to solve automatically. Your browser solves it, and you are given access to the site.

Interactive Challenges

Interactive Challenges while not recommended by Cloudflare, are also a solution that Cloudflare employs to combat malicious traffic. An interactive challenge is designed to require some level of human interaction. These challenges range in complexity from simply clicking a button, to wasting time on a CAPTCHA.

When determining which type of challenge to implement, Cloudflare uses a mix of passive and active techniques.

Passive techniques include:

- Scrutinizing HTTP headers

- IP address reputations

- TLS and HTTP/2 fingerprinting

Active techniques include:

- CAPTCHAs

- Canvas fingerprinting

- Browser queries

Strategies to Bypass Cloudflare With Playwright

When attempting to bypass Cloudflare, there are a whole slew of tactics we can use. We can:

- Strategy 1: Scrape Origin Server

- Strategy 2: Scrape Google Cache Version

- Strategy 3: Be More Human

- Strategy 4: Fortify Playwright

- Strategy 5: Use Playwright Extra Stealth

- Strategy 6: Use Smart Proxy Like ScrapeOps

Strategy 1: Scrape Origin Server

While Cloudflare itself is an excellent gatekeeper, sometimes, if we know the IP address of the actual site, we can completely bypass Cloudflare entirely by navigating to the IP of the actual server itself instead of the domain name.

In the example below, we'll hit PetsAtHome.com using Playwright. Instead of using its domain name, we'll use its IP address. If if doesn't work for your site, don't worry!

This method only works if the site is not properly hosted.

//import playwright

const playwright = require("playwright");

//define async function to scrape the data

async function main() {

//launch playwright with a head

const browser = await playwright.chromium.launch({

headless: false

});

//open a new page

const page = await browser.newPage();

//go to the site

await page.goto("http://88.211.26.45/");

//wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

//close the browser

await browser.close();

}

main();

Strategy 2: Scrape Google Cache Version

When Google crawls sites for its SEO rankings, it saves a cached version of each site. We can actually scrape some of the site data by navigating to this cached version instead of the actual site. This method is not entirely accurate because we aren't even accessing the true site. Instead we are relying on Google's most recent snapshot of the site.

We can get the cached version by modifying our url. https://petsathome.com would then become https://webcache.googleusercontent.com/search?q=cache:https://www.petsathome.com/. Similar to our previous method of scraping the origin server, this method is not entirely reliable, and some sites even stop Google from caching their sites.

The code below accesses Google's cached version of the site.

//import playwright

const playwright = require("playwright");

//define async function to scrape the data

async function main() {

//launch playwright with a head

const browser = await playwright.chromium.launch({

headless: false

});

//open a new page

const page = await browser.newPage();

//go to the site

await page.goto("https://webcache.googleusercontent.com/search?q=cache:https://www.petsathome.com/");

//wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

//close the browser

await browser.close();

}

main();

Strategy 3: Be More Human

Our third method of bypassing Cloudflare is actually a really simple concept, but perhaps the hardest to implement correctly. Cloudflare is designed to block non-human traffic. To be more human, we can add things into our script that actual humans would do. This method is slightly more difficult because not only do we need to programmatically interact with the page, we need to do so the way a human would.

If you know anything about humans (you're probably human so you definitely do), you know that humans are random. Random is extremely difficult to program, when we write a script, we are writing a set of pre-determined actions, which is the opposite of random.

//import playwright

const playwright = require("playwright");

//get random integer function

function getRandomInt(min, max) {

min = Math.ceil(min);

max = Math.floor(max);

return Math.floor(Math.random() * (max - min + 1)) + min;

}

//define async function to scrape the data

async function main() {

//launch playwright with a head

const browser = await playwright.chromium.launch({

headless: false

});

//open a new page

const page = await browser.newPage();

//go to the site

await page.goto("https://www.petsathome.com/");

//wait until the page has loaded

await page.waitForLoadState("networkidle");

//we will look at 5 random spots on the page

var scrollActionsCount = 5;

//while our count is great than zero

while (scrollActionsCount > 0) {

//generate a random amount to scroll down

const scrollDownAmount = getRandomInt(10, 1000);

//scroll down by the random amount

await page.mouse.wheel(0, scrollDownAmount);

//create a random wait time between 1 and 10 seconds

const randomWait = getRandomInt(1000, 10000);

//create a random amount to scroll back up the page

const scrollUpAmount = 0 - getRandomInt(10, 1000);

//wait the randomWait time that we created

await page.waitForTimeout(randomWait);

//scroll up by the random amount

await page.mouse.wheel(0, scrollUpAmount);

//decrement the scrollActionsCount

scrollActionsCount--

}

//the actions count has reached 0, exit the loop

//get the links on the page

const links = await page.$$("a");

//iterate through the links

for (const link of links) {

//if a link is visible and enabled, click on it

if (await link.isVisible() && await link.isEnabled()) {

await link.click();

await page.goBack();

}

}

//close the browser

await browser.close();

}

main();

In the code above, we create a getRandomInt() function. We then use this function to create some random activity on the page:

await page.mouse.wheel(0, scrollDownAmount);scrolls down by a random amountawait page.waitForTimeout(randomWait);waits for a random amount of timeawait page.mouse.wheel(0, scrollUpAmount);scrolls back up the page by a random amount After our random scrolling, we retrieve all the links on the page. Pay attention to the following code:const links = await page.$$("a")finds allaelements and returns them in a listawait link.isVisible() && await link.isEnabled()ensures that a link is visible and enabled before we click on it

As you can see, trying to add a fake human to your scraper is both very tedious and inefficient. You spend more time worrying about what and where to click than you might spend just looking the page up manually!

Strategy 4: Fortify Playwright

A better attempt would be to fortify Playwright. To fortify our Playwright instance, can make use of fake user agents and residential/mobile proxies. We would typically launch a headless instance of Playwright, set a fake user agent and purchase a plan from a residential proxy provider. When using residential proxies, costs add up very fast. Providers usually charge per GB of bandwidth used.

The code example below sets up a fake user agent for our browser and tests it. We retrieve user agents from ScrapeOps. We use axios to perform a basic GET request. We also use the ScrapeOps Proxy to conceal any further information about our scraper.

You can install axios to your NodeJS project with npm install axios.

// Import Playwright and Axios

const playwright = require("playwright");

const axios = require("axios");

// Residential Proxy Aggregator Credentials

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY";

// Function for a random integer

function getRandomInt(min, max) {

min = Math.ceil(min);

max = Math.floor(max);

return Math.floor(Math.random() * (max - min + 1)) + min;

}

// Function to fetch a random User-Agent (Replace with your own API or static list if needed)

async function getRandomUserAgent() {

try {

const response = await axios.get("https://fake-useragent.herokuapp.com/browsers/0.1.11");

const userAgents = response.data.browsers.chrome; // Adjust based on preferred browsers

return userAgents[getRandomInt(0, userAgents.length - 1)];

} catch (error) {

console.error("Error fetching user agents:", error);

return "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"; // Default User-Agent

}

}

// Define async function to scrape the data

async function main() {

// Get a random User-Agent

const randomUserAgent = await getRandomUserAgent();

// Launch Playwright with residential proxy settings

const browser = await playwright.chromium.launch({

headless: false,

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

// Create a new context with the random User-Agent

const context = await browser.newContext({

userAgent: randomUserAgent

});

// Launch a new browser page with the newly created context

const page = await context.newPage();

// Go to the site

await page.goto("https://whatismybrowser.com");

// Wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

// Close the browser

await browser.close();

}

main();

In the code above, there are just a few differences that you really need to pay attenton to:

- We perform a simple

GETrequest to the ScrapeOps API. - We receive a

responsethat contains a list of fake user agents for us to try. - We select a random user agent from our list.

- We use

browser.Context()to set our fake user agent. - Instead of calling

browser.newPage(), we callcontext.newPage(), which runs our scraper with the context that we just created. - When passing the url into

page.goto(), we actually pass it intogetScrapeOpsUrl()and pass the result of that function intopage.goto()so we can navigate to the proxied result of the page.

The actions above conceal not only our browser type, but also our IP address from the actual website.

Strategy 5: Use Playwright Extra Stealth

In this section, we'll run Playwright but use the Puppeteer Extra Stealth plugin alongside it to further increase our security. While designed for Puppeteer, we can use it as a plugin with Playwright in order to bypass bot detectors. It is built specifically to make our browser appear more human.

We can install them both with npm.

npm install playwright-extra puppeteer-extra-plugin-stealth

Take a look a the example below.

//import playwright

const {chromium} = require("playwright-extra");

//import stealth

const stealth = require("puppeteer-extra-plugin-stealth")();

//define async function to scrape the data

async function main() {

//set chromium to use the stealth plugin

chromium.use(stealth);

//launch playwright with a head

const browser = await chromium.launch({

headless: false,

});

//open a new browser page

const page = await browser.newPage();

//go to the site

await page.goto("https://whatismybrowser.com");

//wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

//close the browser

await browser.close();

}

main();

Once again, this script only has a few subtle differences (mainly in our startup):

- The way we import Playwright itself

const {chromium} = require("playwright-extra");. You need to import Chromium inside of of the curly braces. If you don't, Playwright will fail to run. - The way we import Stealth,

const stealth = require("puppeteer-extra-plugin-stealth")();. While syntactically, this is about as ugly as it can get, the()at the end of therequire()statement makes it a function. Without the additional (), the Stealth plugin will fail. - Before running the browser, we add Stealth as a plugin with with

chromium.use(stealth);

Strategy 6: Use Smart Proxy Like ScrapeOps

In Strategy 4, we set up a combination of the ScrapeOps proxy and fake user agents. In this section, we'll dive deeper into the ScrapeOps proxy, and why it is your one-stop solution for scraping the web.

Often, when browsing a site, the server keeps track at the requests that are coming from our IP address and how fast they're coming. A multitude of requests coming from a single IP in a very short period of time is obviously a bot. If it moves faster than a human ever could, there's no way it's a human. Proxies, such as the ScrapeOps Proxy, rotate IP addresses by default.

In short, even though you're making a bunch of different requests from your location, to the Cloudflare server, it looks like all these requests are coming from different locations and are therefore different users.

These proxies also avoid rate limiting and IP bans because as you read in the previous paragraph, the requests are all coming from different locations. Another reason proxies make us appear more human, they slow things down.

When you send a batch of requests all at once to the same server, you're obviously a bot. When using a proxy traffic moves in the following steps:

- We query the proxy

- The proxy calls the site

- The proxy recieves the result

- The proxy sends the result back to us

- We interpret the result and create a new request

- Repeat steps 1 through 5

In the example below, we also use the ScrapeOps API, but this time we use it without manually setting fake user agents. If you run the code multiple times and watch your browser, you should notice that whatismybrowser results of your browser and OS come up differently on multiple runs.

The reason for this: ScrapeOps takes care of all the tedious and boring stuff for you! All you have to do is scrape the web like you normally would!

// Import Playwright

const playwright = require("playwright");

// Residential Proxy Credentials

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY"; // Example: us.smartproxy.com:10000

// Define an async function to scrape the data

async function main() {

// Launch Playwright with a proxy

const browser = await playwright.chromium.launch({

headless: false, // Set to true for headless browsing

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

// Launch a new browser page

const page = await browser.newPage();

// Go to the site

await page.goto("https://whatismybrowser.com");

// Wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

// Close the browser

await browser.close();

}

// Run the script

main();

Cloudflare is the most common anti-bot system being used by websites today, and bypassing it depends on which security settings the website has enabled.

To combat this, we offer 3 different Cloudflare bypasses designed to solve the Cloudflare challenges at each security level.

| Security Level | Bypass | API Credits | Description |

|---|---|---|---|

| Low | cloudflare_level_1 | 10 | Use to bypass Cloudflare protected sites with low security settings enabled. |

| Medium | cloudflare_level_2 | 35 | Use to bypass Cloudflare protected sites with medium security settings enabled. On large plans the credit multiple will be increased to maintain a flat rate of $3.50 per thousand requests. |

| High | cloudflare_level_3 | 50 | Use to bypass Cloudflare protected sites with high security settings enabled. On large plans the credit multiple will be increased to maintain a flat rate of $4 per thousand requests. |

The script above is our most simple and yet it is extremely effective. The major difference between this script and normal Playwright operation:

getScrapeOpsUrl(): this function takes a regular url as an argument and returns a proxied url for us to use.- We pass our url into

getScrapeOpsUrl()and pass that result intopage.goto()

Everything else is just Playwright as normal... with almost zero overhead!

Real-Case Study: Scrape a Website Protected by Cloudflare Using Playwright

Next, we'll actually scrape some data PetsAtHome.com. We'll compare the standard method of Scraping (without any of the strategies above), and the ScrapeOps Proxy.

The code below attempts to scrape the site with no additional strategies.

//import playwright

const playwright = require("playwright");

//define async function to scrape the data

async function main() {

//launch playwright with a head

const browser = await playwright.chromium.launch({

headless: false,

});

//launch a new browser page

const page = await browser.newPage();

//go to the site

await page.goto("https://www.petsathome.com");

//wait until the network is idle

await page.waitForLoadState("networkidle");

await page.screenshot({path: "standard.png"})

//close the browser

await browser.close();

}

main();

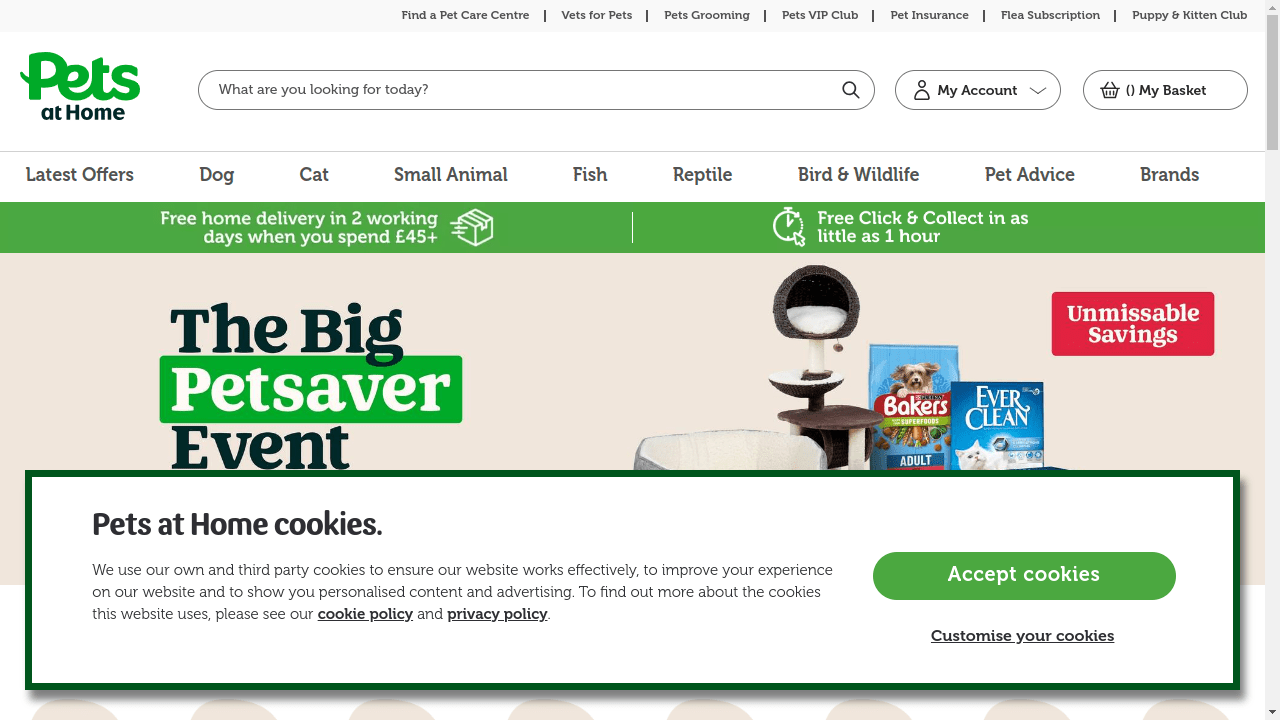

Here is the screenshot:

As you can see in the image above, we are detected and we need to complete one of Cloudflare's challenges. Cloudflare did its job and our scraper did not.

Here is an example of the same scraper, but we'll use the ScrapeOps Proxy.

// Import Playwright

const playwright = require("playwright");

// Residential Proxy Credentials

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY"; // Example: us.smartproxy.com:10000

// Define an async function to scrape the data

async function main() {

// Launch Playwright with a proxy

const browser = await playwright.chromium.launch({

headless: false, // Set to true for headless browsing

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

// Launch a new browser page

const page = await browser.newPage();

// Go to the site

await page.goto("https://www.petsathome.com");

// Wait until the DOM has loaded

await page.waitForLoadState("domcontentloaded");

// Take a screenshot

await page.screenshot({ path: "proxied.png" });

// Close the browser

await browser.close();

}

// Run the script

main();

The code above is almost identical to vanilla Playwright scraping. The main differences:

getScrapeOpsUrl()is once again used to convert our normal url into a proxied one.- We use

await page.waitForLoadState("domcontentloaded");instead of"networkidle"because the proxy slows down our results. When we wait for the network to go idle using a proxy, we will often receive a timeout error because there are other things happening under the hood.

Here are the results when using the ScrapeOps Proxy:

This method allows us to bypass Cloudflare in the simplest way possible and continue on with the important logic we need in our code.

Conclusion

You've reached the end of this tutorial! You now know various methods for attempting to bypass Cloudflare and you understand that the easiest way to do so is to use a Proxy. If you would like to get more information, you can visit the Playwright Documentation and Cloudflare Documentation.

More Playwright Web Scraping Guides

Want to learn More? Take a look at the links below: