Python Selenium Beginners Series Part 1: How To Build Our First Scraper

When it comes to web scraping, Python is the go-to language due to its highly active community, excellent web scraping libraries, and popularity within the data science community.

Many articles online show how to create a basic Python scraper. However, few articles walk you through the full process of building a production-ready scraper.

To address this gap, we are doing a 6-Part Python Selenium Beginner Series. In this series, we'll build a Python scraping project from scratch, covering everything from creating the scraper to making it production-ready.

Python Selenium 6-Part Beginner Series

- Part 1: Basic Python Selenium Scraper - We'll go over the basics of scraping with Python and build our first Python scraper. (This article)

- Part 2: Cleaning Dirty Data & Dealing With Edge Cases - Web data can be messy, unstructured, and full of edge cases. In this tutorial, we'll make our scraper robust to these edge cases using data classes and data cleaning pipelines.

- Part 3: Storing Data in AWS S3, MySQL & Postgres DBs - There are many different ways to store the data we scrape, from databases and CSV files to JSON and S3 buckets. We'll explore several of them and discuss their pros and cons and the situations in which you would use each.

- Part 4: Managing Retries & Concurrency - Make our scraper more robust and scalable by handling failed requests and using concurrency.

- Part 5: Faking User-Agents & Browser Headers - Make our scraper production-ready by using fake user agents and browser headers so that it looks more like a real user. (Coming Soon)

- Part 6: Using Proxies To Avoid Getting Blocked - Explore how to use proxies to bypass anti-bot systems by hiding your real IP address and location. (Coming Soon)

In this tutorial, Part 1: Basic Python Selenium Scraper, we're going to cover:

- Our Python Web Scraping Stack

- How to Set Up Our Python Environment

- Creating Our Scraper Project

- Laying Out Our Python Scraper

- Launching the Browser

- Switching to Headless Mode

- Extracting Data

- Saving Data to CSV

- Navigating to the Next Page

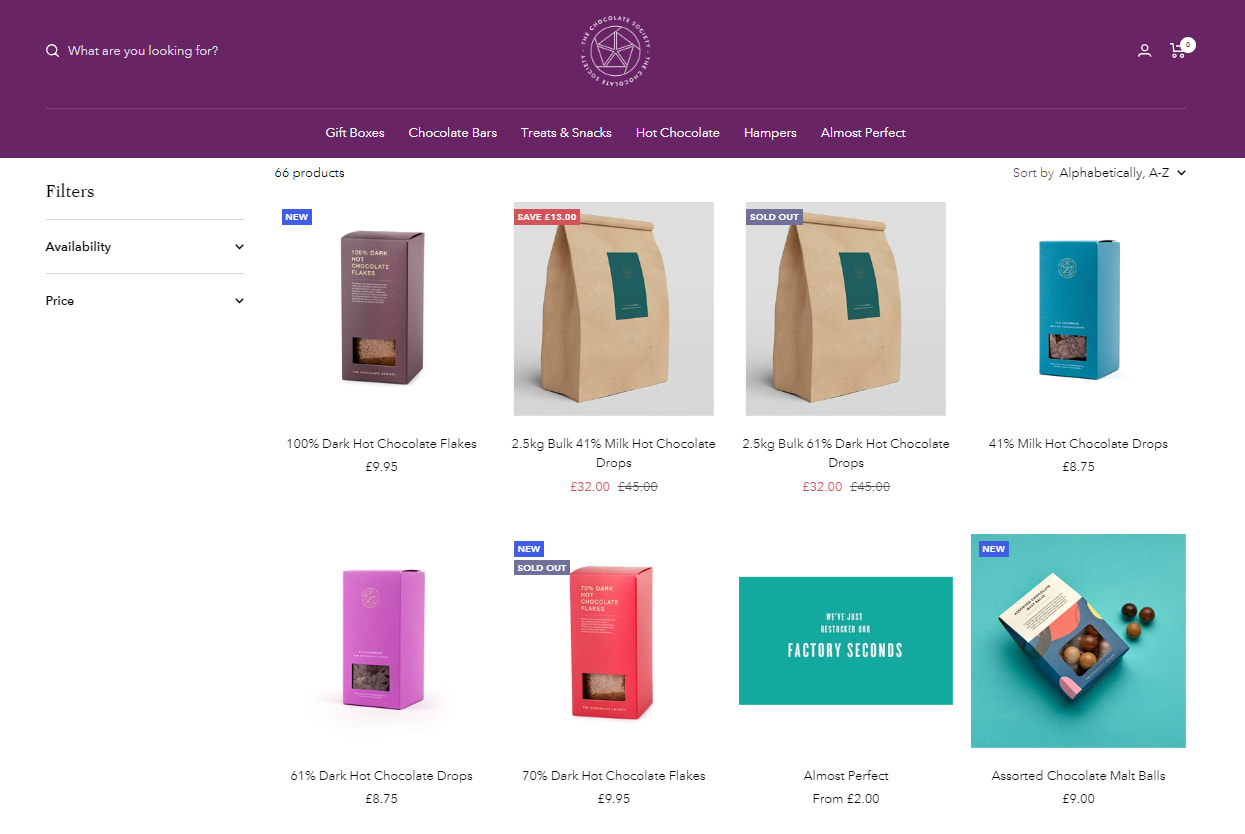

For this series, we'll be scraping the products from Chocolate.co.uk because it's a good example of how to approach scraping an e-commerce store. Plus, who doesn't love chocolate?


Our Python Web Scraping Stack

To scrape data from a website, we need two key components: an HTTP client and a parsing library. The HTTP client is responsible for sending a request to retrieve the HTML content of the web page, while the parsing library is used to extract the data from that HTML content.
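To make the "parsing library" half concrete, here is a minimal sketch that uses only Python's standard-library html.parser on a made-up HTML fragment. The markup, the class name title, and the names TitleParser and extract_titles are illustrative assumptions and not part of this series' scraper; a real project would more likely use a dedicated parsing library such as BeautifulSoup or lxml.

```python
from html.parser import HTMLParser

# Hypothetical HTML, standing in for what an HTTP client would return.
SAMPLE_HTML = """
<div class="product">
  <a class="title" href="/products/example-bar">Example Bar</a>
  <span class="price">5.00</span>
</div>
<div class="product">
  <a class="title" href="/products/example-box">Example Box</a>
  <span class="price">12.50</span>
</div>
"""


class TitleParser(HTMLParser):
    """Collects the text inside every <a class="title"> tag."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "a" and "title" in classes:
            self._in_title = True

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.titles.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_title = False


def extract_titles(html):
    """Parse an HTML string and return the product titles found in it."""
    parser = TitleParser()
    parser.feed(html)
    return parser.titles


print(extract_titles(SAMPLE_HTML))  # ['Example Bar', 'Example Box']
```

The HTTP client's only job would be to produce the string passed to extract_titles; everything after that is parsing.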

Due to the popularity of Python for web scraping, we have numerous options for both. For the HTTP client, we can use libraries like Requests, HTTPX, or AIOHTTP (an asynchronous HTTP client). Similarly, for the parsing library, we can use BeautifulSoup, lxml, Parsel, and others.

Alternatively, we could use Python web scraping libraries/frameworks such as Scrapy, Selenium, and Requests-HTML that combine both functionalities of making HTTP requests and parsing the retrieved data.

Each stack has its pros and cons. However, for this beginner series, we'll be using Python Selenium. It is a popular open-source library that offers cross-language and cross-browser support and is particularly useful for handling dynamic websites, complex interactions, and browser-specific rendering, such as JavaScript-heavy elements.

How to Set Up Our Python Environment

Before we start building our scraper, we need to set up our Python environment. Here's how you can do it:

Step 1: Set up your Python Environment

To prevent any potential version conflicts in the future, it is recommended to create a distinct virtual environment for each of your Python projects. This approach guarantees that any packages you install for a particular project are isolated from other projects.

For macOS or Linux:

- Update your package index and install the tree utility (the apt commands below apply to Debian/Ubuntu-based Linux):

$ sudo apt-get update

$ sudo apt install tree

- Install python3-venv if you haven't done so already:

$ sudo apt install -y python3-venv

- Create and activate your Python virtual environment:

$ python3 -m venv venv

$ source venv/bin/activate

For Windows:

- Install virtualenv:

D:\selenium-series> pip install virtualenv

- Navigate to the folder where you want to create the virtual environment and run the following command to create a virtual environment named myenv:

D:\selenium-series> python -m venv myenv

- Activate the virtual environment:

D:\selenium-series> myenv\Scripts\activate
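Once the environment is activated, a quick way to confirm that Python is really running inside it is to compare sys.prefix with sys.base_prefix. The snippet below is a small sketch of that check; the function name in_virtualenv is our own, and the check assumes an environment created with venv (or a recent virtualenv) on Python 3.

```python
import sys


def in_virtualenv():
    """Return True when the interpreter is running inside a virtual environment.

    Inside a venv, sys.prefix points at the environment folder while
    sys.base_prefix still points at the base Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Interpreter in use:", sys.executable)
```

If this prints False after activation, the shell is probably still picking up the system-wide interpreter.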

Step 2: Install Python Selenium and WebDriver

Finally, we’ll install Python Selenium and WebDriver in our virtual environment. WebDriver acts as an interface that allows you to control and interact with web browsers.

There are two ways to install Selenium and WebDriver:

- WebDriver Manager (Recommended): This method is simpler and recommended for beginners, as it automatically downloads and manages the appropriate WebDriver version. Open the command prompt and run:

pip install selenium==4.17.2 webdriver-manager==4.0.1

- Manually Setting up WebDriver: This method requires more manual setup. To begin, download the Chrome driver that matches your Chrome browser version. Once the Chrome driver is set up in your preferred location, you can proceed with installing Python Selenium:

pip install selenium==4.17.2
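To double-check that the pinned versions were actually installed into the active environment, one option is to query package metadata with the standard-library importlib.metadata module (Python 3.8+). This is only a sketch; the helper names installed_version and report are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


def report(expected):
    """Compare wanted versions against what is installed, one line per package."""
    lines = []
    for name, wanted in expected.items():
        found = installed_version(name)
        status = "ok" if found == wanted else "mismatch"
        lines.append(f"{name}: wanted {wanted}, found {found} ({status})")
    return lines


if __name__ == "__main__":
    # Versions taken from the pip commands in this tutorial.
    for line in report({"selenium": "4.17.2", "webdriver-manager": "4.0.1"}):
        print(line)
```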

Creating Our Scraper Project

Now that our environment is set up, let's dive into the fun stuff: building our first Python scraper! The first step is creating our scraper script. We'll create a new file called chocolate_scraper.py within the ChocolateScraper project folder.

ChocolateScraper

└── chocolate_scraper.py

This chocolate_scraper.py file will contain all the code we use to scrape the Chocolate.co.uk website. In the future, we can run this scraper by entering the following command into the command line:

python chocolate_scraper.py

Laying Out Our Python Scraper

Now that we have our libraries installed and chocolate_scraper.py created, let's lay out our scraper.

from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium import webdriver

list_of_urls = [
    'https://www.chocolate.co.uk/collections/all',
]

scraped_data = []


def start_scrape():
    """
    Function to initiate the scraping process
    """
    for url in list_of_urls:
        # Perform scraping for each URL
        pass


def save_to_csv(data_list, filename="scraped_data.csv"):
    """
    Function to save scraped data to a CSV file
    """
    pass


if __name__ == "__main__":
    start_scrape()
    save_to_csv(scraped_data)

Let's go over what we've just defined:

- We imported necessary classes from Selenium and WebDriver Manager to automate Chrome interactions and data retrieval.

- We created a list_of_urls containing the product pages we want to scrape.

- We defined a scraped_data list to store the extracted data.

- We created a start_scrape function where the scraping logic will be written.

- We defined a save_to_csv function to save scraped data in a CSV file.

- We created a __main__ block that will kick off our scraper when you run the script.

Launching the Browser

The first step is to open the browser and navigate to the website. This lets you retrieve the page's HTML so that you can extract the data you need. You can open the browser with the webdriver module.

from selenium import webdriver
from selenium.webdriver.chrome.service import Service

chromedriver_path = r"C:\Program Files\chromedriver.exe"
driver = webdriver.Chrome(service=Service(chromedriver_path))

list_of_urls = [
    "https://www.chocolate.co.uk/collections/all",
]

scraped_data = []


def start_scrape():
    for url in list_of_urls:
        driver.get(url)
        print(driver.page_source)


if __name__ == "__main__":
    start_scrape()
    driver.quit()

Here’s how the code works:

- driver = webdriver.Chrome(service=Service(chromedriver_path)) creates a new Chrome WebDriver instance using the ChromeDriver executable at the given path.

- driver.get(url) navigates to the provided URL.

- print(driver.page_source) prints the HTML source code of the loaded page.

Now, when you run the script, you'll see the HTML source code of the webpage printed to the console.

<html class="js" lang="en" dir="ltr" style="--window-height:515.3333129882812px;--announcement-bar-height:53.0625px;--header-height:152px;--header-height-without-bottom-nav:152px;">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width,initial-scale=1.0,height=device-height,minimum-scale=1.0,maximum-scale=1.0">

<meta name="theme-color" content="#682464">

<title>Products</title>

<link rel="canonical" href="https://www.chocolate.co.uk/collections/all">

<link rel="preconnect" href="https://cdn.shopify.com">

<link rel="dns-prefetch" href="https://productreviews.shopifycdn.com">

<link rel="dns-prefetch" href="https://www.google-analytics.com">

<link rel="preconnect" href="https://fonts.shopifycdn.com" crossorigin="">

<link rel="preload" as="style" href="//www.chocolate.co.uk/cdn/shop/t/60/assets/theme.css?v=88009966438304226991661266159">

<link rel="preload" as="script" href="//www.chocolate.co.uk/cdn/shop/t/60/assets/vendor.js?v=31715688253868339281661185416">

<link rel="preload" as="script" href="//www.chocolate.co.uk/cdn/shop/t/60/assets/theme.js?v=165761096224975728111661185416">

<meta property="og:type" content="website">

<meta property="og:title" content="Products">

<meta property="og:image" content="http://www.chocolate.co.uk/cdn/shop/files/Chocolate_Logo1_White-01-400-400_c4b78d19-83c5-4be0-8e5f-5be1eefa9386.png?v=1637350942">

<meta property="og:image:secure_url" content="https://www.chocolate.co.uk/cdn/shop/files/Chocolate_Logo1_White-01-400-400_c4b78d19-83c5-4be0-8e5f-5be1eefa9386.png?v=1637350942">

<meta property="og:image:width" content="1200">

<meta property="og:image:height" content="628">

<meta property="og:url" content="https://www.chocolate.co.uk/collections/all">

<meta property="og:site_name" content="The Chocolate Society">

<meta name="twitter:card" content="summary">

<meta name="twitter:title" content="Products">

<meta name="twitter:description" content="">

<meta name="twitter:image" content="https://www.chocolate.co.uk/cdn/shop/files/Chocolate_Logo1_White-01-400-400_c4b78d19-83c5-4be0-8e5f-5be1eefa9386_1200x1200_crop_center.png?v=1637350942">

<meta name="twitter:image:alt" content="">

</head>

</html>

...

...

...

...

Note that, in the above code, we use the Chrome WebDriver path to launch the browser. You can also use ChromeDriverManager from the webdriver-manager package, which downloads a matching driver for you and returns its path. See the code below:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager  # Extra import

# ChromeDriverManager().install() downloads the driver and returns its path
driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))

Switching to Headless Mode

If you want to switch to headless Chrome, which runs without a graphical user interface (GUI) and is useful for automation and server-side tasks, you need to first create an Options object. Then, use the add_argument method on the Options object to set the --headless flag.

from selenium import webdriver
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options  # Extra import

options = Options()
options.add_argument("--headless")

driver = webdriver.Chrome(
    options=options, service=Service(ChromeDriverManager().install())
)
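While developing, it can be handy to flip between headless and visible mode without editing the script each time. The sketch below keeps the argument list in a plain function so it can be inspected or tested on its own; chrome_arguments and build_options are hypothetical helper names, and the --window-size value is just an example setting, not something this tutorial requires.

```python
def chrome_arguments(headless=True, window_size=(1920, 1080)):
    """Return the Chrome command-line arguments for the requested mode."""
    width, height = window_size
    arguments = [f"--window-size={width},{height}"]
    if headless:
        arguments.append("--headless")
    return arguments


def build_options(headless=True):
    """Create a Selenium Options object from chrome_arguments().

    Selenium is imported here, inside the function, so that
    chrome_arguments() can be used even where Selenium is not installed.
    """
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for argument in chrome_arguments(headless):
        options.add_argument(argument)
    return options


print(chrome_arguments(headless=True))   # ['--window-size=1920,1080', '--headless']
print(chrome_arguments(headless=False))  # ['--window-size=1920,1080']
```

The object returned by build_options() can be passed to webdriver.Chrome through the options parameter in the same way as the Options object in the snippet above.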

Extracting Data

Let's update our scraper to extract the desired data. We'll do this by using class names and CSS selectors.

We’ll use the find_elements and find_element methods to locate specific elements. The find_elements method searches for and returns a list of all elements that match the given criteria, while the find_element method searches for and returns the first element that matches the criteria.
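One practical consequence of this difference: when nothing matches, find_elements returns an empty list, whereas find_element raises a NoSuchElementException. The sketch below uses that to read optional text safely. first_text_or_default is a hypothetical helper, and FakeElement is a stand-in we made up so the example runs without a browser; with Selenium you would pass a real driver or element and By.CLASS_NAME, whose value is the string "class name".

```python
def first_text_or_default(parent, by, value, default=""):
    """Return the text of the first match, or `default` when nothing matches."""
    matches = parent.find_elements(by, value)
    return matches[0].text if matches else default


class FakeElement:
    """Hypothetical stand-in for a Selenium WebElement (demonstration only)."""

    def __init__(self, text="", children=None):
        self.text = text
        self._children = children or {}

    def find_elements(self, by, value):
        # Like Selenium, return an empty list when there is no match.
        return self._children.get((by, value), [])


product = FakeElement(children={("class name", "price"): [FakeElement("£9.95")]})

print(first_text_or_default(product, "class name", "price"))         # £9.95
print(first_text_or_default(product, "class name", "badge", "n/a"))  # n/a
```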

Find Product Selectors

To extract product details using selectors, open the website and then open the developer tools console (right-click and choose "Inspect" or "Inspect Element").

Using the inspector, hover over a product and examine its IDs and classes. Each product is wrapped in its own product-item component.

The line of code below finds all the elements on the web page that have the class name product-item. The products variable stores a list of these elements. Currently, it holds 24 products, representing all products on the first page.

products = driver.find_elements(By.CLASS_NAME, "product-item")

print(len(products)) # 24

Extract Product Details

Now, let's extract the name, price, and URL of each item in the product list. We'll use the first product (products[0]) to test our selectors, and then iterate through the whole list when we update the scraper code.

Single Product: Get a single product from the list.

product = products[0]

Name: Get the name of the product with the product-item-meta__title class name.

name = product.find_element(By.CLASS_NAME, "product-item-meta__title").text

## --> '100% Dark Hot Chocolate Flakes'

Price: Get the price of the product with the price class name.

price = product.find_element(By.CLASS_NAME, "price").text

## --> 'Sale price\n£9.95'

The price data contains some unwanted text. To remove it, we can use the .replace() method, which here replaces the "Sale price\n£" prefix with an empty string.

product.find_element(By.CLASS_NAME, "price").text.replace("Sale price\n£", "")

## --> '9.95'
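The .replace() call works as long as every price starts with exactly the same prefix. A slightly more defensive sketch, assuming each price string contains a single decimal number, pulls that number out with a regular expression instead. parse_price and PRICE_PATTERN are hypothetical names, not part of the scraper built in this tutorial.

```python
import re
from decimal import Decimal

# One or more digits, optionally followed by a decimal part.
PRICE_PATTERN = re.compile(r"\d+(?:\.\d+)?")


def parse_price(price_text):
    """Return the first number in `price_text` as a Decimal, or None if absent."""
    match = PRICE_PATTERN.search(price_text.replace(",", ""))
    return Decimal(match.group()) if match else None


print(parse_price("Sale price\n£9.95"))  # 9.95
print(parse_price("Sold out"))           # None
```

Returning a Decimal rather than a string keeps the value exact and makes it easy to sort or sum prices later.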

Product URL: Now, let's see how to extract the product URL for each item. We can get this using the get_attribute("href") method.

product.find_element(By.CLASS_NAME, "product-item-meta__title").get_attribute("href")

## --> 'https://www.chocolate.co.uk/products/100-dark-hot-chocolate-flakes'

Updated Scraper

Now that we've identified the correct CSS selectors, let's update our scraper code. The updated code will look like this:

from selenium import webdriver
from selenium.webdriver.common.by import By
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options

options = Options()
options.add_argument("--headless")

driver = webdriver.Chrome(
    options=options, service=Service(ChromeDriverManager().install())
)

list_of_urls = [
    "https://www.chocolate.co.uk/collections/all",
]

scraped_data = []


def start_scrape():
    print("Scraping started...")
    for url in list_of_urls:
        driver.get(url)
        products = driver.find_elements(By.CLASS_NAME, "product-item")
        for product in products:
            name = product.find_element(
                By.CLASS_NAME, "product-item-meta__title").text
            price_text = product.find_element(By.CLASS_NAME, "price").text
            price = price_text.replace("Sale price\n£", "")
            url = product.find_element(
                By.CLASS_NAME, "product-item-meta__title"
            ).get_attribute("href")
            scraped_data.append({"name": name, "price": price, "url": url})


if __name__ == "__main__":
    start_scrape()
    print(scraped_data)
    driver.quit()

Our scraper performs the following steps:

- Load the target URL: It uses driver.get(url) to load the website's URL in the browser for further processing and data extraction.

- Extract product elements: It finds all web elements with the class name "product-item" using driver.find_elements(By.CLASS_NAME, "product-item"). These elements represent individual product items on the webpage.

- Iterate and extract data: It iterates through each product element and extracts the name, price, and URL.

- Store extracted data: It adds the extracted information to the scraped_data list, where it can later be saved in a desired format like CSV, JSON, or a database.

When we run the scraper now, we should receive an output similar to this.

[{'name': '100% Dark Hot Chocolate Flakes', 'price': '9.95', 'url': 'https://www.chocolate.co.uk/products/100-dark-hot-chocolate-flakes'},

{'name': '2.5kg Bulk 41% Milk Hot Chocolate Drops', 'price': '32.00', 'url': 'https://www.chocolate.co.uk/products/2-5kg-bulk-of-our-41-milk-hot-chocolate-drops'},

{'name': '2.5kg Bulk 61% Dark Hot Chocolate Drops', 'price': '32.00', 'url': 'https://www.chocolate.co.uk/products/2-5kg-of-our-best-selling-61-dark-hot-chocolate-drops'},

{'name': '41% Milk Hot Chocolate Drops', 'price': '8.75', 'url': 'https://www.chocolate.co.uk/products/41-colombian-milk-hot-chocolate-drops'},

{'name': '61% Dark Hot Chocolate Drops', 'price': '8.75', 'url': 'https://www.chocolate.co.uk/products/62-dark-hot-chocolate'},

{'name': '70% Dark Hot Chocolate Flakes', 'price': '9.95', 'url': 'https://www.chocolate.co.uk/products/70-dark-hot-chocolate-flakes'},

{'name': 'Almost Perfect', 'price': '2.00', 'url': 'https://www.chocolate.co.uk/products/almost-perfect'},

{'name': 'Assorted Chocolate Malt Balls', 'price': '9.00', 'url': 'https://www.chocolate.co.uk/products/assorted-chocolate-malt-balls'},

{'name': 'Blonde Caramel', 'price': '5.00', 'url': 'https://www.chocolate.co.uk/products/blonde-caramel-chocolate-bar'},

...

...

...

]

Saving Data to CSV

In Part 3 of this beginner series, we'll dive deeper into saving data to various file formats and databases. But to start you off, let's create a simple function to save the data we've scraped and stored in scraped_data into a CSV file.

To do so, we'll create a function called save_to_csv(data_list, filename). This function takes two arguments: the scraped data and the desired filename for the CSV file (without the .csv extension, which the function adds itself).

Here’s the code snippet:

import csv


def save_to_csv(data_list, filename):
    keys = data_list[0].keys()
    with open(filename + '.csv', 'w', newline='') as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)

And update our scraper to use this function:

from selenium import webdriver
from selenium.webdriver.common.by import By
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
import csv

options = Options()
options.add_argument("--headless")  # Enables headless mode

# Using ChromeDriverManager to automatically download and install ChromeDriver
driver = webdriver.Chrome(
    options=options, service=Service(ChromeDriverManager().install())
)

list_of_urls = [
    "https://www.chocolate.co.uk/collections/all",
]

scraped_data = []


def start_scrape():
    print("Scraping started...")
    for url in list_of_urls:
        driver.get(url)
        products = driver.find_elements(By.CLASS_NAME, "product-item")
        for product in products:
            name = product.find_element(
                By.CLASS_NAME, "product-item-meta__title").text
            price_text = product.find_element(By.CLASS_NAME, "price").text
            price = price_text.replace("Sale price\n£", "")
            url = product.find_element(
                By.CLASS_NAME, "product-item-meta__title"
            ).get_attribute("href")
            scraped_data.append({"name": name, "price": price, "url": url})


def save_to_csv(data_list, filename):
    keys = data_list[0].keys()
    with open(filename + ".csv", "w", newline="") as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)


if __name__ == "__main__":
    start_scrape()
    save_to_csv(scraped_data, "scraped_data")
    driver.quit()  # Close the browser window after finishing

After running the scraper, it will create a scraped_data.csv file containing all the extracted data.

Here's an example of what the output will look like:

💡DATA QUALITY: As you may have noticed in the CSV file above, the price for the 'Almost Perfect' product (line 8) appears to have a data quality issue. We'll address this in Part 2: Data Cleaning & Edge Cases.
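A quick way to spot rows like this without opening the file by hand is to read the CSV back and flag any price that does not parse as a plain number. The sketch below writes two invented sample rows to a temporary file so that it runs on its own; the rows, the example.com URLs, and the helper names read_rows and rows_with_bad_price are illustrative assumptions, and the "N/A" value is not meant to represent the actual issue in the scraped data.

```python
import csv
import os
import tempfile


def read_rows(path):
    """Load a CSV file into a list of dictionaries keyed by the header row."""
    with open(path, newline="", encoding="utf-8") as csv_file:
        return list(csv.DictReader(csv_file))


def rows_with_bad_price(rows):
    """Return the rows whose 'price' field cannot be read as a number."""
    flagged = []
    for row in rows:
        try:
            float(row["price"])
        except ValueError:
            flagged.append(row)
    return flagged


# Invented sample data, written to a temporary file for the demonstration.
sample_rows = [
    {"name": "Example Bar", "price": "5.00", "url": "https://example.com/a"},
    {"name": "Example Box", "price": "N/A", "url": "https://example.com/b"},
]
path = os.path.join(tempfile.mkdtemp(), "sample.csv")
with open(path, "w", newline="", encoding="utf-8") as csv_file:
    writer = csv.DictWriter(csv_file, fieldnames=["name", "price", "url"])
    writer.writeheader()
    writer.writerows(sample_rows)

flagged = rows_with_bad_price(read_rows(path))
print([row["name"] for row in flagged])  # ['Example Box']
```

Pointing read_rows at scraped_data.csv instead of the temporary file would run the same check on the real output.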

Navigating to the "Next Page"

So far, the code works well, but it only retrieves products from the first page of the site specified in the list_of_urls list. The next logical step is to grab products from subsequent pages if they exist.

To accomplish this, we need to identify the correct CSS selector for the "next page" button and extract the URL from its href attribute.

driver.find_element(By.CSS_SELECTOR,"a[rel='next']").get_attribute("href")

We'll now update our scraper to identify and extract the URL for the next page, adding it to the list_of_urls for subsequent scraping. Here's the updated code:

from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.common.by import By
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import csv
import time

options = Options()
options.add_argument("--headless")  # Enables headless mode

# Using ChromeDriverManager to automatically download and install ChromeDriver
driver = webdriver.Chrome(
    options=options, service=Service(ChromeDriverManager().install())
)

list_of_urls = [
    "https://www.chocolate.co.uk/collections/all",
]

scraped_data = []


def start_scrape():
    print("Scraping started...")
    for url in list_of_urls:
        driver.get(url)

        # Wait up to 10 seconds for the product cards to become visible
        wait = WebDriverWait(driver, 10)
        products = wait.until(EC.visibility_of_all_elements_located(
            (By.CLASS_NAME, "product-item")))

        for product in products:
            name = product.find_element(
                By.CLASS_NAME, "product-item-meta__title").text
            price_text = product.find_element(By.CLASS_NAME, "price").text
            price = price_text.replace("Sale price\n£", "")
            url = product.find_element(
                By.CLASS_NAME, "product-item-meta__title"
            ).get_attribute("href")
            scraped_data.append({"name": name, "price": price, "url": url})

        try:
            # find_element raises NoSuchElementException on the last page
            next_page = driver.find_element(By.CSS_SELECTOR, "a[rel='next']")
            list_of_urls.append(next_page.get_attribute("href"))
            print("Scraped page", len(list_of_urls), "...")
            time.sleep(1)  # Add a brief pause between page loads
        except NoSuchElementException:
            print("No more pages found!")


def save_to_csv(data_list, filename):
    keys = data_list[0].keys()
    with open(filename + ".csv", "w", newline="") as output_file:
        dict_writer = csv.DictWriter(output_file, keys)
        dict_writer.writeheader()
        dict_writer.writerows(data_list)


if __name__ == "__main__":
    start_scrape()
    save_to_csv(scraped_data, "scraped_data")
    print("Scraping completed successfully!")
    driver.quit()  # Close the browser window after finishing
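The scraper above follows "next page" links by appending to list_of_urls while looping over it. The same idea can be sketched with an explicit queue and a set of visited URLs, which also guards against a site that links back to a page already seen. To keep the example runnable without a browser, the pages come from a made-up in-memory dictionary; FAKE_SITE, crawl, load_page, and max_pages are all hypothetical names, and in a real scraper load_page would wrap driver.get() and the extraction code.

```python
from collections import deque

# Invented three-page "site": each page lists products and its next-page URL.
FAKE_SITE = {
    "page-1": {"products": ["A", "B"], "next": "page-2"},
    "page-2": {"products": ["C"], "next": "page-3"},
    "page-3": {"products": ["D"], "next": None},
}


def crawl(start_url, load_page, max_pages=50):
    """Follow 'next' links from start_url, collecting products along the way."""
    queue = deque([start_url])
    visited = set()
    products = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        if url in visited:
            continue  # Skip pages we have already scraped
        visited.add(url)
        page = load_page(url)
        products.extend(page["products"])
        if page["next"]:
            queue.append(page["next"])
    return products


print(crawl("page-1", FAKE_SITE.__getitem__))  # ['A', 'B', 'C', 'D']
```

The max_pages cap is a simple safety net so that an unexpected pagination loop cannot keep the crawl running indefinitely.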

Next Steps

We hope this tutorial has given you enough of the basics to get up and running scraping a simple e-commerce site.

If you would like the code from this example, please check it out on GitHub.

In Part 2 of the series, we will work on Cleaning Dirty Data & Dealing With Edge Cases. Web data can be messy, unstructured, and full of edge cases, so we will make our scraper robust to these edge cases using data classes and data pipelines.