Scrapy Cloud: 3 Free & Cheap Alternatives

Created by Zyte (formerly Scrapinghub), Scrapy Cloud is a scalable cloud hosting solution for running & scheduling your Scrapy spiders.

Styled as a Heroku for Scrapy spiders, it makes deploying, scheduling and running your spiders in the cloud very easy.

It does offer a free plan, but because you can't schedule jobs with it and are limited to 1 hour of crawl time, it can't really be used for production scraping. For that you will need their Professional plan, which starts at $9 per Scrapy Unit per month (1 Scrapy Unit = 1 GB of RAM and 1 concurrent crawl).

This can be a bit expensive for small hobby projects and can quickly get very expensive when scraping at scale.
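
To make the cost at scale concrete, here is a rough sketch of the arithmetic. It assumes the $9 per Scrapy Unit price quoted above scales linearly and that each concurrent crawl needs exactly one unit; the function name and the linear-pricing assumption are ours for illustration, not Zyte's published pricing logic.

```python
# Rough monthly cost estimate for Scrapy Cloud's Professional plan.
# Assumption: price scales linearly at $9 per Scrapy Unit per month,
# and every concurrent crawl occupies one Scrapy Unit.
PRICE_PER_UNIT_USD = 9


def monthly_cost(concurrent_crawls: int) -> int:
    """Return the estimated monthly cost in USD for a number of concurrent crawls."""
    if concurrent_crawls < 0:
        raise ValueError("concurrent_crawls must not be negative")
    return concurrent_crawls * PRICE_PER_UNIT_USD


if __name__ == "__main__":
    for crawls in (1, 5, 20):
        print(f"{crawls} concurrent crawl(s): ${monthly_cost(crawls)} per month")
```

Under these assumptions, 20 concurrent crawls would come to $180 per month, compared with the flat cost of a single server in the alternatives below.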

So in this guide we are going to look at some of the best free and cheap Scrapy Cloud alternatives for running your scrapers in the cloud:

- ScrapeOps

- Scrapyd

- Schedule via Cron

We will also look at ways to make use of $100 in free credits with DigitalOcean and Vultr.

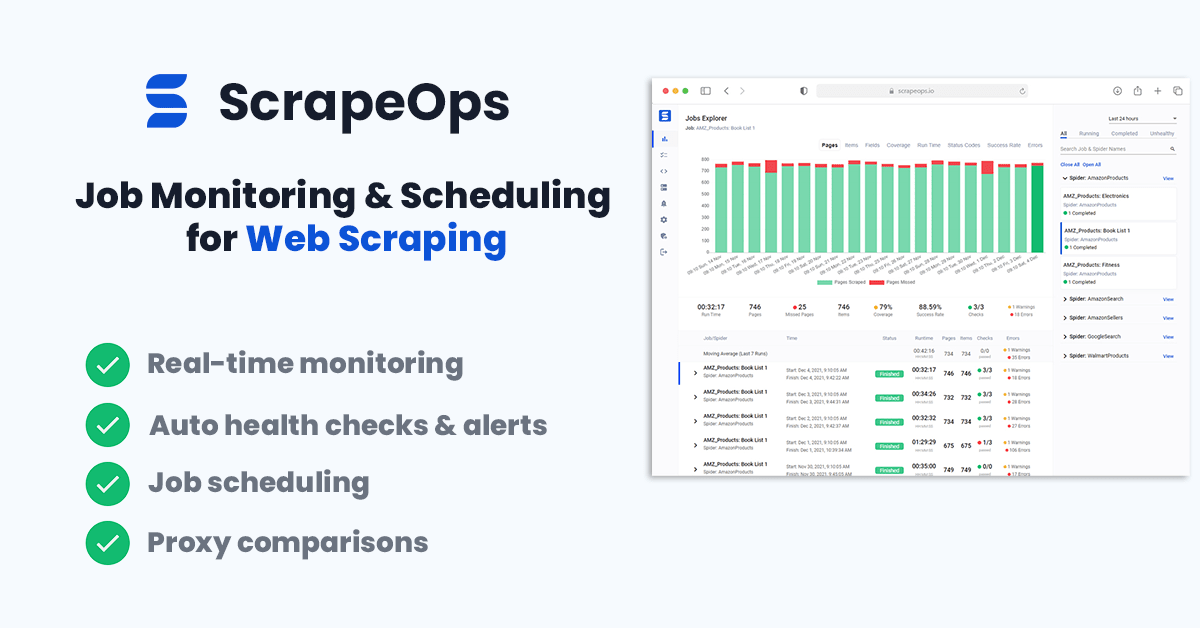

ScrapeOps

First on our list is ScrapeOps, your complete web scraping toolkit.

ScrapeOps allows you to connect to any server via SSH or Scrapyd and deploy, schedule, run, and monitor your spiders from the ScrapeOps dashboard.

From there ScrapeOps will allow you to create scheduled jobs and monitor them from your dashboard without having to worry about your servers.

Live demo here: ScrapeOps Demo

You can use any server/VM that can be connected to via SSH or supports running a Scrapyd server, including DigitalOcean and Vultr.

ScrapeOps' free plan allows you to connect to 1 server and run as many spiders/jobs as you would like from the ScrapeOps dashboard, making it perfect for small hobby or business projects.

The paid version starts at $4.95 per month, and allows you to connect to an unlimited number of servers.

ScrapeOps has a number of advantages over Scrapy Cloud:

- Price: When combined with a free Vultr or DigitalOcean server you can schedule your spiders to run in the cloud using ScrapeOps for free, whereas Scrapy Cloud starts at $9 per month and rises from there.

- Unlimited Concurrent Jobs: With Scrapy Cloud you can only have 1 job running at a time per $9 Scrapy Unit, whereas with ScrapeOps you can run as many jobs concurrently as your server can handle.

- Any Language: With ScrapeOps you can schedule & run any type of scraper on your server (Scrapy, Selenium, Puppeteer, etc.) whereas with Scrapy Cloud you can only run Scrapy spiders (unless you use Docker).

Scrapyd

Scrapyd is an open source tool that allows you to deploy Scrapy spiders on a server and run them remotely using a JSON API, making it a great free alternative to Scrapy Cloud.

Scrapyd allows you to:

- Run Scrapy jobs.

- Pause & Cancel Scrapy jobs.

- Manage Scrapy project/spider versions.

- Access Scrapy logs remotely.
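
As a minimal sketch of what running and cancelling jobs through the JSON API can look like, the snippet below posts to Scrapyd's schedule.json and cancel.json endpoints using only the Python standard library. It assumes Scrapyd is listening on its default port 6800 on the same machine; the helper names and the project and spider names (my_project, my_spider) are placeholders for illustration.

```python
import json
import urllib.parse
import urllib.request

# Assumption: Scrapyd is running locally on its default port.
SCRAPYD_URL = "http://localhost:6800"


def build_request(endpoint, params, base_url=SCRAPYD_URL):
    """Build a form-encoded POST request for a Scrapyd endpoint such as schedule.json."""
    data = urllib.parse.urlencode(params).encode("utf-8")
    return urllib.request.Request(f"{base_url}/{endpoint}", data=data, method="POST")


def call_scrapyd(endpoint, params, base_url=SCRAPYD_URL):
    """Send the request and decode Scrapyd's JSON reply into a dict."""
    request = build_request(endpoint, params, base_url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


if __name__ == "__main__":
    try:
        # "my_project" and "my_spider" are placeholder names.
        reply = call_scrapyd("schedule.json", {"project": "my_project", "spider": "my_spider"})
        print(reply)
        if reply.get("status") == "ok":
            # Cancel the job again using the job id Scrapyd returned.
            print(call_scrapyd("cancel.json", {"project": "my_project", "job": reply["jobid"]}))
    except OSError as exc:
        print(f"Could not reach Scrapyd at {SCRAPYD_URL}: {exc}")
```

Splitting the request building from the network call keeps the sketch easy to test without a running server, and the same helper can be pointed at other endpoints such as listjobs.json via a GET request if you adapt it.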

The dashboard Scrapyd comes with is pretty limited.

However, with Scrapyd you can manage multiple servers from one central point by using a ready-made Scrapyd management tool such as ScrapeOps, by using an open source alternative, or by building your own.

Here you can check out the full Scrapyd docs and GitHub repo.

Again, you can run your Scrapy spiders for free if you combine Scrapyd with ScrapeOps and a free Vultr or DigitalOcean server.

The other option is to use a free Heroku server. However, by default the free Heroku server shuts down after 30 minutes, so if your scraping jobs often run longer than that you would need to create a script to keep it active.

Scrapyd has a couple of advantages and disadvantages versus Scrapy Cloud:

Advantages

- Price: When combined with a free Vultr or DigitalOcean server you can schedule your spiders to run in the cloud using Scrapyd for free, whereas Scrapy Cloud starts at $9 per month and rises from there.

- Unlimited Concurrent Jobs: With Scrapy Cloud you can only have 1 job running at a time per $9 Scrapy Unit, whereas with Scrapyd you can run as many jobs concurrently as your server can handle.

Disadvantages

- Only Scrapy: You can only use Scrapyd with Scrapy spiders.

- Tricky To Set Up: It can sometimes be a bit tricky to set up, secure, and link to a dashboard.

- Need Dashboard: Technically you can use Scrapyd without a 3rd party dashboard, but in practice you need to connect it to one.

Schedule via Cron

Another great free or cheap alternative to running your Scrapy spiders on Scrapy Cloud is scheduling jobs via a crontab.

This is a more DIY approach to deploying & scheduling your Scrapy jobs than the other methods, as you will need to get your hands dirty with SSH'ing into servers, setting up crontabs, and building a deployment flow from your GitHub account.

Using this method you will SSH into your server and edit the crontab file.

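Here you can create a cron job that runs a spider on a set schedule using the crontab syntax. For example, the following entry runs the spider once a day at 05:00 (an asterisk in the minute field would instead run it every minute between 05:00 and 05:59). The PATH line must include the directory where the scrapy executable is installed.

PATH=/usr/bin

0 5 * * * cd project_folder/project_name/ && scrapy crawl spider_name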

Using crontabs is a very common way to run scripts, so there is no reason why you can't run Scrapy spiders with them as well.
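
Because cron itself gives you no record of what happened, one option is to have the crontab entry call a small Python wrapper that runs the spider and writes a timestamped log file. The sketch below is one way to do that under stated assumptions: the folder names mirror the crontab example above, the logs folder and function names are hypothetical, and it relies on Scrapy's --logfile command-line option.

```python
import subprocess
import sys
from datetime import datetime
from pathlib import Path

# Hypothetical locations; adjust to your own server layout.
PROJECT_DIR = Path("project_folder/project_name")
LOG_DIR = Path("logs")  # created inside PROJECT_DIR


def build_command(spider, now, log_dir=LOG_DIR):
    """Return the scrapy command line with a timestamped log file path."""
    log_file = log_dir / f"{spider}_{now:%Y%m%d_%H%M%S}.log"
    return ["scrapy", "crawl", spider, "--logfile", str(log_file)]


def run_spider(spider):
    """Run the spider from the project folder and return its exit code."""
    (PROJECT_DIR / LOG_DIR).mkdir(parents=True, exist_ok=True)
    result = subprocess.run(build_command(spider, datetime.now()), cwd=PROJECT_DIR)
    return result.returncode


# Only run when called as a script with exactly one argument: the spider name.
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(run_spider(sys.argv[1]))
```

If you saved this as run_spider.py (a name chosen here for illustration), the crontab command would become python3 run_spider.py spider_name, and each run would leave its own log file to inspect later.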

Using this method does have its advantages and disadvantages versus Scrapy Cloud:

Advantages

- Price: When combined with a free Vultr or DigitalOcean server you can schedule your spiders to run in the cloud using a crontab for free, whereas Scrapy Cloud starts at $9 per month and rises from there.

- Unlimited Concurrent Jobs: With Scrapy Cloud you can only have 1 job running at a time per $9 Scrapy Unit, whereas with a crontab you can run as many jobs concurrently as your server can handle.

- Run Any Script: You can run any type of script or scraper with this method. So once you set it up, you can also use it to schedule scripts other than your scrapers.

Disadvantages

- Tricky To Set Up: This is the trickiest option to set up, as you need to SSH into the server and edit the crontab from the command line.

- Need Dashboard: Using this method you have no dashboard or visibility into when your scrapers will run, short of SSH'ing into your server and checking the crontab and logs.

More Scrapy Tutorials

That's it for our look at free and cheap Scrapy Cloud alternatives for running your Scrapy spiders. If you would like to learn more about Scrapy, then be sure to check out The Scrapy Playbook.