How to Scrape Bing With NodeJS Puppeteer

There are many popular search engines, and while Google often takes the spotlight, Microsoft's Bing, launched in 2009, remains a robust alternative.

Today, we'll explore how to build a Bing scraper using Puppeteer, a Node.js library that provides a high-level API to control Chrome or Chromium. We’ll walk through building a Bing search crawler to extract metadata from websites.

- TLDR: How to Scrape Bing

- How To Architect Our Scraper

- Understanding How To Scrape Bing

- Setting Up Our Bing Scraper

- Build A Bing Search Crawler

- Build A Bing Scraper

- Legal and Ethical Considerations

- Conclusion

TLDR - How to Scrape Bing

If you're pressed for time and need to scrape Bing quickly, here's a solution using Puppeteer.

- Set up a new project folder and add a

config.jsonfile containing your API key for any proxy service you might use. - Copy the provided JavaScript code and paste it into a new file.

- Run

node name_of_your_script.jsto execute the scraper.

const puppeteer = require('puppeteer');

const fs = require('fs');

const path = require('path');

async function scrapeBing(keyword, location = 'us', pages = 1) {

const browser = await puppeteer.launch({ headless: true });

const page = await browser.newPage();

for (let i = 0; i < pages; i++) {

const resultNumber = i * 10;

const url = `https://www.bing.com/search?q=${encodeURIComponent(keyword)}&first=${resultNumber}`;

await page.goto(url, { waitUntil: 'networkidle2' });

const results = await page.evaluate(() => {

const items = [];

document.querySelectorAll('li.b_algo h2 a').forEach(link => {

items.push({

title: link.innerText,

url: link.href,

});

});

return items;

});

saveResults(keyword, results, i);

}

await browser.close();

}

function saveResults(keyword, results, pageNumber) {

const filename = path.join(__dirname, `${keyword.replace(/\s+/g, '-')}-page-${pageNumber}.json`);

fs.writeFileSync(filename, JSON.stringify(results, null, 2), 'utf-8');

console.log(`Results saved to ${filename}`);

}

scrapeBing('learn JavaScript', 'us', 3);

To Modify:

- Pages: Change the number of pages you want to scrape in the

scrapeBingfunction call. - Keyword: Modify the

keywordargument in the function call to change the search term. - Location: If needed, you can modify the

locationparameter to simulate geolocated searches.

How To Architect Our Bing Scraper

Our Bing scraper project will involve two main components:

- Search Crawler: This part will scrape search results from Bing.

- Metadata Scraper: This component will gather metadata (like titles and descriptions) from the websites returned by the search results.

Key tasks include:

- Crawl Bing Search: Extract search result data such as titles and URLs.

- Paginate Through Results: Retrieve multiple pages of results.

- Store Results: Save the scraped data into files.

- Use Proxies (Optional): Utilize a proxy to avoid getting blocked by Bing.

Understanding How To Scrape Bing

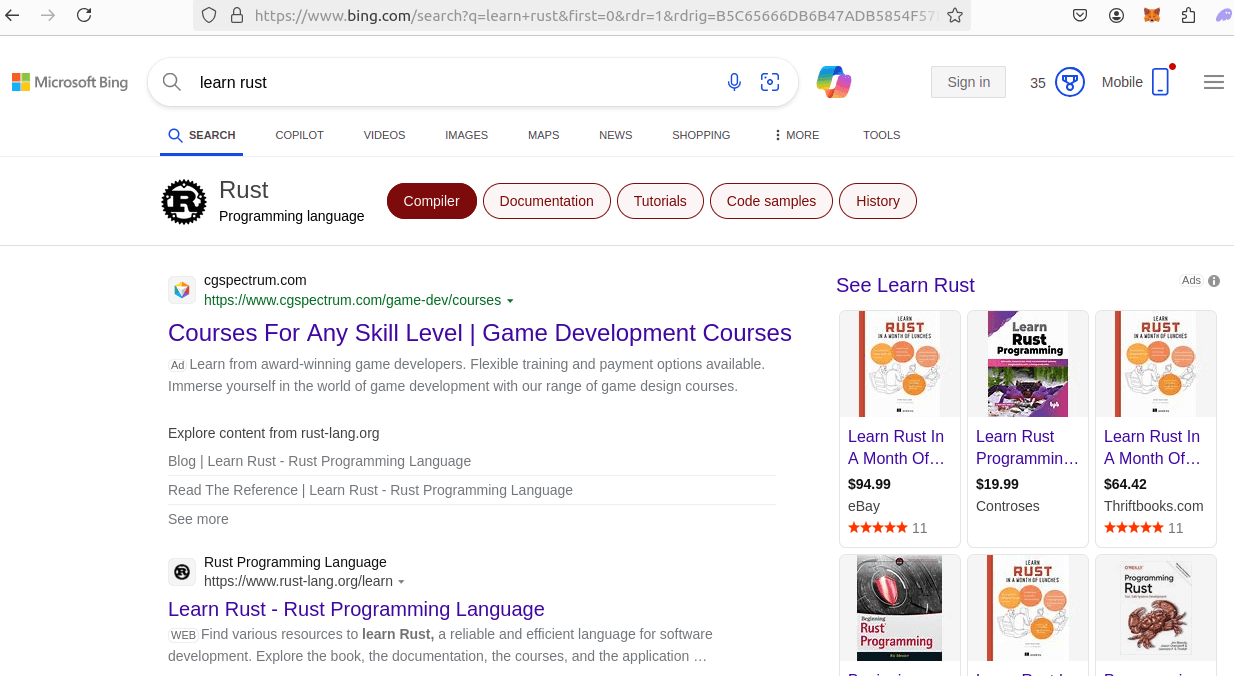

Step 1: Understanding Bing’s Search URL Structure

The format of a Bing search URL is simple:

https://www.bing.com/search?q=your+search+term

To paginate through results, we append a first parameter that indicates the result number to start from. For example, &first=10 would start the results from item 11.

Step 2: Extracting Data from Bing Search Results

We extract search results by selecting all h2 elements inside Bing's result list (li.b_algo). The URLs are contained within the a tags.

await page.evaluate(() => {

const results = [];

document.querySelectorAll('li.b_algo h2 a').forEach(link => {

results.push({ title: link.innerText, url: link.href });

});

return results;

});

Setting Up Our Bing Scraper

To get started, you’ll need to set up your environment.

Step 1: Install Node.js and Puppeteer

Install Node.js if you haven’t already. Then, in your project directory, initialize the project and install Puppeteer:

npm init -y

npm install puppeteer

You can also install a proxy service or library if you want to scrape anonymously.

Step 2: Build the Basic Bing Scraper

Start by writing a basic crawler that can extract search results for a given query.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.bing.com/search?q=learn+javascript');

const results = await page.evaluate(() => {

const items = [];

document.querySelectorAll('li.b_algo h2 a').forEach(link => {

items.push({ title: link.innerText, url: link.href });

});

return items;

});

console.log(results);

await browser.close();

})();

Build a Bing Search Crawler

Step 1: Add Pagination

To paginate through Bing search results, we modify the URL by adding the first parameter and iterate through the pages.

async function scrapeBing(keyword, location, pages = 1) {

const browser = await puppeteer.launch();

const page = await browser.newPage();

for (let i = 0; i < pages; i++) {

const resultNumber = i * 10;

const url = `https://www.bing.com/search?q=${encodeURIComponent(keyword)}&first=${resultNumber}`;

await page.goto(url, { waitUntil: 'networkidle2' });

const results = await page.evaluate(() => {

const items = [];

document.querySelectorAll('li.b_algo h2 a').forEach(link => {

items.push({ title: link.innerText, url: link.href });

});

return items;

});

console.log(results);

}

await browser.close();

}

scrapeBing('learn node.js', 'us', 5);

Step 2: Save Results to File

To make the data persistent, let’s save it as a JSON file.

const fs = require('fs');

const path = require('path');

function saveResults(keyword, results, pageNumber) {

const filename = path.join(__dirname, `${keyword.replace(/\s+/g, '-')}-page-${pageNumber}.json`);

fs.writeFileSync(filename, JSON.stringify(results, null, 2), 'utf-8');

console.log(`Results saved to ${filename}`);

}

Step 3: Add Proxy Support (Optional)

Now, we need to unlock the power of proxy. With the ScrapeOps Proxy API, we can bypass pretty much any anti-bot system. This proxy provides us with a new IP address in the country of our choosing.

We pass the following params into ScrapeOps: "api_key", "url", "country".

const getScrapeOpsUrl = (url, location = 'us') => {

const params = new URLSearchParams({

api_key: API_KEY,

url: url,

country: location,

});

const proxyUrl = `https://proxy.scrapeops.io/v1/?${params.toString()}`;

return proxyUrl;

};

Explanation:

- "api_key" holds your ScrapeOps API key.

- "url" is the target URL to scrape.

- "country" is the country you'd like your requests routed through.

- The function returns a properly configured URL for the ScrapeOps proxy.

Build a Bing Scraper

After retrieving the URLs from Bing’s search results, we now need to visit each of these URLs to extract metadata such as page titles and descriptions.

async function scrapeMetadata(url) {

const browser = await puppeteer.launch();

const page = await browser.newPage();

try {

await page.goto(url, { waitUntil: 'networkidle2' });

const metadata = await page.evaluate(() => {

const title = document.querySelector('title') ? document.querySelector('title').innerText : 'No title';

const description = document.querySelector('meta[name="description"]') ?

document.querySelector('meta[name="description"]').getAttribute('content') : 'No description';

return { title, description };

});

console.log(metadata);

} catch (error) {

console.error(`Failed to scrape ${url}:`, error);

} finally {

await browser.close();

}

}

Legal and Ethical Considerations

When scraping any website, including Bing, ensure that your actions comply with the website's terms of service. Review Bing’s robots.txt file to see which sections of the site are disallowed for crawlers.

Always avoid scraping data behind login walls or personal data that isn't publicly available.

Conclusion

Now you have a functional Bing scraper built using Puppeteer. You've learned how to:

- Scrape search results

- Paginate through results

- Extract metadata from linked websites

- Use Puppeteer to automate and scrape websites

Feel free to expand and improve on this project by adding concurrency, handling more complex anti-bot mechanisms, or integrating advanced storage solutions.

If you'd like to learn more from our "How To Scrape" series, check out the links below.