How To Make Playwright Undetectable

Websites deploy a variety of techniques to detect and block automated tools, ranging from simple measures like monitoring IP addresses and user-agent strings to more advanced methods involving behavioral analysis and fingerprinting. As anti-bot measures become more sophisticated, simply using Playwright is no longer enough to ensure seamless and undetected interactions with web pages.

In this article, we will learn how to make Playwright undetectable.

- TLDR - How To Make Playwright Undetectable

- Understanding Website Bot Detection Mechanisms

- How To Make Playwright Undetectable To Anti-Bots

- Strategies To Make Playwright Undetectable

- Testing Your Playwright Scraper

- Handling Errors and Captchas

- Why Make Playwright Undetectable

- Benefits of Making Playwright Undetectable

- Case Study: Evading Playwright Detection on G2

- Best Practices and Considerations

- Conclusion

- More Playwright Web Scraping Guides


TLDR: How to Make Playwright Undetectable

To make Playwright undetectable, you must customize browser settings, spoof user agent strings, disable automation flags, and use realistic interaction patterns. By doing this, you can reduce the likelihood of websites detecting your automated scripts.

We can see an example of implementing this in a Playwright script:

const { chromium } = require('playwright');

(async () => {

const browser = await chromium.launch({

headless: false,

args: ['--disable-blink-features=AutomationControlled']

});

const context = await browser.newContext({

userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

viewport: { width: 1280, height: 720 },

deviceScaleFactor: 1,

});

const page = await context.newPage();

await page.goto('https://cnn.com');

await browser.close();

})();

- Disable Automation Flags: We can use the --disable-blink-features=AutomationControlled argument to remove the automation flag, which can help prevent detection.

- Set Realistic Viewport and Device Characteristics: Configure the browser context to match typical user settings. We will set the viewport to a typical display so we don't raise any flags.

- Modify User Agent String: We can use different user agent strings to appear as a more commonly used browser.

Understanding Website Bot Detection Mechanisms

To effectively make Playwright undetectable, it's crucial to understand the techniques websites use to identify bots and automated scripts. This knowledge will help you implement strategies to avoid detection.

- IP Analysis and Rate Limiting

Websites monitor the number of requests coming from a single IP address over a specific period. The IP may be flagged as a potential bot if the number of requests exceeds a certain threshold.

Rate limiting is a common method to control traffic and reduce the likelihood of automated abuse. To mitigate this, you can use rotating proxies to distribute requests across multiple IP addresses.

- Browser Fingerprinting

Browser fingerprinting involves collecting information about a user's browser and device configuration to create a unique identifier. This includes data like browser version, operating system, installed plugins, screen resolution, and more. By comparing this fingerprint to known patterns, websites can identify automated scripts. To counteract this, you can modify your browser's fingerprint to mimic a real user more closely.

- Checking for Headless Browser Environments

Many bots run in headless browser environments, which lack a graphical user interface. Websites can detect headless browsers by looking for specific flags or characteristics unique to headless modes. Running Playwright in non-headless mode and spoofing characteristics typical of full browsers can help bypass this detection method.

- Analyzing User Behavior Patterns

Websites analyze user interactions to detect unusual patterns indicative of automation. This includes rapid clicking, consistent navigation paths, and uniform mouse movements. To avoid detection, you can simulate human-like interactions with realistic delays and erratic mouse movements, making your automation behavior appear more natural.

If a website detects your automation script, it can restrict your access in several ways. You may encounter:

- CAPTCHA challenges that interrupt your script's flow,

- IP blocking that prevents further requests from your address,

- account suspension if the site requires user accounts, and

- limited functionality that reduces the effectiveness of your automation efforts.

Understanding these risks is crucial for maintaining undetected and efficient Playwright scripts.
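
Because rate limiting is based on how many requests arrive from one IP in a given period, pacing your own requests is a useful complement to rotating proxies. The sketch below is only an illustration: createThrottle and its now and sleep options are hypothetical names introduced here, not part of Playwright.

```javascript
// Hypothetical helper (not a Playwright API): enforces a minimum gap between calls.
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

function createThrottle(minIntervalMs, { now = Date.now, sleep = defaultSleep } = {}) {
  let nextAllowed = 0; // earliest timestamp at which the next call may proceed

  return async function throttle() {
    const current = now();
    const wait = Math.max(0, nextAllowed - current);
    // Reserve the next slot before sleeping so concurrent callers queue up in order
    nextAllowed = Math.max(current, nextAllowed) + minIntervalMs;
    if (wait > 0) await sleep(wait);
    return wait; // how long this call was delayed, in milliseconds
  };
}

// Usage sketch (inside an async function, with a Playwright page):
//   const throttle = createThrottle(2000);
//   for (const url of urls) {
//     await throttle();
//     await page.goto(url);
//   }
```

The right interval depends on the target site, so treat the 2000 ms in the usage sketch as a placeholder rather than a recommendation.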

How To Make Playwright Undetectable To Anti-Bots

Different techniques can be used to make your Playwright scripts undetectable to websites’ anti-bot mechanisms.

Here are some of the most commonly used methods.

1. Setting User-Agent Strings

The User-Agent request header is a characteristic string that lets servers and network peers identify the application, operating system, vendor, and/or version of the requesting browser.

We can modify the user-agent string to mimic popular browsers, ensuring it matches the browser version and operating system. We can set the user-agent string in Playwright like this:

const context = await browser.newContext({

userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

});
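
Using one fixed user agent for every session can itself become a recognizable pattern, so we may want to choose from a small pool. The following is a minimal sketch under stated assumptions: pickUserAgent is a hypothetical helper, and the pool simply reuses two strings that appear in this article, which you would replace with current ones.

```javascript
// Illustrative pool: both strings appear in this article; replace them with current, real ones.
const USER_AGENTS = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36",
];

// Hypothetical helper: picks one entry at random; rng is injectable for testing.
function pickUserAgent(pool = USER_AGENTS, rng = Math.random) {
  if (pool.length === 0) throw new Error("User-agent pool is empty");
  return pool[Math.floor(rng() * pool.length)];
}

// Usage sketch (inside an async function, with a launched Playwright browser):
//   const context = await browser.newContext({ userAgent: pickUserAgent() });
```

Picking once per browser context, rather than once per request, keeps the identity stable for the lifetime of a session.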

2. Enabling WebGL and Hardware Acceleration

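We can ensure WebGL and accelerated rendering are enabled to replicate the typical capabilities of a human-operated web browser by passing specific arguments when launching our Chromium browser. Note that --use-gl=swiftshader selects SwiftShader, a software renderer, so WebGL remains available even on machines without a GPU.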

const browser = await chromium.launch({

args: [

'--enable-webgl',

'--use-gl=swiftshader',

'--enable-accelerated-2d-canvas'

]

});

3. Mimicking Human-Like Browser Environments

We can configure our browser context to reflect common user settings, such as viewport size, language, and time zone.

const context = await browser.newContext({

viewport: { width: 1280, height: 720 },

locale: 'en-US',

timezoneId: 'America/New_York'

});

4. Using Rotating Residential Proxies

Residential proxies are a type of proxy server that uses IP addresses assigned by Internet Service Providers (ISPs) to regular household users. These proxies differ from datacenter proxies, which use IP addresses provided by cloud service providers and are more easily detectable and blockable by websites. You can employ residential proxies that use real IP addresses from ISPs, making it harder for websites to detect and block your requests.

You can also use rotating residential proxies to change your IP addresses regularly to avoid multiple requests from the same IP, which can raise red flags.

const browser = await chromium.launch({

proxy: {

server: 'http://myproxy.com:3128',

username: 'usr',

password: 'pwd'

}

});

If you want a better understanding of residential proxies, consider exploring the article Residential Proxies Explained: How You Can Scrape Without Getting Blocked.
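
If your provider gives you a list of proxy endpoints rather than a single rotating gateway, you can rotate through them yourself. The sketch below shows one simple way to do that: createProxyRotator is a hypothetical helper, and the hosts and credentials are placeholders, not real endpoints.

```javascript
// Hypothetical proxy list: hosts and credentials are placeholders, not real endpoints.
const PROXIES = [
  { server: "http://proxy-1.example.com:3128", username: "usr", password: "pwd" },
  { server: "http://proxy-2.example.com:3128", username: "usr", password: "pwd" },
  { server: "http://proxy-3.example.com:3128", username: "usr", password: "pwd" },
];

// Returns a function that hands out proxies in round-robin order.
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error("At least one proxy is required");
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

// Usage sketch (inside an async function):
//   const { chromium } = require("playwright");
//   const nextProxy = createProxyRotator(PROXIES);
//   const browser = await chromium.launch({ proxy: nextProxy() });
```

Each returned object has the same shape as the proxy option shown earlier, so it can be passed to chromium.launch unchanged.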

5. Mimicking Human Behavior

We can mimic human behavior when interacting with a website to avoid detection. Introducing realistic delays, random mouse movements and natural scrolling is essential. Here are some techniques to achieve this:

const { chromium } = require("playwright");

(async () => {

// Launch a new browser instance

const browser = await chromium.launch({ headless: false }); // Set headless to true if you don't need to see the browser

const context = await browser.newContext();

const page = await context.newPage();

// Navigate to the target website and wait until the DOM content has loaded

await page.goto("https://www.saucedemo.com/", {

waitUntil: "domcontentloaded",

});

// Function to generate random delays between 50ms and 200ms

const getRandomDelay = () => Math.random() * (200 - 50) + 50;

// Type into the username field with a random delay to simulate human typing

await page.type("#user-name", "text", { delay: getRandomDelay() });

// Type into the password field with a random delay to simulate human typing

await page.type("#password", "text", { delay: getRandomDelay() });

// Click the login button with a random delay to simulate human click

await page.click("#login-button", { delay: getRandomDelay() });

// Scroll down the page to simulate reading content after login

await page.evaluate(() => {

window.scrollBy(0, window.innerHeight);

});

// Introduce random mouse movements to simulate human interaction

await page.mouse.move(Math.random() * 800, Math.random() * 600);

// Close the browser

await browser.close();

})();
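
In the script above, getRandomDelay() is evaluated once per call, so every keystroke within one page.type call shares the same delay. If we want the pause to vary from key to key, we can type one character at a time. This is a minimal sketch: humanType and randomBetween are hypothetical helpers, and the 50 to 200 ms range is carried over from the example above.

```javascript
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Random number in [min, max); rng is injectable so the helper can be tested.
function randomBetween(min, max, rng = Math.random) {
  return rng() * (max - min) + min;
}

// Hypothetical helper: focuses the field, then types one character at a time,
// pausing for a freshly drawn delay after each keystroke.
async function humanType(page, selector, text, options = {}) {
  const { min = 50, max = 200, rng = Math.random, sleep = defaultSleep } = options;
  await page.click(selector);
  for (const char of text) {
    await page.keyboard.type(char);
    await sleep(randomBetween(min, max, rng));
  }
}

// Usage sketch (inside an async function, with a Playwright page):
//   await humanType(page, "#user-name", "text");
```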

6. Using Playwright with Real Browsers

We can run Playwright with full browser environments rather than headless modes to reduce the likelihood of detection. You can launch Chromium or Firefox in headed mode as shown below:

const chromiumBrowser = await chromium.launch({ headless: false });

const firefoxBrowser = await firefox.launch({ headless: false });

Strategies To Make Playwright Undetectable

You can use one or more of the following strategies to make your Playwright scraper undetectable. Each strategy can be tailored to enhance your scraping effectiveness while minimizing detection risks.

1. Use Playwright Extra With Residential Proxies

Playwright Extra is a plugin framework for Playwright. Combined with the stealth plugin, it helps disguise Playwright scripts to make them appear as human-like as possible by modifying browser behavior to evade detection techniques like fingerprinting and headless browser detection.

The stealth plugin, puppeteer-extra-plugin-stealth, was originally developed for Puppeteer and is designed to enhance browser automation by minimizing detection. Below, we will see it in action with an example demonstrating how to use this plugin.

- We will start by installing the required libraries:

npm install playwright-extra

npm install puppeteer-extra-plugin-stealth

- We will navigate to bot.sannysoft.com, a website designed to test and analyze how well your browser or automation setup can evade detection by anti-bot systems.

We will also add a residential proxy to enhance our setup's stealth capabilities further. This comprehensive approach will help us ensure that our automated interactions closely mimic real user behavior, reducing the likelihood of detection.

const { chromium } = require("playwright-extra");

const stealth = require("puppeteer-extra-plugin-stealth")();

chromium.use(stealth);

// Replace with your residential proxy address and port

chromium.launch({ headless: true,

args: [ '--proxy-server=http://your-residential-proxy-address:port'] }).then(async (browser) => {

const page = await browser.newPage();

console.log("Testing the stealth plugin..");

await page.goto("https://bot.sannysoft.com", { waitUntil: "networkidle" });

await page.screenshot({ path: "stealth.png", fullPage: true });

console.log("All done, check the screenshot.");

await browser.close();

});

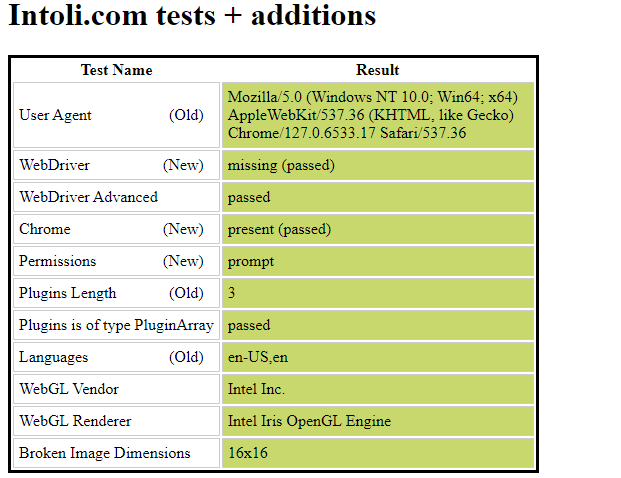

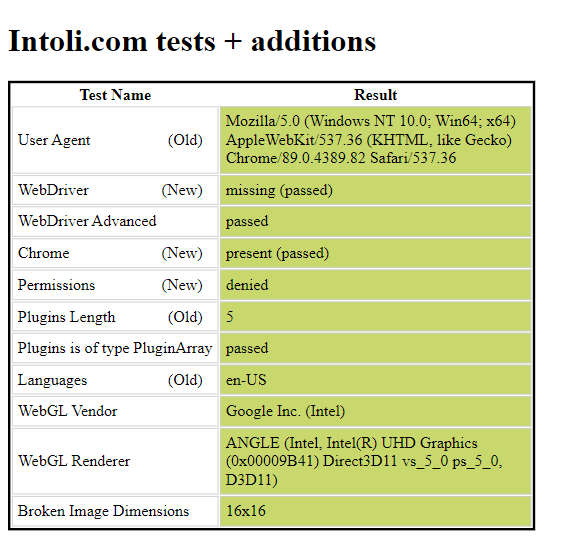

The screenshots above show the results of this comparison. Our Playwright script, which uses the stealth plugin and a residential proxy, successfully passed most of the website's tests.

In contrast, a Playwright script without any evasion mechanisms fails several tests presented by the website.

The comparison highlights the effectiveness of using the stealth plugin and residential proxy for evading detection.

Residential and mobile proxies are typically more expensive than data center proxies because they use IP addresses from real residential users or mobile devices. Providers usually bill by bandwidth used, so it is crucial to optimize resource usage.

2. Use Hosted Fortified Version of Playwright

Hosted and fortified versions of Playwright are optimized for scraping and include built-in anti-bot bypass mechanisms, including rotating residential proxies.

Below, we will explore how to integrate Playwright with Brightdata, a leading provider of hosted web scraping solutions and proxies.

const playwright = require("playwright");

// Here we will input the Username and Password we get from Brightdata

const { AUTH = "USER:PASS", TARGET_URL = "https://bot.sannysoft.com" } =

process.env;

async function scrape(url = TARGET_URL) {

if (AUTH == "USER:PASS") {

throw new Error(

`Provide Scraping Browsers credentials in AUTH` +

` environment variable or update the script.`

);

}

// This is our Brightdata Proxy URL endpoint that we can use

const endpointURL = `wss://${AUTH}@brd.superproxy.io:9222`;

/**

* This is where the magic happens. Here, we connect to a remote browser instance fortified by Brightdata.

* They utilize all the methods we talked about and more to make the Chromium instance evade detection effectively.

*/

const browser = await playwright.chromium.connectOverCDP(endpointURL);

// Now that we have a fortified Chromium browser instance, we can navigate to our desired URL.

try {

console.log(`Connected! Navigating to ${url}...`);

const page = await browser.newPage();

await page.goto(url, { timeout: 2 * 60 * 1000 });

console.log(`Navigated! Scraping page content...`);

const data = await page.content();

console.log(`Scraped! Data: ${data}`);

} finally {

await browser.close();

}

}

if (require.main == module) {

scrape().catch((error) => {

console.error(error.stack || error.message || error);

process.exit(1);

});

}

- The script starts by checking if the necessary authentication details for the Brightdata proxy service are provided. If not, it throws an error.

- Next, it connects to a Chromium browser instance through the Brightdata proxy using Playwright’s connectOverCDP method.

- Brightdata fortifies this connection, which means it’s configured to evade common bot detection techniques.

- Once connected, the script navigates to the target URL and retrieves the page content.

Even though this is a great solution, it is very expensive to implement on a large scale.

3. Fortify Playwright Yourself

Fortifying Playwright involves making your automated browser behavior appear more like a real user and less like a bot.

By implementing different techniques, you can make your Playwright scripts more robust and stealthy, reducing the likelihood of being detected as a bot by websites.

Let us visit bot.sannysoft with a Playwright script that we fortified:

const { chromium } = require("playwright");

(async () => {

const browser = await chromium.launch({

// Set arguments to mimic real browser

args: [

"--disable-blink-features=AutomationControlled",

"--disable-extensions",

"--disable-infobars",

"--enable-automation",

"--no-first-run",

"--enable-webgl",

],

headless: false,

});

const context = await browser.newContext({

// ignoreHTTPSErrors is a context option, so it is set here rather than in launch()

ignoreHTTPSErrors: true,

// Emulate user behavior by setting up userAgent and viewport

userAgent:

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36",

viewport: { width: 1280, height: 720 },

geolocation: { longitude: 12.4924, latitude: 41.8902 }, // Example: Rome, Italy

permissions: ["geolocation"],

});

const page = await context.newPage();

await page.goto("https://bot.sannysoft.com");

await page.screenshot({ path: "screenshot.png" });

await browser.close();

})();

| Flag | Description |
|---|---|
| --disable-blink-features=AutomationControlled | This flag prevents the JavaScript property navigator.webdriver from being set to true. Some websites use this property to detect if the browser is being controlled by automation tools like Puppeteer or Playwright. |
| --disable-extensions | This flag disables all Chrome extensions. Extensions can interfere with the behavior of the browser and the webpage, so it’s often best to disable them when automating browser tasks. |
| --disable-infobars | This flag disables infobars on the top of the browser window, such as the “Chrome is being controlled by automated test software” infobar. |
| --enable-automation | This flag tells Chrome that it is being controlled by automated software. Because it signals automation rather than hiding it, consider dropping it if a site still detects your script. |
| --no-first-run | This flag skips the first-run experience in Chrome, which is a series of setup steps shown the first time Chrome is launched. |
| --enable-webgl | This flag enables WebGL in the browser. |

We can now run our fortified Playwright script and inspect the screenshot it saves.

As demonstrated, we got the same result as we did with the stealth plugin. The configuration of our Playwright script can be tailored based on the specific website and the degree of its anti-bot defenses.

We can also enhance our applications by adding rotating residential proxies to make it harder for bot detectors to spot us.

By experimenting with various strategies tailored to our unique requirements, we can achieve an optimal setup robust enough to bypass the protective measures of a website we want to scrape.
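
One thing worth experimenting with is the --enable-automation flag, which Chromium documents as enabling an indication that the browser is controlled by automation. The sketch below shows how launch options could be assembled without it. It rests on an assumption that should be checked against your installed version: that Playwright passes --enable-automation by default, in which case the ignoreDefaultArgs launch option can remove it. buildLaunchOptions is a hypothetical helper name.

```javascript
// Hypothetical helper: assembles launch options for chromium.launch().
// Assumption: the installed Playwright version passes --enable-automation by default;
// ignoreDefaultArgs asks Playwright to leave that default flag out.
function buildLaunchOptions({ headless = false, extraArgs = [] } = {}) {
  const args = [
    "--disable-blink-features=AutomationControlled",
    "--no-first-run",
    ...extraArgs,
  ];
  return {
    headless,
    args: [...new Set(args)], // drop duplicate flags while keeping their order
    ignoreDefaultArgs: ["--enable-automation"],
  };
}

// Usage sketch (inside an async function):
//   const { chromium } = require("playwright");
//   const browser = await chromium.launch(buildLaunchOptions());
```

Re-running the bot.sannysoft.com check with and without this change is a quick way to see whether it makes a difference for your setup.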

4. Leverage ScrapeOps Proxy to Bypass Anti-Bots

Depending on your use case, leveraging a proxy solution with built-in anti-bot bypasses can be a simpler and more cost-effective approach compared to manually optimizing your Playwright scrapers. This way, you can focus on extracting the data you need without worrying about making your bot undetectable.

ScrapeOps Proxy Aggregator is another proxy solution that can make Playwright undetectable to bot detectors, even complex ones like Cloudflare.

- Sign Up for ScrapeOps Proxy Aggregator: Start by signing up for ScrapeOps Proxy Aggregator and obtain your API key.

- Installation and Configuration: Install Playwright and set up your scraping environment.

- Initialize Playwright with ScrapeOps Proxy: Set up Playwright to use ScrapeOps Proxy Aggregator by providing the proxy URL and authentication credentials.

Below, we will see how to use Playwright with ScrapeOps to get the ultimate web scraping application.

const { chromium } = require("playwright");

// Replace with your residential proxy details

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY";

(async () => {

// Launch browser with proxy settings

const browser = await chromium.launch({

headless: false,

args: [`--proxy-server=${PROXY_SERVER}`], // Only the proxy server URL

});

// Create a browser context with authentication for the proxy

const context = await browser.newContext({

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

const page = await context.newPage();

console.log("Testing residential proxy...");

await page.goto("https://bot.sannysoft.com", { waitUntil: "networkidle" });

await page.screenshot({ path: "screenshot.png" });

console.log("Screenshot saved as 'screenshot.png'");

await browser.close();

})();

ScrapeOps offers a variety of bypass levels to navigate through different anti-bot systems.

These bypasses range from generic ones, designed to handle a wide spectrum of anti-bot systems, to more specific ones tailored for platforms like Cloudflare and Datadome.

Each bypass level is crafted to tackle a certain complexity of anti-bot mechanisms, making it possible to scrape data from websites with varying degrees of bot protection.

| Bypass Level | Description | Typical Use Case |
|---|---|---|
| generic_level_1 | Simple anti-bot bypass | Low-level bot protections |
| generic_level_2 | Advanced configuration for common bot detections | Medium-level bot protections |
| generic_level_3 | High complexity bypass for tough detections | High-level bot protections |
| cloudflare | Specific for Cloudflare protected sites | Sites behind Cloudflare |
| incapsula | Targets Incapsula protected sites | Sites using Incapsula for security |
| perimeterx | Bypasses PerimeterX protections | Sites with PerimeterX security |
| datadome | Designed for DataDome protected sites | Sites using DataDome |

With ScrapeOps, you don’t have to worry about maintaining your script’s anti-bot bypassing techniques, as it handles these complexities for you.

Testing Your Playwright Scraper

To ensure your Playwright scraper is effectively fortified against bot detection, it’s essential to test it using fingerprinting tools.

Earlier, we demonstrated using websites like bot.sannysoft.com to test your Playwright code's stealthiness.

Another excellent tool for this purpose is bot.incolumitas.com, which provides a comprehensive analysis of your browser's fingerprint and highlights potential leaks that might reveal your browser identity.
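
Before visiting these test sites, we can also run a quick local check of a few properties that detection scripts commonly read. The following is a rough sketch, not a full fingerprint audit: collectAutomationSignals and findRedFlags are hypothetical helpers, and the three checks are illustrative examples rather than a complete list.

```javascript
// Hypothetical helper: reads a few commonly inspected properties from the page.
async function collectAutomationSignals(page) {
  return page.evaluate(() => ({
    webdriver: navigator.webdriver,
    userAgent: navigator.userAgent,
    languages: Array.from(navigator.languages || []),
  }));
}

// Flags values that often give automation away; the checks are illustrative only.
function findRedFlags(signals) {
  const flags = [];
  if (signals.webdriver === true) {
    flags.push("navigator.webdriver is true");
  }
  if (/HeadlessChrome/.test(signals.userAgent)) {
    flags.push("user agent contains HeadlessChrome");
  }
  if (!signals.languages || signals.languages.length === 0) {
    flags.push("navigator.languages is empty");
  }
  return flags;
}

// Usage sketch (inside an async function, with a Playwright page already on a site):
//   const signals = await collectAutomationSignals(page);
//   console.log(findRedFlags(signals));
```

An empty result only means these particular checks passed, so it does not replace testing against the fingerprinting tools mentioned above.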

Without Fortification

First, let's look at a basic Playwright script without any fortification techniques. We will test this script using the tools mentioned to get a behavioralClassificationScore.

This score will allow us to determine if we are being detected as human or a bot.

const playwright = require("playwright");

function sleep(ms) {

return new Promise((resolve) => setTimeout(resolve, ms));

}

/**

* This is obviously not the best approach to

* solve the bot challenge. Here comes your creativity.

*

* @param {*} page

*/

async function solveChallenge(page) {

// wait for form to appear on page

await page.waitForSelector("#formStuff");

// overwrite the existing text by selecting it

// with the mouse with a triple click

const userNameInput = await page.$('[name="userName"]');

await userNameInput.click({ clickCount: 3 });

await userNameInput.type("bot3000");

// same stuff here

const emailInput = await page.$('[name="eMail"]');

await emailInput.click({ clickCount: 3 });

await emailInput.type("bot3000@gmail.com");

await page.selectOption('[name="cookies"]', "I want all the Cookies");

await page.click("#smolCat");

await page.click("#bigCat");

// register the dialog handler before submitting,

// so the dialog is not missed

page.on("dialog", async (dialog) => {

console.log(dialog.message());

await dialog.accept();

});

// submit the form

await page.click("#submit");

// wait for results to appear

await page.waitForSelector("#tableStuff tbody tr .url");

// just in case

await sleep(100);

// now update both prices

// by clicking on the "Update Price" button

await page.waitForSelector("#updatePrice0");

await page.click("#updatePrice0");

await page.waitForFunction(

'!!document.getElementById("price0").getAttribute("data-last-update")'

);

await page.waitForSelector("#updatePrice1");

await page.click("#updatePrice1");

await page.waitForFunction(

'!!document.getElementById("price1").getAttribute("data-last-update")'

);

// now scrape the response

let data = await page.evaluate(function () {

let results = [];

document.querySelectorAll("#tableStuff tbody tr").forEach((row) => {

results.push({

name: row.querySelector(".name").innerText,

price: row.querySelector(".price").innerText,

url: row.querySelector(".url").innerText,

});

});

return results;

});

console.log(data);

}

(async () => {

const browser = await playwright["chromium"].launch({

headless: false,

args: ["--start-maximized"],

});

const context = await browser.newContext({ viewport: null });

const page = await context.newPage();

await page.goto("https://bot.incolumitas.com/");

await solveChallenge(page);

await sleep(6000);

const new_tests = JSON.parse(

await page.$eval("#new-tests", (el) => el.textContent)

);

const old_tests = JSON.parse(

await page.$eval("#detection-tests", (el) => el.textContent)

);

console.log(new_tests);

console.log(old_tests);

//await page.close();

await browser.close();

})();

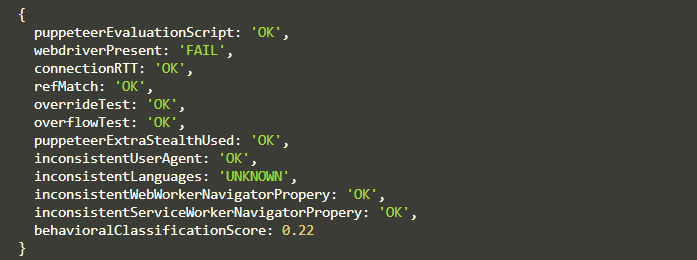

As you can see from the result above, we get a behavioralClassificationScore of 22% human, indicating that the script is likely to be detected as a bot.

This low score reflects that the script's behavior does not closely mimic that of a real human user, making it more susceptible to bot detection mechanisms.

With Fortification

Now, let's see a fortified version of the Playwright script:

We will be implementing different enhancements to make the script's behavior appear more like that of a real human user.

Here are the key fortification techniques implemented:

- Random Mouse Movements:

  - Adding random mouse movements simulates the unpredictable nature of human mouse behavior.

  - This is achieved by moving the mouse cursor to random positions on the screen with slight pauses between movements.

- Delays Between Actions:

  - Introducing delays between key presses, clicks, and dialog interactions mimics the natural time a human takes to perform these actions.

  - This includes delays between typing characters, clicking elements, and handling dialog boxes.

- User Agent and Context Configuration:

  - Setting a common user agent helps in blending the browser's identity with real user patterns.

  - The browser context is configured with typical user settings, such as geolocation, permissions, and locale.

- Browser Launch Arguments:

  - Modifying the browser launch arguments to disable features that can reveal automation, such as --disable-blink-features=AutomationControlled, --disable-extensions, --disable-infobars, --enable-automation, and --no-first-run.

  - These arguments help make the browser appear more like a standard user browser.

Here's the fortified version of the Playwright script:

const playwright = require("playwright");

function sleep(ms) {

return new Promise((resolve) => setTimeout(resolve, ms));

}

async function randomMouseMove(page) {

for (let i = 0; i < 5; i++) {

await page.mouse.move(Math.random() * 1000, Math.random() * 1000);

await sleep(100);

}

}

async function solveChallenge(page) {

await page.evaluate(() => {

const form = document.querySelector("#formStuff");

form.scrollIntoView();

});

await sleep(1000);

const userNameInput = await page.$('[name="userName"]');

await userNameInput.click({ clickCount: 3 });

for (let char of "bot3000") {

await userNameInput.type(char);

await sleep(500);

}

const emailInput = await page.$('[name="eMail"]');

await emailInput.click({ clickCount: 3 });

for (let char of "bot3000@gmail.com") {

await emailInput.type(char);

await sleep(600);

}

await page.selectOption('[name="cookies"]', "I want all the Cookies");

await sleep(1200);

await page.click("#smolCat");

await sleep(900);

// Register the dialog handler before submitting so the dialog is not missed

page.on("dialog", async (dialog) => {

console.log(dialog.message());

await sleep(2000); // add delay before accepting the dialog

await dialog.accept();

});

await page.click("#submit");

await page.waitForSelector("#tableStuff tbody tr .url");

await sleep(100);

await page.waitForSelector("#updatePrice0");

await page.click("#updatePrice0");

await page.waitForFunction(

'!!document.getElementById("price0").getAttribute("data-last-update")'

);

await sleep(1000);

await page.waitForSelector("#updatePrice1");

await page.click("#updatePrice1");

await page.waitForFunction(

'!!document.getElementById("price1").getAttribute("data-last-update")'

);

await sleep(800);

let data = await page.evaluate(function () {

let results = [];

document.querySelectorAll("#tableStuff tbody tr").forEach((row) => {

results.push({

name: row.querySelector(".name").innerText,

price: row.querySelector(".price").innerText,

url: row.querySelector(".url").innerText,

});

});

return results;

});

console.log(data);

}

(async () => {

const browser = await playwright["chromium"].launch({

headless: false,

args: [

"--start-maximized",

"--disable-blink-features=AutomationControlled",

"--disable-extensions",

"--disable-infobars",

"--enable-automation",

"--no-first-run",

],

});

const context = await browser.newContext({

viewport: null,

userAgent:

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",

geolocation: { longitude: 12.4924, latitude: 41.8902 },

permissions: ["geolocation"],

locale: "en-US",

});

const page = await context.newPage();

await page.goto("https://bot.incolumitas.com/");

await randomMouseMove(page); // add random mouse movements before solving the challenge

await solveChallenge(page);

await sleep(6000);

const new_tests = JSON.parse(

await page.$eval("#new-tests", (el) => el.textContent)

);

const old_tests = JSON.parse(

await page.$eval("#detection-tests", (el) => el.textContent)

);

console.log(new_tests);

console.log(old_tests);

await browser.close();

})();

We’ve made several enhancements to a script that interacts with a webpage using the Playwright library. The goal was to make the script’s behavior appear more like that of a real human user on the webpage.

- Firstly, we introduced delays between key presses and clicks to simulate the time a real user might take to perform these actions. We also added random mouse movements across the page to mimic a user’s cursor movements.

- Secondly, we added a delay before accepting any dialog boxes that appear on the webpage, simulating the time a real user might take to read and respond to the dialog.

- Lastly, we set a common user agent for the browser to make it appear more like a typical user’s browser.

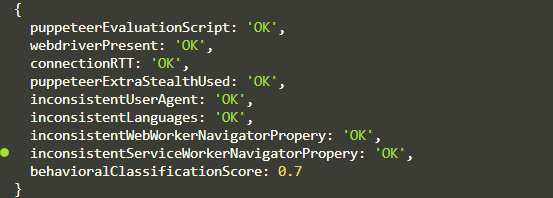

These enhancements significantly improved the script’s ability to mimic a real user’s behavior. In our case, our behavioralClassificationScore comes out to be 70% human, which is quite good considering the simplicity of our script.

Our script is now less likely to be detected as a bot by the webpage’s bot detection mechanisms.

Please note that this is a basic approach, and some websites may have more sophisticated bot detection mechanisms. You might need to consider additional strategies like integrating residential and mobile proxies.
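
The random mouse movements in the fortified script jump straight between random positions. A possible refinement is to move toward a target through several slightly offset intermediate points. This is a minimal sketch of that idea: jitteredPath and moveMouseAlong are hypothetical helpers, and the step count, jitter, and pause values are arbitrary starting points.

```javascript
// Hypothetical helper: builds intermediate points between two positions,
// adding a small random offset to every point except the last.
function jitteredPath(from, to, steps = 10, jitter = 5, rng = Math.random) {
  const points = [];
  for (let i = 1; i <= steps; i++) {
    const t = i / steps;
    const isLast = i === steps;
    const dx = isLast ? 0 : (rng() - 0.5) * 2 * jitter;
    const dy = isLast ? 0 : (rng() - 0.5) * 2 * jitter;
    points.push({
      x: from.x + (to.x - from.x) * t + dx,
      y: from.y + (to.y - from.y) * t + dy,
    });
  }
  return points;
}

// Moves the mouse along the path with a short pause between points.
async function moveMouseAlong(page, points, pauseMs = 20) {
  for (const { x, y } of points) {
    await page.mouse.move(x, y);
    await new Promise((resolve) => setTimeout(resolve, pauseMs));
  }
}

// Usage sketch (inside an async function, with a Playwright page):
//   const path = jitteredPath({ x: 0, y: 0 }, { x: 400, y: 300 });
//   await moveMouseAlong(page, path);
```

Whether this changes the behavioralClassificationScore is something to measure on the test page rather than assume.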

Handling Errors and CAPTCHAs

Playwright is a powerful tool for automating browser interactions, but like any tool, it’s not immune to errors or challenges such as CAPTCHAs.

In this section, we’ll explore how to handle errors and CAPTCHAs when using Playwright.

Handling Errors

Errors can occur at any point during the execution of your script. Handling these errors is important so your script can recover or exit cleanly.

Here’s an example of how you might handle errors in Playwright:

const playwright = require('playwright');

(async () => {

let browser;

try {

browser = await playwright['chromium'].launch();

const context = await browser.newContext();

const page = await context.newPage();

await page.goto('https://cnn.com');

// We can perform our task here

} catch (error) {

console.error('An error occurred:', error);

} finally {

// Close the browser even if an error occurred, so no process is left running

if (browser) await browser.close();

}

})();

We can also modify the script to retry the operation a specified number of times. If the operation fails repeatedly, the script will log an error message and terminate.

const playwright = require('playwright');

const MAX_RETRIES = 5; // Maximum number of retries

async function run() {

let retries = 0;

while (retries < MAX_RETRIES) {

try {

const browser = await playwright['chromium'].launch();

const context = await browser.newContext();

const page = await context.newPage();

await page.goto('https://cnn.com');

// Perform actions...

await browser.close();

// If the actions are performed successfully, break the loop

break;

} catch (error) {

console.error('An error occurred:', error);

console.log('Retrying...');

retries++;

if (retries === MAX_RETRIES) {

console.log('Maximum retries reached. Exiting.');

break;

}

}

}

}

run();
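
The loop above retries immediately and only closes the browser when an attempt succeeds. One possible variation, sketched here under stated assumptions, waits longer after each failure and leaves cleanup to the task itself. withRetries is a hypothetical helper, and the doubling delay is just one common backoff choice.

```javascript
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: runs an async task up to maxRetries times,
// doubling the wait after each failed attempt.
async function withRetries(task, options = {}) {
  const { maxRetries = 5, baseDelayMs = 1000, sleep = defaultSleep } = options;
  let lastError;
  for (let attempt = 1; attempt <= maxRetries; attempt++) {
    try {
      return await task(attempt);
    } catch (error) {
      lastError = error;
      if (attempt < maxRetries) {
        await sleep(baseDelayMs * 2 ** (attempt - 1));
      }
    }
  }
  throw lastError; // every attempt failed
}

// Usage sketch (inside an async function, assumes Playwright is installed):
//   const playwright = require("playwright");
//   await withRetries(async () => {
//     const browser = await playwright.chromium.launch();
//     try {
//       const page = await browser.newPage();
//       await page.goto("https://cnn.com");
//     } finally {
//       await browser.close(); // runs whether the attempt succeeded or failed
//     }
//   });
```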

Handling CAPTCHAs

CAPTCHAs are designed to prevent automated interactions, so they can pose a significant challenge to a Playwright script.

There are several strategies you can use to handle CAPTCHAs:

- Avoidance: Some sites may present a CAPTCHA challenge after a certain number of actions have been performed in a short period of time. By introducing the different methods that we discussed above in your Playwright script, you may be able to avoid triggering the CAPTCHA.

- Manual Intervention: If a CAPTCHA does appear, you could pause the execution of your script and wait for a human to solve it manually.

- Third-Party Services: Several third-party services, such as 2Captcha and Anti-Captcha, provide APIs to solve CAPTCHAs. These services generally work by sending the CAPTCHA image to a server, where a human or advanced OCR software solves it and sends back the solution.

Here’s an example of how you might use such a service in your Playwright script:

const playwright = require('playwright');

const axios = require('axios'); // for making HTTP requests

// Replace with your 2Captcha API key

const captchaApiKey = 'YOUR_2CAPTCHA_API_KEY';

async function solveCaptcha(captchaImage) {

// Submit the image as form data; json=1 asks 2Captcha for a JSON response

const response = await axios.post(

'https://2captcha.com/in.php',

new URLSearchParams({

method: 'base64',

key: captchaApiKey,

body: captchaImage,

json: '1',

})

);

if (response.data.status !== 1) {

throw new Error('Failed to submit CAPTCHA for solving');

}

// Poll the 2Captcha service for the solution

while (true) {

const solutionResponse = await axios.get(

`https://2captcha.com/res.php?key=${captchaApiKey}&action=get&id=${response.data.request}&json=1`

);

if (solutionResponse.data.status === 1) {

return solutionResponse.data.request;

}

// Any reply other than "not ready" is an error, so stop polling

if (solutionResponse.data.request !== 'CAPCHA_NOT_READY') {

throw new Error(`CAPTCHA solving failed: ${solutionResponse.data.request}`);

}

// If the CAPTCHA is not solved yet, wait for a few seconds before polling again

await new Promise(resolve => setTimeout(resolve, 5000));

}

}

(async () => {

const browser = await playwright['chromium'].launch();

const context = await browser.newContext();

const page = await context.newPage();

await page.goto('https://example.com');

// If a CAPTCHA appears...

const captchaElement = await page.$('#captcha');

if (captchaElement) {

// Take a screenshot of the CAPTCHA image (returned as a Buffer) and encode it as base64

const captchaImage = (await captchaElement.screenshot()).toString('base64');

// Solve the CAPTCHA

const captchaSolution = await solveCaptcha(captchaImage);

// Enter the CAPTCHA solution

await page.fill('#captchaSolution', captchaSolution);

}

await browser.close();

})();

In this example, when a CAPTCHA is detected, the script takes a screenshot of the CAPTCHA image and sends it to the 2Captcha service for solving.

It then polls the 2Captcha service for the solution and enters it on the page. Please be aware that this solution depends highly on the website you are trying to access.
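The manual intervention strategy listed earlier can be sketched in a similar way. The helper below is only an illustration under stated assumptions: `waitForManualSolve` is a hypothetical name, `#captcha` is the same placeholder selector used in the example above, and we assume the site removes the CAPTCHA element from the DOM once it has been solved. It also only makes sense when the browser is launched with `headless: false`, so that a person can see and solve the challenge.

```javascript
// Hypothetical helper: pause until a human solves the CAPTCHA in a headed browser.
// `page` is a Playwright Page; '#captcha' is a placeholder selector.
async function waitForManualSolve(page, selector = '#captcha', timeoutMs = 120000) {
  const captcha = await page.$(selector);
  if (!captcha) {
    // No CAPTCHA on the page, so there is nothing to wait for
    return false;
  }
  console.log('CAPTCHA detected. Please solve it in the browser window...');
  // Resolves once the element is removed from the DOM; throws if the timeout expires
  await page.waitForSelector(selector, { state: 'detached', timeout: timeoutMs });
  return true;
}

// Usage inside a script:
// await page.goto('https://example.com');
// await waitForManualSolve(page);
// // Continue scraping here...
```

If the site hides the CAPTCHA instead of removing it, `state: 'hidden'` may be a better fit than `state: 'detached'`.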

Why Make Playwright Undetectable

Making Playwright undetectable matters for several practical reasons.

Automation Resistance

The first reason lies in the phenomenon known as Automation Resistance. Websites today are more intelligent than ever. They have mechanisms in place to block or limit automated traffic. This is primarily to prevent spamming, abuse, and to ensure a fair usage policy for all users. However, this poses a significant challenge for legitimate automation tasks.

For instance, if you’re running an automated script to test the functionality of a website, the last thing you want is for your script to be blocked or limited. This is where a stealthy Playwright configuration comes into play.

Making Playwright undetectable ensures that your automation tasks can run smoothly without being hindered by the website’s anti-automation measures.

Data Collection

The second reason is related to Data Collection. In the age of information, data is king. However, access to this data is often restricted or monitored. Being undetectable is a significant advantage for tasks that involve data collection or web scraping.

If a website detects that a bot is scraping its data, it might block access or serve misleading information. Therefore, making Playwright undetectable allows for uninterrupted scraping, ensuring you can collect the data you need without any obstacles.

Testing

The third reason pertains to Testing scenarios. When testing a website, it’s crucial to simulate a real user’s browser as closely as possible. If a website detects that it’s being accessed by an automated script, it might behave differently, leading to inaccurate test results.

Making Playwright undetectable allows the automation script to mimic a real user’s browser without raising any automation flags. This ensures that the testing environment is as close to the real user experience as possible, leading to more accurate and reliable test results.

In conclusion, an undetectable Playwright setup helps you overcome automation resistance, collect data without interruption, and run accurate tests. As we continue to rely on web automation for various tasks, the ability to remain undetectable will only become more valuable.

Benefits of Making Playwright Undetectable

A stealthy Playwright setup offers significant advantages. It provides improved access to web content, bypassing measures that limit or block automated access. This is particularly beneficial for web scraping or testing tasks, allowing for efficient data collection and smooth operation.

Furthermore, it creates an accurate testing environment by mimicking a real user’s browser without detection, leading to reliable and precise test results.

Moreover, a stealthy setup reduces the risk of being identified as a bot, thereby minimizing the chance of IP bans or rate limiting.

This ensures the uninterrupted execution of automation tasks, making Playwright an invaluable tool in web automation.

Case Study: Evading Playwright Detection on G2

Many sites employ sophisticated anti-scraping mechanisms to detect and block automated scripts. G2, a popular platform for software reviews, employs various measures to prevent automated access to its product categories pages.

In this case study, we will explore the experience of scraping the G2 categories page using Playwright, comparing the results of a bare minimum script with those of a fortified version.

Our goal is to scrape the product information without getting blocked.

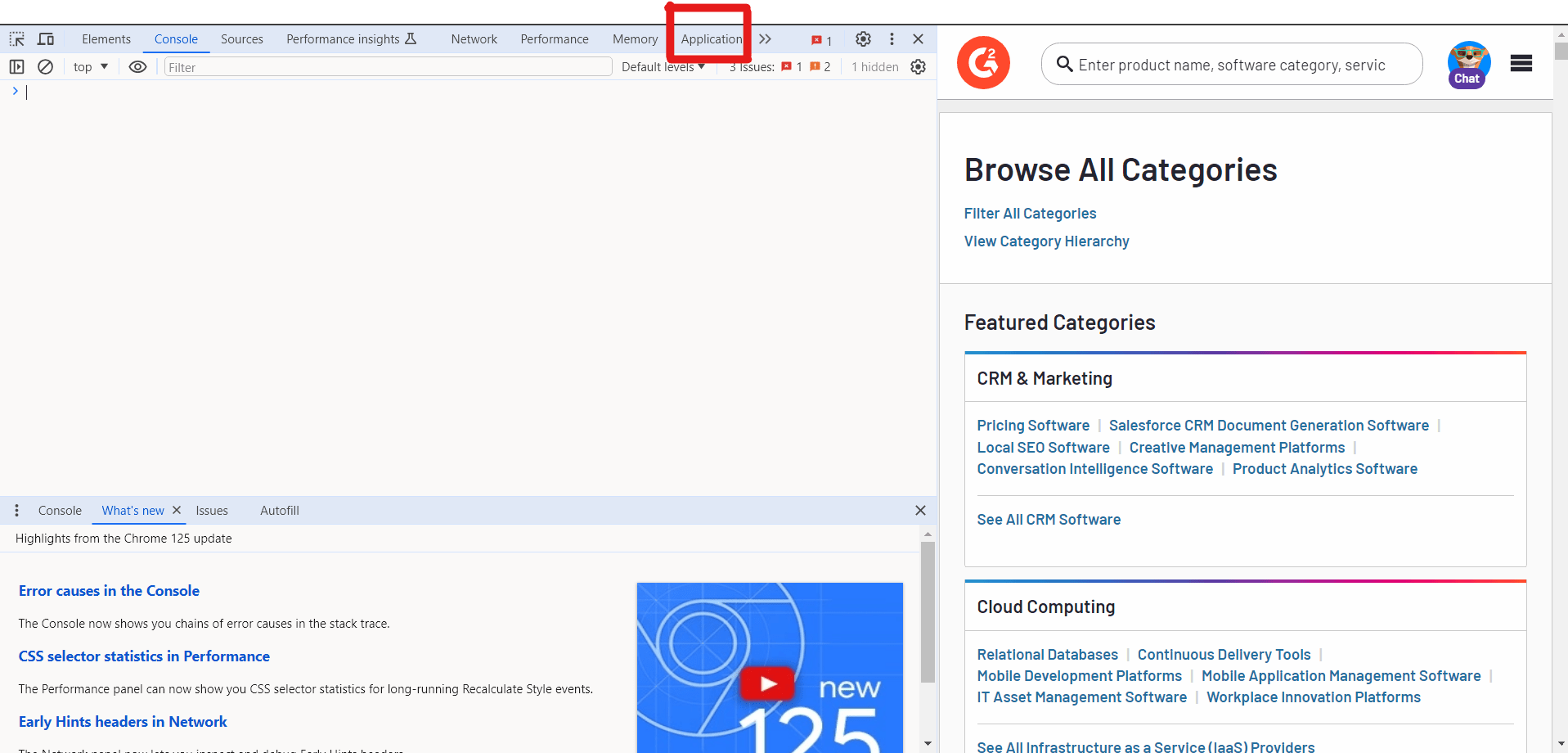

Step 1: Standard Playwright Setup for Scraping G2

For our first approach, we will use a basic Playwright script to navigate to the G2 categories page and capture a screenshot:

const playwright = require("playwright");

(async () => {

const browser = await playwright.firefox.launch({ headless: false });

const context = await browser.newContext();

const page = await context.newPage();

await page.goto("https://www.g2.com/categories");

await page.screenshot({ path: "product-page.png" });

await browser.close();

})();

This is a typical setup for using Playwright to scrape G2's product page.

- It launches a new Chromium browser, opens a new page, and navigates to G2 product page.

- In the standard setup, no specific configurations are made to evade detection.

- This means that the website will be detected.

This is the output:

This script failed to retrieve the desired information. The page encountered CAPTCHA challenges. This result highlighted the limitations of a bare minimum approach, which does not mimic real user behavior.

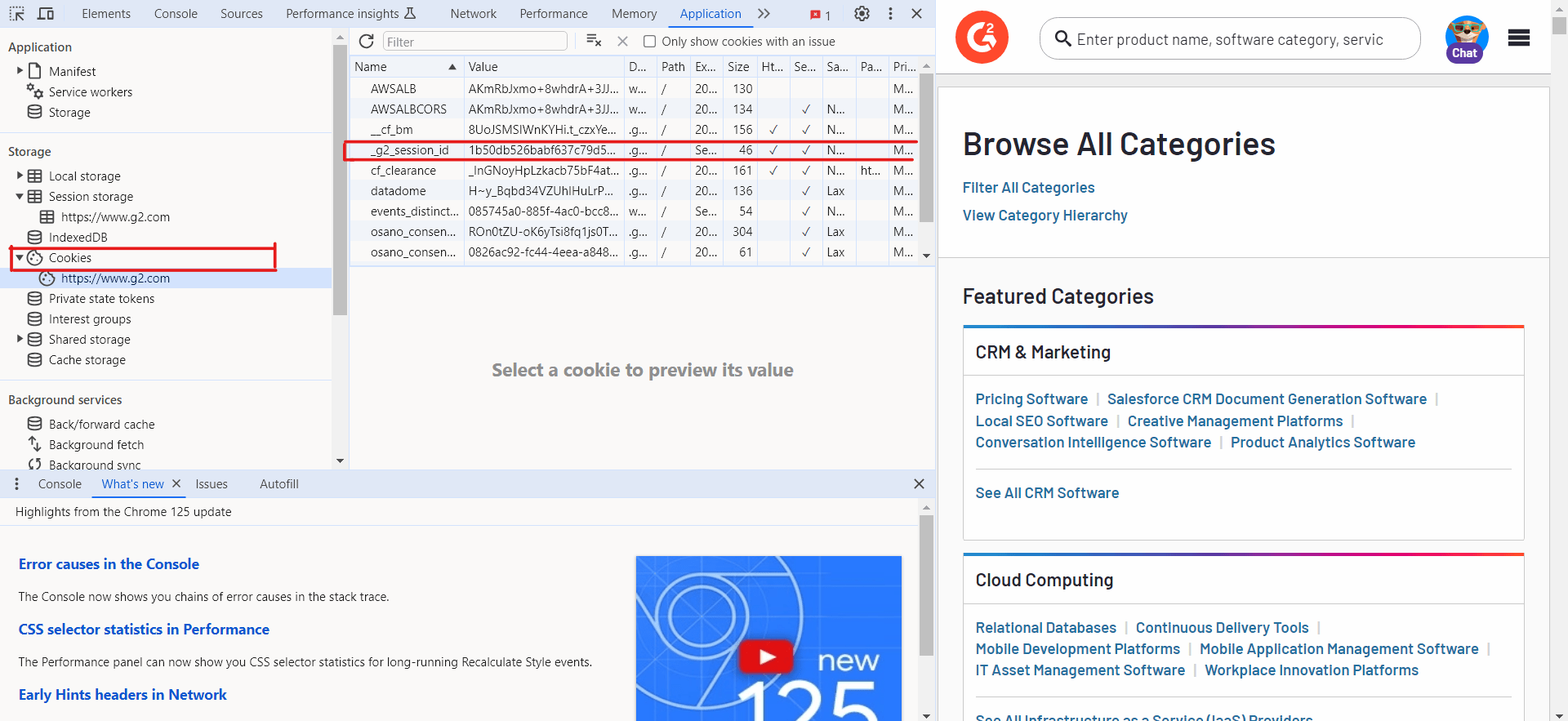

Step 2: Fortified Playwright Script

To overcome these challenges, we fortified our script by modifying the browser context and setting up session cookies. These modifications aim to make our automated session appear more like a real user's.

Finding Session Cookies

- Open Browser Developer Tools: Navigate to the G2 categories page in a real browser (e.g., Chrome or Firefox).

- Access Developer Tools: Right-click on the page and select "Inspect" or press Control + Shift + I to open the Developer Tools.

- Navigate to Application/Storage: In Developer Tools, go to the "Application" tab in Chrome or "Storage" tab in Firefox.

- Locate Cookies: Under "Cookies," select the https://www.g2.com entry. Here, you will see a list of cookies set by the site.

- Copy Session Cookie: Find the cookie named _g2_session_id. Copy its value, as this will be used in your Playwright script.

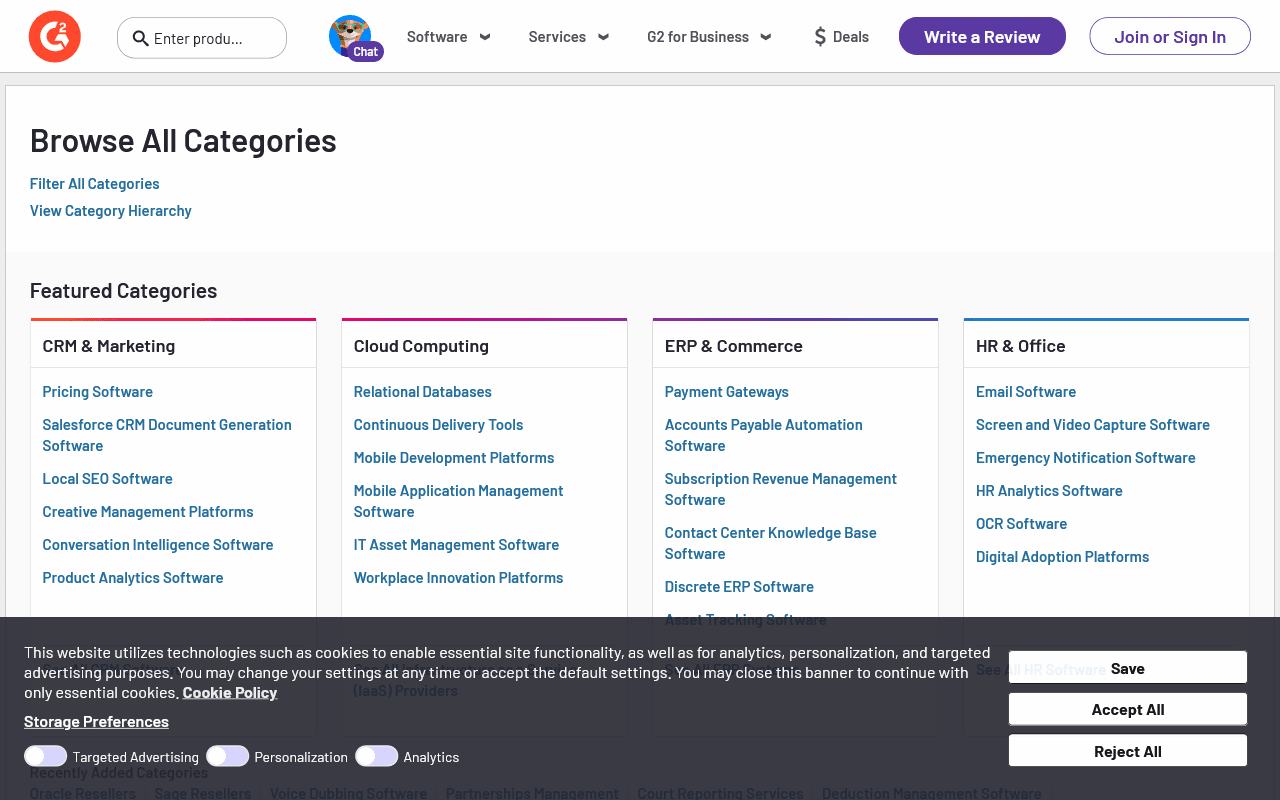

Here's the fortified Playwright script that includes the browser context modification and session cookies:

const playwright = require("playwright");

(async () => {

const browser = await playwright.firefox.launch({ headless: false });

const context = await browser.newContext({

userAgent:

"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:127.0) Gecko/20100101 Firefox/127.0",

viewport: { width: 1280, height: 800 },

locale: "en-US",

geolocation: { longitude: 12.4924, latitude: 41.8902 },

permissions: ["geolocation"],

extraHTTPHeaders: {

"Accept-Language": "en-US,en;q=0.9",

"Accept-Encoding": "gzip, deflate, br",

Referer: "https://www.g2.com/",

},

});

// Set the session ID cookie

await context.addCookies([

{

name: "_g2_session_id",

value: "0b484c21dba17c9e2fff8a4da0bac12d",

domain: "www.g2.com",

path: "/",

},

]);

const page = await context.newPage();

await page.goto("https://www.g2.com/categories");

await page.screenshot({ path: "product-page.png" });

await browser.close();

})();
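The session cookie value in the script above is hardcoded, so the script has to be edited every time the session expires. One possible alternative, sketched below, is to read the value from an environment variable. `buildSessionCookie` and the `G2_SESSION_ID` variable name are hypothetical choices made for this example; the cookie name, domain, and path are the ones used in the script above.

```javascript
// Build the G2 session cookie from an environment variable instead of a hardcoded value.
// G2_SESSION_ID is a hypothetical variable name chosen for this sketch.
function buildSessionCookie(env = process.env) {
  const value = env.G2_SESSION_ID;
  if (!value) {
    throw new Error('Set G2_SESSION_ID to the _g2_session_id value copied from your browser');
  }
  return {
    name: '_g2_session_id',
    value,
    domain: 'www.g2.com',
    path: '/',
  };
}

// Usage inside the fortified script:
// await context.addCookies([buildSessionCookie()]);
```

The script can then be run with something like `G2_SESSION_ID=<copied value> node script.js`, which also keeps the session value out of version control.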

Outcome

With the fortified script, the page loaded correctly, and we successfully bypassed the initial bot detection mechanisms. The addition of user agent modification and session cookies helped in simulating a real user session, which was crucial for avoiding detection and scraping the necessary data.

Comparison

- Bare Minimum Script : Failed to bypass bot detection, leading to incomplete page loads and CAPTCHA challenges.

- Fortified Script : Successfully mimicked a real user, allowing us to load the page and scrape data without interruptions.

Best Practices and Considerations

Ethical Usage and Legal Implications

Respecting the website’s terms of service and privacy policies is crucial when scraping. Unauthorized data extraction can lead to legal consequences. Always seek permission when necessary and avoid scraping sensitive information.

Balancing Scraping Speed and Stealth

While speed is important in scraping, sending requests too quickly can lead to detection and blocking. To maintain stealth, implement delays between requests, mimic human behavior, and use rotating IP addresses.
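As a rough illustration of adding delays between requests, the sketch below pauses for a random interval rather than a fixed one, since perfectly regular timing is itself a pattern. `randomBetween` and `humanPause` are hypothetical helper names, and the default range of 1 to 4 seconds is an assumption, not a recommended value for any particular site.

```javascript
// Return a random integer between minMs and maxMs (inclusive)
function randomBetween(minMs, maxMs) {
  return Math.floor(Math.random() * (maxMs - minMs + 1)) + minMs;
}

// Pause for a random interval and resolve with the delay that was used
function humanPause(minMs = 1000, maxMs = 4000) {
  const delay = randomBetween(minMs, maxMs);
  return new Promise((resolve) => setTimeout(() => resolve(delay), delay));
}

// Usage between page visits (assumes `page` and `urls` already exist):
// for (const url of urls) {
//   await page.goto(url);
//   await humanPause();
// }
```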

Monitoring and Adjusting Strategies for Evolving Bot Detection Techniques

Bot detection techniques are constantly evolving. Regularly monitor your scraping strategies and adjust them as needed. Keep an eye on changes in website structures and update your scraping code accordingly.
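One simple way to monitor a scraper is to check each response for signs of blocking and log when they appear. The sketch below is a heuristic only: `looksBlocked` is a hypothetical helper, and the status codes and text markers are assumptions that will need adjusting per site, since a page can mention "captcha" without actually blocking you.

```javascript
// Assumed signals of blocking; adjust these for the site you are scraping
const BLOCK_STATUS_CODES = new Set([403, 429]);
const BLOCK_MARKERS = ['captcha', 'access denied', 'unusual traffic'];

// Return true when the HTTP status or page text suggests the request was blocked
function looksBlocked(status, html) {
  if (BLOCK_STATUS_CODES.has(status)) {
    return true;
  }
  const text = html.toLowerCase();
  return BLOCK_MARKERS.some((marker) => text.includes(marker));
}

// Usage (assumes `page` already exists):
// const response = await page.goto('https://www.g2.com/categories');
// if (response && looksBlocked(response.status(), await page.content())) {
//   console.warn('Possible block detected, consider adjusting the strategy');
// }
```

Tracking how often this check fires over time gives an early signal that a site has changed its detection and that the script needs updating.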

Combining Multiple Techniques for Enhanced Effectiveness

For effective scraping, combine multiple techniques, such as using different user agents, IP rotation, and CAPTCHA-solving services. This can help bypass anti-scraping measures and improve the success rate of your scraping tasks.
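To show how user agent and IP rotation might be combined, here is a minimal sketch that picks a user agent and proxy for each session in round-robin order. Everything in it is illustrative: `sessionOptions` is a hypothetical helper, the proxy URLs are placeholders that do not point to real servers, and the user agent strings are examples that should be replaced with current ones matching the browser you launch.

```javascript
// Placeholder values: replace with your own user agents and proxy endpoints
const USER_AGENTS = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
];
const PROXIES = [
  'http://proxy1.example.com:8000',
  'http://proxy2.example.com:8000',
];

// Pick a user agent and proxy for the nth session in round-robin order
function sessionOptions(n) {
  return {
    launchOptions: { proxy: { server: PROXIES[n % PROXIES.length] } },
    contextOptions: { userAgent: USER_AGENTS[n % USER_AGENTS.length] },
  };
}

// Usage (assumes `chromium` has been required from 'playwright'):
// const { launchOptions, contextOptions } = sessionOptions(0);
// const browser = await chromium.launch(launchOptions);
// const context = await browser.newContext(contextOptions);
```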

Conclusion

In conclusion, keeping Playwright undetectable when automating web interactions involves combining several techniques. By customizing browser settings, spoofing user agent strings, disabling automation flags, and simulating realistic user behaviors, you can minimize the risk of detection by websites' anti-bot mechanisms.

Additionally, leveraging tools like residential proxies and browser fingerprinting evasion techniques further enhances the stealthiness of Playwright scripts. These strategies not only optimize scraping efficiency but also mitigate potential interruptions such as CAPTCHA challenges and IP blocking.

Ultimately, by implementing these measures effectively, you can maintain the reliability and effectiveness of Playwright's automated data retrieval processes.

Check the official Playwright documentation for more information.

More Playwright Web Scraping Guides

To learn more about Playwright and web scraping techniques, check out some of our other articles.