Playwright Guide - How to Bypass DataDome with Playwright

When scraping the web in production, we often run into roadblocks. These roadblocks are usually created by anti-bot software such as Cloudflare, DataDome, and PerimeterX. DataDome offers a suite of security solutions that use advanced techniques to detect and mitigate automated attacks in real time, protecting the integrity and availability of online assets.

In this article, we'll explore how Playwright can be utilized to bypass DataDome's protective layers.

- TLDR: How to Bypass DataDome with Playwright

- Understanding DataDome

- How DataDome Detects Web Scrapers and Prevents Automated Access

- How to Bypass DataDome

- How to Bypass DataDome With Playwright

- Conclusion

- More Playwright Web Scraping Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

TLDR: How to Bypass DataDome with Playwright

There are several approaches we can take when trying to bypass DataDome with Playwright. Let's try to scrape Leboncoin, which is protected by DataDome.

The code below demonstrates a basic workflow for bypassing DataDome using Playwright together with the ScrapeOps residential proxy.

const playwright = require("playwright");

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY"; // Replace with your ScrapeOps API key

async function main() {

const browser = await playwright.chromium.launch({

headless: true,

proxy: { server: PROXY_SERVER, username: PROXY_USERNAME, password: PROXY_PASSWORD }

});

const page = await browser.newPage();

page.setDefaultTimeout(20000);

await page.goto("https://leboncoin.fr");

await page.waitForTimeout(4000);

await page.screenshot({ path: "leboncoin-proxied.png" });

await browser.close();

}

main();

In the script above:

- First, we import the Playwright library and define the ScrapeOps residential proxy settings: the proxy server, the username, and our API key as the password.

- Then, we define an async function called `main` that launches a headless Chromium browser with the `proxy` option, so every request the browser makes is routed through ScrapeOps.

- Next, we create a new page and set a longer default timeout, since the proxy adds latency.

- After setting the timeout, we navigate to Leboncoin.

- Then, we wait four seconds for the page to load and capture a screenshot.

- Finally, we close the browser.

You should be able to view the Leboncoin homepage.
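Hardcoding the API key in the script, as above, makes it easy to leak when the file is shared or committed. Below is a minimal sketch that builds the same `proxy` object from an environment variable and fails fast if the variable is missing. It assumes the key is stored in `SCRAPEOPS_API_KEY`. That name is our own choice and is not something ScrapeOps requires.

```javascript
// Hypothetical helper: build Playwright's `proxy` launch option for the
// ScrapeOps residential proxy. SCRAPEOPS_API_KEY is an assumed variable name.
function buildProxyConfig(env = process.env) {
  const apiKey = env.SCRAPEOPS_API_KEY;
  if (!apiKey) {
    // fail before launching a browser rather than after a proxy auth error
    throw new Error("SCRAPEOPS_API_KEY is not set");
  }
  return {
    server: "http://residential-proxy.scrapeops.io:8181",
    username: "scrapeops",
    password: apiKey,
  };
}
```

You would then launch with `playwright.chromium.launch({ headless: true, proxy: buildProxyConfig() })`.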

There is no substitute for a good proxy. If you need to bypass any type of anti-bot software, get an API key and get started with the ScrapeOps Proxy API Aggregator today.

Understanding DataDome

Through rigorous security and bot checks, DataDome detects and blocks scrapers from all over the world. It uses numerous techniques to identify non-human traffic and can block even well-disguised automated browsers almost immediately.

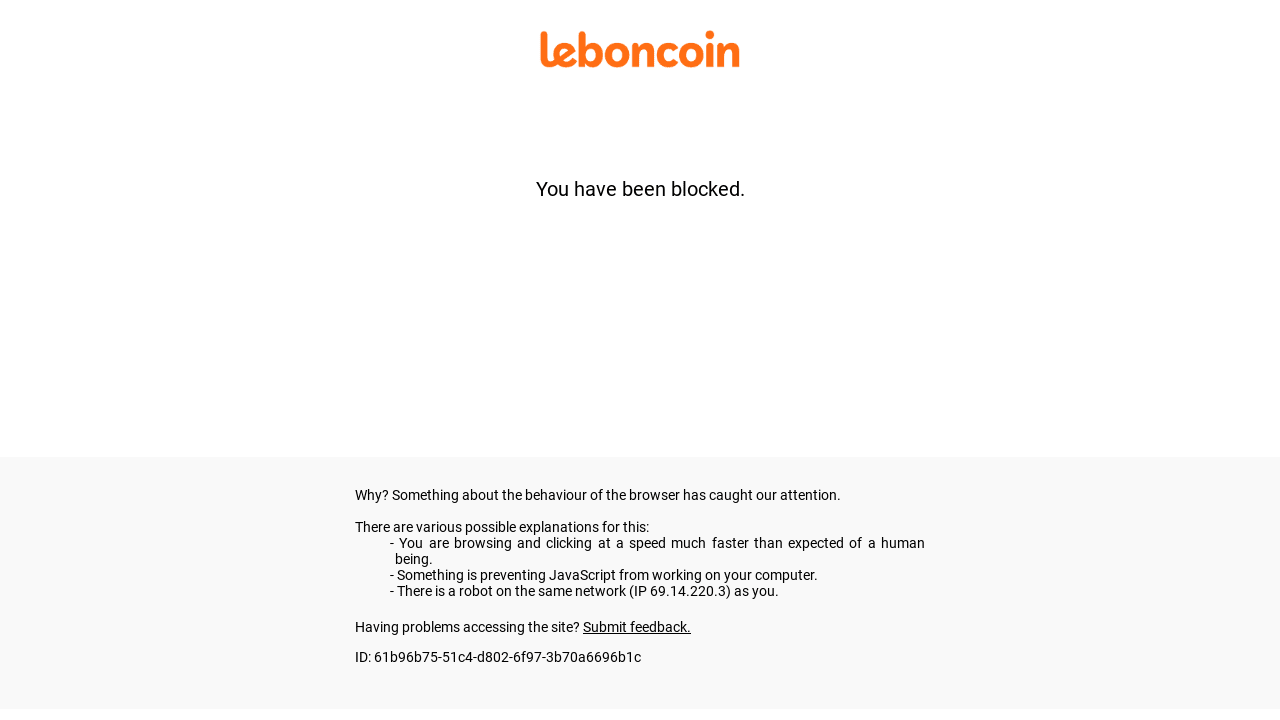

The screenshot above shows an example of getting blocked on Leboncoin, a very popular French e-commerce platform.

As you can see in the example, the site itself lists several of the reasons that we might have been blocked:

- We're browsing faster than a normal human

- JavaScript isn't working

- There has been a bot detected on our local network

DataDome works very well at detecting bots and preventing them from accessing websites. The reasons listed above actually break down into far more detail than you can glimpse from the block screen.

In the next section, we're going to look at their detection methods more in-depth and explain how they work.

How DataDome Detects Web Scrapers and Prevents Automated Access

DataDome employs numerous strategies to detect and ban bots. For starters, each visitor to the site is given what's called a "trust score" and this score is used to determine whether or not the visitor is a human or a bot. While DataDome's official scoring technique is a closely guarded trade secret, we can assume that they employ the following:

Server Side

- Behavioral Analysis: DataDome tracks the visitors to the site, and what they're doing. They probably track how fast we click, whether or not we wait for pages to load, and how quickly we load new pages. If our bot clicks on 100 different items in a minute, it's most likely going to be detected.

- IP Monitoring and Blocking: Along with fingerprinting our browser, they also monitor our IP address. This lets them block not just a specific user, but an entire network or region if bots are detected there. If I use a scraper to access Leboncoin and try too many times, DataDome might block my IP address, which would also stop my normal browser from viewing the site.

- Header Analysis: Whenever we visit a page, our scraper (or our browser) sends requests to a server. When using a legitimate browser, these requests carry headers that include our user agent. For instance, if you are using Chrome, the `User-Agent` header identifies your browser as Chrome. Headers that don't look like a real browser's are an easy giveaway.

- DNS Monitoring: Through DNS and IP reputation checks, DataDome can see whether your IP address is listed as malicious. It can also tell whether you are coming from a residential, mobile, or datacenter IP address. Most sites using DataDome block traffic coming from datacenters, because traffic from a building full of servers is very unlikely to be human.
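Behavioral analysis and IP monitoring both punish the same habit: firing requests as fast as possible from one address. One common mitigation is to pause for a random interval between actions. Another is to back off exponentially, with jitter, after a request fails or looks blocked. Both are sketched below. The delay ranges and retry counts are our own illustrative assumptions, not values DataDome publishes.

```javascript
// Hypothetical pacing helpers; every number here is an illustrative default.

// Random, human-looking pause between actions, in [minMs, maxMs].
function randomDelayMs(minMs, maxMs, rng = Math.random) {
  if (minMs < 0 || maxMs < minMs) {
    throw new RangeError("expected 0 <= minMs <= maxMs");
  }
  return Math.round(minMs + rng() * (maxMs - minMs));
}

// Exponential backoff with "full jitter": the ceiling doubles on each
// attempt (capped at capMs) and the actual delay is random below it.
function backoffDelayMs(attempt, baseMs = 2000, capMs = 60000, rng = Math.random) {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(rng() * ceiling);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run `task`, retrying with backoff when it throws (for example, because a
// page looked blocked). Re-throws the last error once attempts run out.
async function withRetries(task, maxAttempts = 4, sleepFn = sleep) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await task(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts - 1) {
        await sleepFn(backoffDelayMs(attempt));
      }
    }
  }
  throw lastError;
}
```

Inside a Playwright script, `await page.waitForTimeout(randomDelayMs(1500, 4000))` between clicks would replace a fixed wait. Backoff only spaces out retries. If an IP address is already flagged, waiting longer will not necessarily clear it.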

Client Side

- Fingerprinting: In order to track the behavior of a visitor, each visitor (browser) receives its own unique fingerprint. Without fingerprinting, DataDome can't tie our behavior to one specific source (i.e. our browser).

- CAPTCHA Challenges: Because DataDome is so good at what it does, most scrapers are blocked before they would ever need to solve a CAPTCHA, but when it's not sure whether a visitor is human, it can sometimes force the end user to solve a CAPTCHA.

- Session Tracking: This works hand in hand with fingerprinting. The site gives your browser a cookie, and that cookie is then used to track your behavior.
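The session cookie is what ties your behavior together, so it can be useful to check whether DataDome has issued one and how long it will last. The minimal sketch below works on the array returned by Playwright's `context.cookies()`. It assumes the cookie is named `datadome`. Confirm the name in your browser's dev tools for your target site.

```javascript
// Hypothetical helper: summarize the DataDome session cookie, if any.
// `cookies` has the shape returned by Playwright's context.cookies():
// objects with name, value, domain and expires (Unix seconds, -1 = session).
function describeDataDomeSession(cookies, nowSeconds = Date.now() / 1000) {
  // the cookie name "datadome" is an assumption, not a documented contract
  const cookie = cookies.find((c) => c.name.toLowerCase() === "datadome");
  if (!cookie) {
    return { present: false };
  }
  const secondsLeft = cookie.expires === -1 ? Infinity : cookie.expires - nowSeconds;
  return { present: true, domain: cookie.domain, secondsLeft };
}
```

To keep one consistent session between runs, Playwright can save cookies with `await context.storageState({ path: "state.json" })` and restore them with `browser.newContext({ storageState: "state.json" })`. Whether reusing a session helps or hurts depends on whether that session was already flagged.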

How to Bypass DataDome

In order to bypass DataDome, we should use either residential or mobile IP addresses. We also need real browser headers: our headers, including our user agent, should be identical to those of a real browser like Firefox or Chrome. When scraping without a proxy, we should also use fortified browsers, which are far less likely to leak information about our scraper.

Use Residential & Mobile IPs

When scraping, we do not want our IP to show that we're at a datacenter. When you're doing simple scraping tasks at home, this is easy because you already have a residential IP address. When scraping in production, often the scraper runs on a server somewhere which makes this part a little more difficult. The ScrapeOps Proxy Aggregator uses a rotation of the best proxies in the industry. This ensures that we'll always have a decent IP address to work with.
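ScrapeOps does the rotation for you. If you manage your own pool of residential or mobile proxies instead, the rotation itself can be as simple as a round-robin. The sketch below is hypothetical: the `ProxyPool` class is ours, and the endpoints are placeholders rather than real servers.

```javascript
// Hypothetical round-robin rotation over a pool of proxies. Each entry uses
// the { server, username, password } shape of Playwright's `proxy` option.
class ProxyPool {
  constructor(proxies) {
    if (!Array.isArray(proxies) || proxies.length === 0) {
      throw new Error("ProxyPool needs at least one proxy");
    }
    this.proxies = proxies;
    this.index = 0;
  }

  // Return the next proxy and advance, wrapping around at the end.
  next() {
    const proxy = this.proxies[this.index];
    this.index = (this.index + 1) % this.proxies.length;
    return proxy;
  }
}

// Placeholder endpoints for illustration only.
const pool = new ProxyPool([
  { server: "http://proxy-a.example:8000", username: "user", password: "pass" },
  { server: "http://proxy-b.example:8000", username: "user", password: "pass" },
]);
```

Each new browser would then launch with `chromium.launch({ headless: true, proxy: pool.next() })`, so consecutive sessions exit from different IP addresses.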

Rotate Real Browser Headers

It is also a good idea to rotate our browser headers. This means that for one request we could show up as Chrome, and for the next we could show up as Firefox. Rotating our headers makes our scraper far more difficult to fingerprint, because it's always showing up as something different!
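Rotation works best when each identity is internally consistent: the user agent, language, and time zone should all tell the same story. Ideally they should also match the proxy's location. The minimal sketch below bundles each user agent with a matching `locale` and `timezoneId`. Playwright's `locale` context option also sets the `Accept-Language` header and `navigator.language`. The sketch assumes we launch Chromium, so it only rotates Chromium-based user agents. A Firefox user agent on a Chromium engine would contradict the browser's JavaScript fingerprint. The profile values are illustrative, not captured from real traffic.

```javascript
// Hypothetical browser profiles for a Chromium-based scraper.
const BROWSER_PROFILES = [
  {
    userAgent:
      "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    locale: "fr-FR",
    timezoneId: "Europe/Paris",
  },
  {
    userAgent:
      "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    locale: "en-US",
    timezoneId: "America/New_York",
  },
];

// Pick a profile at random for each new browser context.
function pickProfile(rng = Math.random) {
  return BROWSER_PROFILES[Math.floor(rng() * BROWSER_PROFILES.length)];
}

// Options for browser.newContext(); userAgent, locale and timezoneId are
// standard Playwright context options.
function contextOptions(profile) {
  return {
    userAgent: profile.userAgent,
    locale: profile.locale,
    timezoneId: profile.timezoneId,
  };
}
```

A scraper would then call `browser.newContext(contextOptions(pickProfile()))` for each new identity.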

Use Headless Browsers

Automated browsers tend to work best against anti-bot software. Whether we're using Puppeteer, Playwright, or Selenium, each one runs and controls a real browser under the hood. This allows our bot to appear far more human than if we were just making standard HTTP requests. Since we're using an actual browser, most of our headers are handled for us. When we click on a page element (as long as we're not clicking too frequently), our actions appear similar to those of a real human user. Automated browsers are a crucial element when scraping protected websites.

When using a headless browser, always make sure to set a custom user agent. Headless browsers tend to give themselves away with their default user agent.
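Headless Chromium has historically included the token `HeadlessChrome` in its default user agent, which is an easy giveaway. One way to set a custom user agent without hardcoding a stale version number is to take the browser's own string and rewrite that token. This is a sketch. Newer headless modes may not include the token at all, and in that case the string passes through unchanged.

```javascript
// Returns true if a user agent string advertises headless Chromium.
function isHeadlessUserAgent(userAgent) {
  return /HeadlessChrome\//.test(userAgent);
}

// Rewrite "HeadlessChrome/<version>" to "Chrome/<version>", keeping the
// real version number so the UA still matches the browser's engine.
function toHeadfulUserAgent(userAgent) {
  return userAgent.replace(/HeadlessChrome\//g, "Chrome/");
}
```

To use it in a script, read the default string with `await page.evaluate(() => navigator.userAgent)` on a throwaway page. Then pass `toHeadfulUserAgent(ua)` as the `userAgent` option of `browser.newContext()`.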

How to Bypass DataDome with Playwright

DataDome is among the absolute best of anti-bot software. If we can get past DataDome, we can get past pretty much anything out there.

To actually stand a chance against DataDome, we're going to try each of the following methods:

- Fortifying Playwright: With this method, we fortify our browser to make it less likely to leak information.

- Playwright Stealth: Here, we actually configure Playwright to run with the Puppeteer Stealth plugin which uses numerous methods under the hood to hide the fact that we're scraping.

- ScrapeOps Proxy Aggregator: The ScrapeOps Proxy Aggregator uses a rotation of the best proxies in the industry. This allows us to show up at a different IP any time we do anything. With the ScrapeOps Proxy, a scraper is much harder to identify, since it shows up as many different clients from many different locations.

All three of these methods are said to enhance your privacy when scraping and limit your chances of getting detected as a bot. Today, we're going to put that to the test and see which of these methods actually work for getting past DataDome.

Option 1: Fortifying Your Playwright Scraper

While Playwright is a great framework for browser automation, it was actually built for website testing, not scraping. Because of this, it will actually give itself away with browser headers and other information.

Even when we code human actions into our browser (scrolling and clicking), we're not going to appear completely human, because machines simply aren't very good at pretending to be human. Have you ever dealt with a robocall? I'm sure it didn't take long to figure out that you were talking to a robot.
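Scrolling is one of the easier human actions to approximate, even if it won't make a bot fully convincing on its own. Rather than jumping to the bottom of the page in one step, the sketch below breaks a scroll into uneven mouse-wheel steps with short pauses. The step sizes and pause lengths are our own assumptions. `page.mouse.wheel()` and `page.waitForTimeout()` are standard Playwright calls.

```javascript
// Split a scroll of totalPx into uneven steps (200-599px) with pauses
// (300-1199 ms), trimming the last step so the total is exact.
function buildScrollPlan(totalPx, rng = Math.random) {
  const steps = [];
  let scrolled = 0;
  while (scrolled < totalPx) {
    const delta = Math.min(totalPx - scrolled, 200 + Math.floor(rng() * 400));
    const pauseMs = 300 + Math.floor(rng() * 900);
    steps.push({ delta, pauseMs });
    scrolled += delta;
  }
  return steps;
}

// Execute the plan on a Playwright page.
async function humanScroll(page, totalPx) {
  for (const { delta, pauseMs } of buildScrollPlan(totalPx)) {
    await page.mouse.wheel(0, delta);
    await page.waitForTimeout(pauseMs);
  }
}
```

Keeping the plan separate from the Playwright calls makes the randomness easy to inspect and tune without launching a browser.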

While we have the option of running our browser with a head (GUI), we're going to run our browser in headless mode. This is a great way to save resources and when you're running in production (especially on a VPS at a datacenter), running with a GUI is simply not an option.

Here is a code example that attempts to accomplish these actions:

//import playwright

const playwright = require("playwright")

//list of user agents

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36',

'Mozilla/5.0 (iPhone; CPU iPhone OS 14_4_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.0.3 Mobile/15E148 Safari/604.1',

'Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1)',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36 Edg/87.0.664.75',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18363',

];

const randomIndex = Math.floor(Math.random() * userAgents.length);

//async main function

async function main() {

//open a new browser

const browser = await playwright.chromium.launch({headless: true});

//create a new context

const context = await browser.newContext({

userAgent: userAgents[randomIndex]

})

//create a new page inside our context so it uses the chosen user agent

const page = await context.newPage();

//set a tall, portrait-shaped viewport

await page.setViewportSize({width: 1080, height: 1920});

//go to the site

await page.goto("https://whatismybrowser.com");

//wait a few seconds to appear more human

await page.waitForTimeout(5000);

//take a screenshot

await page.screenshot({path: "what-is-fortified.png"});

//close the browser

await browser.close();

}

main();

In the code above, we:

- Create a list of user agents.

- Choose a `randomIndex` from our list.

- Open a browser with `await playwright.chromium.launch({headless: true});`.

- Set a user agent with `browser.newContext()`.

- Create a new page with `context.newPage()`, so the page actually uses the user agent we set on the context.

- Set a portrait viewport with `page.setViewportSize({width: 1080, height: 1920});`. Note that viewport size alone does not make the browser report itself as a mobile device.

- Wait a few seconds with `page.waitForTimeout()`, like a real user would when viewing the page.

- Take a screenshot of the result with `page.screenshot()`.

- Shut the browser down properly with `await browser.close()`.
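Because the user agent list mixes desktop and mobile browsers, the viewport can end up contradicting the user agent (an iPhone user agent in a 1080x1920 window, for example). One possible fix, sketched below, derives the context options from the chosen user agent. The mobile-detection regex and the specific viewport sizes are assumptions. Playwright's built-in `devices` registry (for example `devices["iPhone 13"]`) is a more complete alternative.

```javascript
// Hypothetical helper: choose browser.newContext() options that agree with
// the user agent. viewport, deviceScaleFactor, isMobile and hasTouch are
// standard Playwright context options.
function contextOptionsFor(userAgent) {
  // crude check: iPhone UAs, or Android UAs that include "Mobile"
  const mobile = /iPhone|Android.*Mobile/.test(userAgent);
  if (mobile) {
    return {
      userAgent,
      viewport: { width: 390, height: 844 },
      deviceScaleFactor: 3,
      isMobile: true,
      hasTouch: true,
    };
  }
  return {
    userAgent,
    viewport: { width: 1920, height: 1080 },
    deviceScaleFactor: 1,
    isMobile: false,
    hasTouch: false,
  };
}
```

With this helper, `const context = await browser.newContext(contextOptionsFor(userAgents[randomIndex]));` would replace both the `newContext()` call and the separate `setViewportSize()` call.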

Here is the result from whatismybrowser:

Option 2: Bypass DataDome Using Playwright Stealth

We can also attempt to do all of this using Playwright Stealth. Playwright Stealth is somewhat of a misleading name because we're actually using a Puppeteer Stealth Plugin added to Playwright. First, we need to install Playwright Extra and Puppeteer Extra Stealth.

npm install playwright-extra puppeteer-extra-plugin-stealth

Playwright and Puppeteer actually share a common origin in Google's DevTools. They are similar enough that we can actually configure Playwright to run with the Puppeteer Extra Stealth plugin. Take a look at the code written below.

//import playwright

const {chromium} = require("playwright-extra");

//import stealth

const stealth = require("puppeteer-extra-plugin-stealth")();

//define async function to scrape the data

async function main() {

//set chromium to use the stealth plugin

chromium.use(stealth);

//launch playwright without a head

const browser = await chromium.launch({headless: true});

//open a new browser page

const page = await browser.newPage();

//go to the site

await page.goto("https://whatismybrowser.com");

//wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

await page.screenshot({path: "what-is-stealth.png"});

//close the browser

await browser.close();

}

main();

In this code, we:

- Set Playwright to use the Stealth plugin with `chromium.use(stealth);`.

- Launch a browser with `await chromium.launch({headless: true});`.

- Once again navigate to the site with `page.goto()`.

- Wait 4 seconds with `page.waitForTimeout(4000);`.

- Take our new screenshot with `await page.screenshot({path: "what-is-stealth.png"});`.

Here is the result from whatismybrowser:

Option 3: Bypass DataDome Using ScrapeOps Proxy Aggregator

The ScrapeOps Proxy Aggregator will rotate proxies for us and actually do all the talking to the site's server. With the ScrapeOps Proxy, we can actually use Playwright the way we normally would right out of the box, and we should still get through DataDome with relative ease.

In the example below, we pass the ScrapeOps residential proxy to Playwright through the `proxy` launch option. The only other change is a longer timeout, because we're now relying on two servers.

const playwright = require("playwright");

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY";

async function main() {

const browser = await playwright.chromium.launch({

headless: true,

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

const page = await browser.newPage();

page.setDefaultTimeout(20000);

await page.goto("https://whatismybrowser.com");

await page.waitForTimeout(4000);

await page.screenshot({ path: "what-is-proxied.png" });

await browser.close();

}

main();

Now let's explain the subtle differences in this code from a standard Playwright script:

- The `proxy` option in `playwright.chromium.launch()` routes all of the browser's traffic through the ScrapeOps residential proxy, authenticating with our username and API key.

- `page.setDefaultTimeout(20000);` increases our default timeout to 20 seconds. We need the longer timeout because we are now waiting on two servers.

In a non-proxied situation, the line of communication goes as follows:

- Playwright sends a GET request to the server.

- The server interprets the request.

- The server sends a response back to Playwright.

With a proxy, our line of communication looks more like this:

- Playwright sends a GET request to the proxy server.

- The proxy server interprets the request.

- The proxy server sends a GET request to the actual site.

- Site server interprets the request.

- Site server sends a response to the proxy server.

- The proxy server sends the response back to Playwright.

While there are a bunch of extra steps going on behind the scenes, our top level code for scraping remains largely simple. The ScrapeOps Proxy handles the difficult stuff so we don't have to!
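The browser-level `proxy` option is not the only way to route through ScrapeOps. If you'd prefer to call a proxy API by URL, a hypothetical `getScrapeOpsUrl()` helper can wrap the target URL in a ScrapeOps request. This sketch assumes the endpoint `https://proxy.scrapeops.io/v1/` with `api_key` and `url` query parameters. Check the ScrapeOps documentation before relying on it. In this mode the page's address is the ScrapeOps URL, so relative links on the returned page may resolve against the proxy's domain rather than the site's.

```javascript
// Hypothetical helper: build a ScrapeOps Proxy API URL for a target page.
// The endpoint and parameter names are assumptions; verify them in the
// ScrapeOps docs.
function getScrapeOpsUrl(targetUrl, apiKey) {
  // URLSearchParams percent-encodes the target URL so its own "?" and "&"
  // characters don't leak into the proxy request's query string
  const params = new URLSearchParams({ api_key: apiKey, url: targetUrl });
  return `https://proxy.scrapeops.io/v1/?${params.toString()}`;
}
```

Usage would look like `await page.goto(getScrapeOpsUrl("https://leboncoin.fr", process.env.SCRAPEOPS_API_KEY));`, with the browser launched without the `proxy` option.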

Here is our result from whatismybrowser:

Case Study: Bypassing DataDome on Leboncoin.fr

Leboncoin uses DataDome to protect itself from malicious activity. Since this site uses DataDome, it is a perfect candidate for this case study.

This is the front page of the site when you first get there:

To get the screenshot above, I actually had to complete a CAPTCHA from inside my regular browser. Leboncoin is so suspicious that it thought my normal setup could have been a bot. If any of the methods below can reproduce this screenshot without hitting a CAPTCHA, they will be considered successful.

Any screenshot that doesn't look like the one above will be considered a fail.
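Comparing screenshots by eye works for a case study, but a scraper needs to decide on its own whether it was let through. The rough heuristic below treats a page as blocked if it returns HTTP 403. It also treats a page as blocked if it references `captcha-delivery.com`, the domain we assume DataDome serves its challenges from. Treat it as a starting point and confirm the markers against the block pages you actually receive.

```javascript
// Hypothetical heuristic: does this response look like a DataDome block or
// challenge page rather than the real site? Markers are assumptions.
function looksBlockedByDataDome(status, html) {
  if (status === 403) {
    return true;
  }
  return /captcha-delivery\.com/i.test(html || "");
}

// Usage with Playwright: page.goto() resolves to a Response (or null).
async function checkPage(page, url) {
  const response = await page.goto(url);
  const status = response ? response.status() : 0;
  return looksBlockedByDataDome(status, await page.content());
}
```

Because `checkPage` only calls `page.goto()` and `page.content()`, it can be dropped into any of the scripts in this article after navigation.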

Scraping Leboncoin with a Fortified Browser

This method is not generally recommended, because you will usually get detected sooner or later. We took the time to code it, so let's take the time to run it. Below is our Fortified Browser example, adjusted for the site we want to scrape.

//import playwright

const playwright = require("playwright")

//list of user agents

const userAgents = [

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36',

'Mozilla/5.0 (iPhone; CPU iPhone OS 14_4_2 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.0.3 Mobile/15E148 Safari/604.1',

'Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1)',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36 Edg/87.0.664.75',

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18363',

];

const randomIndex = Math.floor(Math.random() * userAgents.length);

//async main function

async function main() {

//open a new browser

const browser = await playwright.chromium.launch({headless: true});

//create a new context

const context = await browser.newContext({

userAgent: userAgents[randomIndex]

})

//create a new page inside our context so it uses the chosen user agent

const page = await context.newPage();

//set a tall, portrait-shaped viewport

await page.setViewportSize({width: 1080, height: 1920});

//go to the site

await page.goto("https://leboncoin.fr");

//wait a few seconds to appear more human

await page.waitForTimeout(5000);

//take a screenshot

await page.screenshot({path: "leboncoin-fortified.png"});

//close the browser

await browser.close();

}

main();

Here is the result from our fortified browser:

Unsurprisingly, we've been blocked.

Result: Fail

Scraping leboncoin.fr With Puppeteer-extra-plugin-stealth

For this attempt, we'll use the code example from the Playwright Stealth section. While our chances are theoretically better, DataDome and other companies are working tirelessly to combat stealth plugins.

Here is our adjusted code for the Stealth example:

//import playwright

const {chromium} = require("playwright-extra");

//import stealth

const stealth = require("puppeteer-extra-plugin-stealth")();

//define async function to scrape the data

async function main() {

//set chromium to use the stealth plugin

chromium.use(stealth);

//launch playwright without a head

const browser = await chromium.launch({headless: true});

//open a new browser page

const page = await browser.newPage();

//go to the site

await page.goto("https://leboncoin.fr");

//wait 4 seconds so we can view the screen

await page.waitForTimeout(4000);

await page.screenshot({path: "leboncoin-stealth.png"});

//close the browser

await browser.close();

}

main();

Here are the results:

The Stealth plugin succeeded! Don't expect repeated success from this method. Whenever a new Stealth plugin is released, anti-bot companies work tirelessly to detect it.

Result: Success

Scraping leboncoin.fr With ScrapeOps Proxy Aggregator

This attempt will use the ScrapeOps Proxy. We introduced this code in the ScrapeOps Proxy Aggregator section. Here is the proxy code adjusted for the new site:

const playwright = require("playwright");

const PROXY_SERVER = "http://residential-proxy.scrapeops.io:8181";

const PROXY_USERNAME = "scrapeops";

const PROXY_PASSWORD = "YOUR-SCRAPEOPS-RESIDENTIAL-PROXY-API-KEY";

async function main() {

const browser = await playwright.chromium.launch({

headless: true,

proxy: {

server: PROXY_SERVER,

username: PROXY_USERNAME,

password: PROXY_PASSWORD

}

});

const page = await browser.newPage();

page.setDefaultTimeout(20000);

await page.goto("https://leboncoin.fr");

await page.waitForTimeout(4000);

await page.screenshot({ path: "leboncoin-proxied-code3.png" });

await browser.close();

}

main();

Here is the result:

As you can see, we made it to the front page of the site. ScrapeOps Proxy handled this project with ease and we were immediately given access to the site.

Result: Success

Conclusion

In summary, there are many strategies we can try when getting past anti-bot software. While all of them are legitimate and can sometimes meet our needs, there is no substitute for a good proxy.

If you'd like to learn more about Playwright specifically, take a look at the Playwright Documentation.

More Playwright Web Scraping Guides

Want to learn more about scraping but don't know where to start? Check out these Playwright guides: