How to Scrape Google Search With Puppeteer

Data is everything in today's world. Everybody wants data-driven results, and that's why we have search engines to begin with. As the world's most popular search engine, Google is the place that pretty much everyone starts when they look something up.

When you know how to scrape Google Search, you have the foundation to build a super-powered web crawler. Depending on how intricate you make it, you can even turn it into a data miner.

In this extensive guide, we'll take you through how to scrape Google Search Results using Puppeteer.

- TLDR: How to Scrape Google Search with Puppeteer

- How To Architect Our Google Search Scraper

- Understanding How to Scrape Google Search

- Setting Up Our Google Search Scraper

- Building A Google Search Scraper

- Legal and Ethical Considerations

- Conclusion

- More NodeJS Web Scraping Guides

Need help scraping the web?

Then check out ScrapeOps, the complete toolkit for web scraping.

TLDR - How to Scrape Google Search with Puppeteer

When scraping search results, pay attention to the following things:

- We get our results in batches

- Each result is highly nested and uses dynamically generated CSS

- Each result has both a `name` and a `link`

```javascript
const puppeteer = require('puppeteer');
const createCsvWriter = require('csv-writer').createObjectCsvWriter;
const fs = require("fs");

const API_KEY = "YOUR-SUPER-SECRET-API-KEY";
const outputFile = "production.csv";
const fileExists = fs.existsSync(outputFile);

//set up the csv writer, appending if the file already exists
const csvWriter = createCsvWriter({
    path: outputFile,
    header: [
        { id: 'name', title: 'Name' },
        { id: 'link', title: 'Link' },
        { id: 'result_number', title: 'Result Number' },
        { id: 'page', title: 'Page Number' }
    ],
    append: fileExists
});

//convert regular urls into proxied ones
function getScrapeOpsURL(url, location) {
    const params = new URLSearchParams({
        api_key: API_KEY,
        url: url,
        country: location
    });
    return `https://proxy.scrapeops.io/v1/?${params.toString()}`;
}

//scrape page, this is our main logic
async function scrapePage(browser, query, pageNumber, location, retries=3, num=100) {
    let tries = 0;
    while (tries <= retries) {
        const page = await browser.newPage();
        try {
            //encode the query so spaces and symbols like & are url-safe
            const url = `https://www.google.com/search?q=${encodeURIComponent(query)}&start=${pageNumber * num}&num=${num}`;
            const proxyUrl = getScrapeOpsURL(url, location);
            //set a long timeout, sometimes the server takes a while
            await page.goto(proxyUrl, { timeout: 300000 });
            //find the nested divs
            const divs = await page.$$("div > div > div > div > div > div > div > div");
            const scrapeContent = [];
            //local to this call, so concurrent pages don't share one list
            const seenLinks = [];
            let index = 0;
            for (const div of divs) {
                const h3s = await div.$("h3");
                const links = await div.$("a");
                //if we have the required info
                if (h3s && links) {
                    //pull the name
                    const name = await div.$eval("h3", h3 => h3.textContent);
                    //pull the link
                    const linkHref = await div.$eval("a", a => a.href);
                    //filter out proxy links and duplicates
                    if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                        scrapeContent.push({ name: name, link: linkHref, page: pageNumber, result_number: index });
                        seenLinks.push(linkHref);
                        index++;
                    }
                }
            }
            //we failed to get a result, throw an error and attempt a retry
            if (scrapeContent.length === 0) {
                throw new Error(`Failed to scrape page ${pageNumber}`);
            }
            //we have a page result, write it to the csv and exit the function
            await csvWriter.writeRecords(scrapeContent);
            return;
        } catch (err) {
            console.log(`ERROR: ${err}`);
            console.log(`Retries left: ${retries - tries}`);
            tries++;
        } finally {
            //always close the page to free up memory
            await page.close();
        }
    }
    throw new Error(`Max retries reached for page ${pageNumber} after ${tries} attempts`);
}

//function to launch a browser and scrape each page concurrently
async function concurrentScrape(query, totalPages, location, num=10, retries=3) {
    const browser = await puppeteer.launch();
    try {
        const tasks = [];
        for (let i = 0; i < totalPages; i++) {
            tasks.push(scrapePage(browser, query, i, location, retries, num));
        }
        await Promise.all(tasks);
    } finally {
        //close the browser even if one of the pages fails
        await browser.close();
    }
}

//main function
async function main() {
    const queries = ['cool stuff'];
    const location = 'us';
    const totalPages = 3;
    const batchSize = 20;
    const retries = 5;

    console.log('Starting scrape...');
    for (const query of queries) {
        //arguments are positional: JavaScript has no keyword arguments like num=batchSize
        await concurrentScrape(query, totalPages, location, batchSize, retries);
        console.log(`Scrape complete, results saved to: ${outputFile}`);
    }
}

//run the main function and report a failed scrape
main().catch(err => {
    console.log(`Scrape failed: ${err}`);
    process.exitCode = 1;
});
```

The code above gives you a production-ready Google Search scraper.

- Change the `queries` array to search for whatever you'd like.

- Change `location` and `totalPages` (or any of the other constants in the `main()` function) to change your results as well.

- Make sure to replace `"YOUR-SUPER-SECRET-API-KEY"` with your ScrapeOps API key.

How To Architect Our Google Search Scraper

In order to properly scrape Google Search, we need to be able to do the following:

- Perform a query

- Interpret the results

- Repeat steps one and two until we have our desired data

- Store the scraped data

A good Google scraper needs to parse a page and manage pagination. It should also be able to perform tasks concurrently and work with a proxy.

Why does our scraper need these qualities?

- To extract data from a page, we need to parse the HTML.

- To request different pages (batches of data), we need to control our pagination.

- When we scrape pages concurrently, our scraper completes its tasks faster because multiple pages are loading at the same time.

- When we use a proxy, we greatly decrease our chances of getting blocked, and we can choose our location much more reliably because the proxy gives us an IP address in the location we choose.

In this tutorial, we're going to use Puppeteer to perform our searches and interpret the results. We'll use csv-writer and fs for handling the filesystem and storing our data.

These dependencies give us the power to not only extract page data, but also filter and store our data safely and efficiently.

Understanding How To Scrape Google Search

When we scrape Google Search, we need to be able to request a page, extract the data, control our pagination, and deal with geolocation.

In the next few sections, we'll go over how all this works before we dive head first into code.

- We make our requests and extract the data using Puppeteer itself.

- We also control the pagination using the url that we construct.

- In Step 4 below, we look at setting our location inside the url, but in the actual scraper we let the ScrapeOps Proxy handle our location for us.

Step 1: How To Request Google Search Pages

When we perform a Google search, our url comes in a format like this:

https://www.google.com/search?q=${query}

If we want to search for cool stuff, our url would be:

https://www.google.com/search?q=cool+stuff

Here's an example of what this looks like in your browser. We can also attempt to control our batch size with the num parameter.

The num parameter tends to give mixed results, since most normal users stay on the default setting of roughly 10 results. If you choose to use num, exercise caution.

Google does block suspicious traffic, and the num parameter makes you look less human.

Additional parameters are appended to the url with `&`, followed by the parameter name, `=`, and its value. We'll explore these additional parameters in the coming sections.
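If you'd rather not glue the `&` separators together by hand, the built-in `URLSearchParams` class can build the query string and encode spaces and special characters for us. This is a minimal sketch: `buildSearchURL()` is a hypothetical helper name, and `start`/`num` are simply the Google parameters this guide discusses.

```javascript
// Hypothetical helper: build a Google Search url from a query plus optional extra parameters.
function buildSearchURL(query, extraParams = {}) {
    const params = new URLSearchParams({ q: query });
    // every extra parameter is appended as "&name=value"
    for (const [name, value] of Object.entries(extraParams)) {
        params.append(name, String(value));
    }
    return `https://www.google.com/search?${params.toString()}`;
}

console.log(buildSearchURL("cool stuff"));
// https://www.google.com/search?q=cool+stuff
console.log(buildSearchURL("cool stuff", { start: 10, num: 10 }));
// https://www.google.com/search?q=cool+stuff&start=10&num=10
```

`URLSearchParams` encodes a space as `+`, which matches the `cool+stuff` form shown above, and it escapes characters like `&` inside the query so they can't be mistaken for a new parameter.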

Step 2: How To Extract Data From Google Search

When we perform a Google Search, our results come deeply nested in the page's HTML. In order to extract them, we need to parse through the HTML and pull the data from it. Take a look at the image below.

While it's possible to scrape data using CSS class selectors, doing so with Google is a mistake. Google tends to use dynamically generated class names, so if we hard code them and then they change... our scraper will break!

As you can see, the CSS class is basically just a ton of jumbled garbage. We could go ahead and scrape using this CSS class, but it would be a costly mistake.

The CSS classes are dynamically generated and our scraper would more than likely break very quickly. If we're going to build a scraper that can hold up in production, we need to dig deep into the nasty nested layout of this page.

Step 3: How To Control Pagination

As mentioned previously, additional parameters are added to our url with `&`. In the past, Google gave us actual numbered pages. Today, Google gives us all of our results on a single page.

At first glance, this would make our scrape much more difficult. However, our results come in batches, which makes it incredibly simple to simulate pages.

To control which result we start at, we can use the start parameter. If we want to start at result 0, our url would be:

https://www.google.com/search?q=cool+stuff&start=0

When we fetch our results, they come in batches of approximately 10. To fetch the next batch, we would GET:

https://www.google.com/search?q=cool+stuff&start=10

This process repeats until we're satisfied with our results.
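Since each batch holds roughly 10 results, the `start` value for any page is just its page number multiplied by the batch size. The sketch below generates the url for each batch; `pageURLs()` is a hypothetical helper, and the default batch size of 10 is the approximate figure mentioned above rather than a value Google guarantees.

```javascript
// Hypothetical helper: one url per batch, where start = pageNumber * batchSize.
function pageURLs(query, totalPages, batchSize = 10) {
    const urls = [];
    for (let pageNumber = 0; pageNumber < totalPages; pageNumber++) {
        const params = new URLSearchParams({ q: query, start: String(pageNumber * batchSize) });
        urls.push(`https://www.google.com/search?${params.toString()}`);
    }
    return urls;
}

for (const url of pageURLs("cool stuff", 3)) {
    console.log(url);
}
// https://www.google.com/search?q=cool+stuff&start=0
// https://www.google.com/search?q=cool+stuff&start=10
// https://www.google.com/search?q=cool+stuff&start=20
```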

Step 4: Geolocated Data

To request results for a specific country, we can add a country parameter to the url. Google's parameter for this is `gl`, which takes a two-letter country code. If we want to look up cool stuff using Japan as our location, our url would look like this:

https://www.google.com/search?q=cool+stuff&gl=jp

Google still attempts to use our device's location, so the best way to change our location-based results is to use either a VPN or a proxy such as the ScrapeOps Proxy.

When we use a proxy, it's actually very important to set our location with the proxy so we can keep our data consistent. The ScrapeOps Proxy uses rotating IP addresses.

If we don't set our location, our first page of cool stuff could be cool stuff in France, while our second page could be cool stuff in Japan.

Setting Up Our Google Search Scraper

Let's get started building. First, we need to create a new project folder. Then we'll initialize our project. After initializing it, we can go ahead and install our dependencies.

We only have three dependencies: puppeteer for web browsing and parsing HTML, csv-writer to store our data, and fs for basic file operations.

You can start by making a new folder in your file explorer or you can create one from the command line with the command below:

mkdir puppeteer-google-search

Then we need to open a terminal/shell instance inside this folder. You switch into the directory with the following command:

cd puppeteer-google-search

Now, we initialize our project. The command below transforms our new folder into a NodeJS project:

npm init -y

Now to install our dependencies:

npm install puppeteer

npm install csv-writer

We don't need to install fs, because it comes with NodeJS. In our scraper, we simply require it.

Build A Google Search Scraper

Our scraper is actually the very foundation of a powerful crawler. It needs to do the following:

- Perform a query based on the result we want

- Parse the response

- Repeat this process until we have our desired data

- Save the data to a CSV file

Step 1: Create Simple Search Data Parser

Here, we need to create a simple parser. The goal of our parser is simple: read HTML and spit out data.

Here's a parser that gets the page, finds the nested divs, and extracts the link and name in each div.

```javascript
const puppeteer = require("puppeteer");
const createCsvWriter = require("csv-writer").createObjectCsvWriter;
const fs = require("fs");

async function scrapePage(query) {
    //set up our page and browser; encode the query so it is url-safe
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}`;
    const browser = await puppeteer.launch();
    const page = await browser.newPage();
    //go to the site
    await page.goto(url);
    //extract the nested divs
    const divs = await page.$$("div > div > div > div > div > div > div > div");
    const scrapeContent = [];
    const seenLinks = [];
    let index = 0;
    for (const div of divs) {
        const h3s = await div.$("h3");
        const links = await div.$("a");
        //if we have the required info
        if (h3s && links) {
            //pull the name
            const name = await div.$eval("h3", h3 => h3.textContent);
            //pull the link
            const linkHref = await div.$eval("a", a => a.href);
            //filter out bad links
            if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                scrapeContent.push({ name: name, link: linkHref, result_number: index });
                //add the link to our list of seen links
                seenLinks.push(linkHref);
                index++;
            }
        }
    }
    await browser.close();
    return scrapeContent;
}

//main function
async function main() {
    const results = await scrapePage("cool stuff");
    for (const result of results) {
        console.log(result);
    }
}

//run the main function
main();
```

The order of operations here is pretty simple. The complex logic comes when we're parsing through the HTML. Let's explore the parsing logic in detail:

- `scrapeContent` is an array that holds our results to return

- The `seenLinks` array is strictly for holding links we've already scraped

- `index` holds our index on the page

- `const divs = await page.$$("div > div > div > div > div > div > div > div");` finds all of our super nested divs

- Iterate through the divs

- For each div, we:
    - Use `div.$()` to check for the presence of `h3` and `a` elements
    - If these elements are present, we extract them with `div.$eval()`
    - If the links are good and we haven't seen them, we add them to our `scrapeContent`
    - Once they've been scraped, we add them to our `seenLinks` so we don't scrape them again

We need to parse through these nested divs because Google uses dynamically generated CSS class names. Do not hard code CSS class selectors into your Google Scraper. It will break! You don't want to deploy a scraper to production only to find out that it no longer works.
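The filtering rules in that loop don't depend on Puppeteer, so they can be pulled out into a plain function and tested without launching a browser. This sketch assumes each candidate has already been read from the page as a `{ name, link }` object; `filterResults()` is a hypothetical name, and it swaps the `seenLinks` array for a `Set`, which gives the same deduplication with faster lookups.

```javascript
// Hypothetical helper: apply the parsing loop's rules to already-extracted candidates.
function filterResults(candidates, blockedPrefix = "https://proxy.scrapeops.io/") {
    const scrapeContent = [];
    const seenLinks = new Set();
    for (const { name, link } of candidates) {
        // skip entries without both a name and a link
        if (!name || !link) continue;
        // skip proxy links and links we've already kept
        if (link.includes(blockedPrefix) || seenLinks.has(link)) continue;
        // result_number counts only the results we keep, like index in the loop
        scrapeContent.push({ name, link, result_number: scrapeContent.length });
        seenLinks.add(link);
    }
    return scrapeContent;
}

// made-up sample data for illustration
const sample = [
    { name: "Result A", link: "https://example.com/a" },
    { name: "Result A again", link: "https://example.com/a" },
    { name: "Proxy link", link: "https://proxy.scrapeops.io/v1/?url=x" },
    { name: "Result B", link: "https://example.com/b" },
];
console.log(filterResults(sample)); // keeps Result A and Result B, numbered 0 and 1
```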

Step 2: Add Pagination

As mentioned earlier, to add pagination, our url needs to look like this:

https://www.google.com/search?q=cool+stuff&start=0

Our results tend to come in batches of 10, so we'll need to multiply our pageNumber by 10.

Taking pagination into account, our url will now look like this:

https://www.google.com/search?q=${query}&start=${pageNumber * 10}

Here is our code adjusted for pagination.

```javascript
const puppeteer = require("puppeteer");
const createCsvWriter = require("csv-writer").createObjectCsvWriter;
const fs = require("fs");

async function scrapePage(query, pageNumber) {
    //set up our page and browser; start skips ahead in batches of 10 results
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}&start=${pageNumber * 10}`;
    const browser = await puppeteer.launch();
    const page = await browser.newPage();
    //go to the site
    await page.goto(url);
    //extract the nested divs
    const divs = await page.$$("div > div > div > div > div > div > div > div");
    const scrapeContent = [];
    const seenLinks = [];
    let index = 0;
    for (const div of divs) {
        const h3s = await div.$("h3");
        const links = await div.$("a");
        //if we have the required info
        if (h3s && links) {
            //pull the name
            const name = await div.$eval("h3", h3 => h3.textContent);
            //pull the link
            const linkHref = await div.$eval("a", a => a.href);
            //filter out bad links
            if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                scrapeContent.push({ name: name, link: linkHref, page: pageNumber, result_number: index });
                //add the link to our list of seen links
                seenLinks.push(linkHref);
                index++;
            }
        }
    }
    await browser.close();
    return scrapeContent;
}

//main function
async function main() {
    const results = await scrapePage("cool stuff", 0);
    for (const result of results) {
        console.log(result);
    }
}

//run the main function
main();
```

Here are the differences from our first prototype:

- `scrapePage()` now takes two arguments, `query` and `pageNumber`

- Our url includes the `pageNumber` multiplied by our typical batch size (10)

- `const results = await scrapePage("cool stuff", 0)` says we want our results to start at zero

The pageNumber argument is the foundation to everything we'll add in the coming sections. It's really hard for your scraper to organize its tasks and data if it has no idea which page it's on.

Step 3: Storing the Scraped Data

As you've probably noticed, our last two iterations have unused imports, csv-writer and fs. Now it's time to use them. We'll use fs to check the existence of our outputFile and csv-writer to write the results to the actual CSV file.

Pay close attention to fileExists in this section. If our file already exists, we do not want to overwrite it. If it doesn't exist, we need to create a new file. The csvWriter in the code below does exactly this.

Here's our adjusted code:

```javascript
const puppeteer = require("puppeteer");
const createCsvWriter = require("csv-writer").createObjectCsvWriter;
const fs = require("fs");

const outputFile = "add-storage.csv";
const fileExists = fs.existsSync(outputFile);

//set up the csv writer: append if the file already exists, otherwise create it
const csvWriter = createCsvWriter({
    path: outputFile,
    header: [
        { id: 'name', title: 'Name' },
        { id: 'link', title: 'Link' },
        { id: 'result_number', title: 'Result Number' },
        { id: 'page', title: 'Page Number' }
    ],
    append: fileExists
});

async function scrapePage(query, pageNumber) {
    //set up our page and browser
    const url = `https://www.google.com/search?q=${encodeURIComponent(query)}&start=${pageNumber * 10}`;
    const browser = await puppeteer.launch();
    const page = await browser.newPage();
    //go to the site
    await page.goto(url);
    //extract the nested divs
    const divs = await page.$$("div > div > div > div > div > div > div > div");
    const scrapeContent = [];
    const seenLinks = [];
    let index = 0;
    for (const div of divs) {
        const h3s = await div.$("h3");
        const links = await div.$("a");
        //if we have the required info
        if (h3s && links) {
            //pull the name
            const name = await div.$eval("h3", h3 => h3.textContent);
            //pull the link
            const linkHref = await div.$eval("a", a => a.href);
            //filter out bad links
            if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                //keys must match the header ids, so the page number goes under "page"
                scrapeContent.push({ name: name, link: linkHref, page: pageNumber, result_number: index });
                //add the link to our list of seen links
                seenLinks.push(linkHref);
                index++;
            }
        }
    }
    await browser.close();
    //write this batch to the csv as soon as it has been processed
    await csvWriter.writeRecords(scrapeContent);
}

//main function
async function main() {
    console.log("Starting scrape...");
    await scrapePage("cool stuff", 0);
    console.log(`Scrape complete, results saved to: ${outputFile}`);
}

//run the main function
main();
```

Key differences here:

- `fileExists` is a boolean: `true` if our file exists and `false` if it doesn't

- `csvWriter` opens the file in append mode if the file exists; otherwise it creates the file

Instead of returning our results, we write the batch to the outputFile as soon as it has been processed. This helps us write everything we possibly can even in the event of a crash.

Once we're scraping multiple pages at once, if our scraper succeeds on page 1, but fails on page 2 or page 0, we will still have some results that we can review!
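This append-or-create behavior boils down to a simple rule: write the header row only when the file is new, then append rows. Here's a dependency-free sketch of that rule using only `fs`. It is not how `csv-writer` is implemented and it is not its API; `appendRecords()` is a hypothetical helper, and it quotes every field and doubles embedded quotes, which is one common CSV convention. It also looks up each value by header `id`, which is why a record's keys need to match the ids in the header.

```javascript
const fs = require("fs");

const header = [
    { id: "name", title: "Name" },
    { id: "link", title: "Link" },
    { id: "result_number", title: "Result Number" },
    { id: "page", title: "Page Number" },
];

// quote one field: wrap it in double quotes and double any quotes inside it
function toField(value) {
    const text = value === undefined || value === null ? "" : String(value);
    return `"${text.replace(/"/g, '""')}"`;
}

// Hypothetical helper: append records to a csv, writing the header only for a new file.
function appendRecords(path, records) {
    const lines = [];
    if (!fs.existsSync(path)) {
        lines.push(header.map(col => toField(col.title)).join(","));
    }
    for (const record of records) {
        // a record keyed "pageNumber" instead of "page" would leave Page Number blank
        lines.push(header.map(col => toField(record[col.id])).join(","));
    }
    fs.appendFileSync(path, lines.join("\n") + "\n");
}
```

In a scraper like ours, you would call `appendRecords(outputFile, scrapeContent)` once per batch, at the point where `csvWriter.writeRecords(scrapeContent)` is called now.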

Step 4: Adding Concurrency

JavaScript is single-threaded by default, so adding concurrency to our scraper is a little bit tricky, but JavaScript's async support makes it completely doable: while one page is waiting on the network, the others can keep loading. In this section, let's add a concurrentScrape() function. The goal of this function is simple: run the scrapePage() function on multiple pages at the same time.

Since we're dealing with Promise objects, it's a good idea to add some error handling in scrapePage(). We don't want a Promise to resolve with bad results.

The code below adds concurrency and error handling to ensure our scrape completes properly.

```javascript
const puppeteer = require("puppeteer");
const createCsvWriter = require("csv-writer").createObjectCsvWriter;
const fs = require("fs");

const outputFile = "add-concurrency.csv";
const fileExists = fs.existsSync(outputFile);

//set up the csv writer
const csvWriter = createCsvWriter({
    path: outputFile,
    header: [
        { id: 'name', title: 'Name' },
        { id: 'link', title: 'Link' },
        { id: 'result_number', title: 'Result Number' },
        { id: 'page', title: 'Page Number' }
    ],
    append: fileExists
});

async function scrapePage(browser, query, pageNumber, retries=3) {
    let tries = 0;
    while (tries <= retries) {
        const page = await browser.newPage();
        try {
            const url = `https://www.google.com/search?q=${encodeURIComponent(query)}&start=${pageNumber * 10}`;
            //set a long timeout, sometimes the server takes a while
            await page.goto(url, { timeout: 300000 });
            //find the nested divs
            const divs = await page.$$("div > div > div > div > div > div > div > div");
            const scrapeContent = [];
            //local to this call, so concurrent pages don't share one list
            const seenLinks = [];
            let index = 0;
            for (const div of divs) {
                const h3s = await div.$("h3");
                const links = await div.$("a");
                //if we have the required info
                if (h3s && links) {
                    //pull the name
                    const name = await div.$eval("h3", h3 => h3.textContent);
                    //pull the link
                    const linkHref = await div.$eval("a", a => a.href);
                    //filter out bad links
                    if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                        scrapeContent.push({ name: name, link: linkHref, page: pageNumber, result_number: index });
                        seenLinks.push(linkHref);
                        index++;
                    }
                }
            }
            //we failed to get a result, throw an error and attempt a retry
            if (scrapeContent.length === 0) {
                throw new Error(`Failed to scrape page ${pageNumber}`);
            }
            //we have a page result, write it to the csv and exit the function
            await csvWriter.writeRecords(scrapeContent);
            return;
        } catch (err) {
            console.log(`ERROR: ${err}`);
            console.log(`Retries left: ${retries - tries}`);
            tries++;
        } finally {
            //always close the page to free up memory
            await page.close();
        }
    }
    throw new Error(`Max retries reached for page ${pageNumber} after ${tries} attempts`);
}

//scrape multiple pages at once
async function concurrentScrape(query, totalPages) {
    const browser = await puppeteer.launch();
    try {
        const tasks = [];
        for (let i = 0; i < totalPages; i++) {
            tasks.push(scrapePage(browser, query, i));
        }
        await Promise.all(tasks);
    } finally {
        //close the browser even if one of the pages fails
        await browser.close();
    }
}

//main function
async function main() {
    console.log("Starting scrape...");
    await concurrentScrape("cool stuff", 3);
    console.log(`Scrape complete, results saved to: ${outputFile}`);
}

//run the main function and report a failed scrape
main().catch(err => {
    console.log(`Scrape failed: ${err}`);
    process.exitCode = 1;
});
```

There are some major improvements in this version of our script:

- `scrapePage()` now takes our `browser` as an argument, and instead of opening and closing a `browser`, it opens and closes a `page`

- When we attempt to scrape a page, we get three retries (you can change this to any amount you'd like)

- If a scrape doesn't return the data we want, we `throw` an error and retry the scrape

- If we run out of retries, the function crashes entirely and lets the user know which page it failed on

- Once our `try`/`catch` logic has completed, we use `finally` to close the page and free up some memory

- `concurrentScrape()` runs `scrapePage()` on a bunch of separate pages asynchronously to speed up our results
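`Promise.all()` starts every page at once. That's fine for 3 pages, but 100 pages would mean 100 open tabs in one browser. If you want to cap how many pages load at the same time, one option is a small worker pool. This is a sketch, assuming each task is wrapped in a function that returns a Promise; `runWithLimit()` is a hypothetical helper, not part of Node or Puppeteer.

```javascript
// Hypothetical helper: run task functions with at most `limit` of them in flight at once.
async function runWithLimit(taskFns, limit) {
    const results = new Array(taskFns.length);
    let next = 0;

    // each worker keeps taking the next unstarted task until none are left;
    // JavaScript is single-threaded, so next++ is never raced
    async function worker() {
        while (next < taskFns.length) {
            const i = next++;
            results[i] = await taskFns[i]();
        }
    }

    const workers = [];
    for (let w = 0; w < Math.min(limit, taskFns.length); w++) {
        workers.push(worker());
    }
    await Promise.all(workers);
    return results;
}

// usage: six fake tasks that each wait 10ms, with at most 2 running together
let running = 0;
let maxRunning = 0;
const fakeTasks = Array.from({ length: 6 }, (_, i) => async () => {
    running++;
    maxRunning = Math.max(maxRunning, running);
    await new Promise(resolve => setTimeout(resolve, 10));
    running--;
    return i * 10;
});

runWithLimit(fakeTasks, 2).then(results => {
    console.log(results);    // [ 0, 10, 20, 30, 40, 50 ]
    console.log(maxRunning); // 2
});
```

To use it in `concurrentScrape()`, you would push `() => scrapePage(browser, query, i)` instead of calling `scrapePage()` directly, then `await runWithLimit(tasks, 5)`. Because the loop uses `let i`, each arrow function keeps its own page number. Like `Promise.all()`, it rejects as soon as one task throws.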

When scraping at scale, there is always a possibility of either bad data or a failed scrape. Our code needs to be able to take this into account. Basic error handling can take you a really long way.
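The retry loop in `scrapePage()` can also be factored into a small reusable wrapper. This is a sketch, not part of Puppeteer: `withRetries()` is a hypothetical helper that makes up to `retries + 1` attempts, just like the `while (tries <= retries)` loop, and throws once every attempt has failed.

```javascript
// Hypothetical helper: call an async task, retrying until it succeeds or attempts run out.
async function withRetries(task, retries = 3, label = "task") {
    let lastError;
    // tries goes 0..retries, so there are retries + 1 attempts in total
    for (let tries = 0; tries <= retries; tries++) {
        try {
            return await task(tries);
        } catch (err) {
            lastError = err;
            console.log(`ERROR: ${err}`);
            console.log(`Retries left: ${retries - tries}`);
        }
    }
    throw new Error(`Max retries reached for ${label}: ${lastError}`);
}

// usage: a fake task that fails on its first two attempts and then succeeds
withRetries(async attempt => {
    if (attempt < 2) {
        throw new Error(`attempt ${attempt} failed`);
    }
    return "ok";
}, 3, "page 0").then(result => console.log(result)); // "ok", after two logged errors
```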

Step 5: Bypassing Anti-Bots

When scraping the web, we often run into anti-bots. Designed to protect against malicious software, they're a very important part of the web.

While our scraper isn't designed to be malicious, it's much faster than a typical human user, so anti-bots tend to see it as a red flag and block us.

To get around anti-bots, it's imperative to use a good proxy. The ScrapeOps Proxy rotates between the best proxies available, which makes it very likely that we get a result each time we call page.goto(url).

In this section, we'll bring our scraper up to production quality and integrate it with the ScrapeOps proxy.

- We'll create a simple string formatting function, `getScrapeOpsURL()`.

- We'll add our `location` parameter to `scrapePage()` and `concurrentScrape()` as well.

- In this case, we pass our `location` to the ScrapeOps Proxy because they can then route us through an actual server in that location.

Here is our proxied scraper:

```javascript
const puppeteer = require('puppeteer');
const createCsvWriter = require('csv-writer').createObjectCsvWriter;
const fs = require("fs");

const API_KEY = "YOUR-SUPER-SECRET-API-KEY";
const outputFile = "production.csv";
const fileExists = fs.existsSync(outputFile);

//set up the csv writer, appending if the file already exists
const csvWriter = createCsvWriter({
    path: outputFile,
    header: [
        { id: 'name', title: 'Name' },
        { id: 'link', title: 'Link' },
        { id: 'result_number', title: 'Result Number' },
        { id: 'page', title: 'Page Number' }
    ],
    append: fileExists
});

//convert regular urls into proxied ones
function getScrapeOpsURL(url, location) {
    const params = new URLSearchParams({
        api_key: API_KEY,
        url: url,
        country: location
    });
    return `https://proxy.scrapeops.io/v1/?${params.toString()}`;
}

//scrape page, this is our main logic
async function scrapePage(browser, query, pageNumber, location, retries=3, num=100) {
    let tries = 0;
    while (tries <= retries) {
        const page = await browser.newPage();
        try {
            //encode the query so spaces and symbols like & are url-safe
            const url = `https://www.google.com/search?q=${encodeURIComponent(query)}&start=${pageNumber * num}&num=${num}`;
            const proxyUrl = getScrapeOpsURL(url, location);
            //set a long timeout, sometimes the server takes a while
            await page.goto(proxyUrl, { timeout: 300000 });
            //find the nested divs
            const divs = await page.$$("div > div > div > div > div > div > div > div");
            const scrapeContent = [];
            //local to this call, so concurrent pages don't share one list
            const seenLinks = [];
            let index = 0;
            for (const div of divs) {
                const h3s = await div.$("h3");
                const links = await div.$("a");
                //if we have the required info
                if (h3s && links) {
                    //pull the name
                    const name = await div.$eval("h3", h3 => h3.textContent);
                    //pull the link
                    const linkHref = await div.$eval("a", a => a.href);
                    //filter out proxy links and duplicates
                    if (!linkHref.includes("https://proxy.scrapeops.io/") && !seenLinks.includes(linkHref)) {
                        scrapeContent.push({ name: name, link: linkHref, page: pageNumber, result_number: index });
                        seenLinks.push(linkHref);
                        index++;
                    }
                }
            }
            //we failed to get a result, throw an error and attempt a retry
            if (scrapeContent.length === 0) {
                throw new Error(`Failed to scrape page ${pageNumber}`);
            }
            //we have a page result, write it to the csv and exit the function
            await csvWriter.writeRecords(scrapeContent);
            return;
        } catch (err) {
            console.log(`ERROR: ${err}`);
            console.log(`Retries left: ${retries - tries}`);
            tries++;
        } finally {
            //always close the page to free up memory
            await page.close();
        }
    }
    throw new Error(`Max retries reached for page ${pageNumber} after ${tries} attempts`);
}

//function to launch a browser and scrape each page concurrently
async function concurrentScrape(query, totalPages, location, num=10, retries=3) {
    const browser = await puppeteer.launch();
    try {
        const tasks = [];
        for (let i = 0; i < totalPages; i++) {
            tasks.push(scrapePage(browser, query, i, location, retries, num));
        }
        await Promise.all(tasks);
    } finally {
        //close the browser even if one of the pages fails
        await browser.close();
    }
}

//main function
async function main() {
    const queries = ['cool stuff'];
    const location = 'us';
    const totalPages = 3;
    const batchSize = 20;
    const retries = 5;

    console.log('Starting scrape...');
    for (const query of queries) {
        //arguments are positional: JavaScript has no keyword arguments like num=batchSize
        await concurrentScrape(query, totalPages, location, batchSize, retries);
        console.log(`Scrape complete, results saved to: ${outputFile}`);
    }
}

//run the main function and report a failed scrape
main().catch(err => {
    console.log(`Scrape failed: ${err}`);
    process.exitCode = 1;
});
```

Just a few differences here:

- We have a new function, `getScrapeOpsURL()`

- We now pass our `location` into `concurrentScrape()`, `scrapePage()` and `getScrapeOpsURL()`

- When we `page.goto()` a site, we pass the url into `getScrapeOpsURL()` and pass the result into `page.goto()`

- We added in the `num` parameter so we can tell Google how many results we want.
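Because `getScrapeOpsURL()` builds its query string with `URLSearchParams`, the Google url (which has its own `?` and `&`) is percent-encoded into a single `url` parameter instead of leaking extra parameters into the proxy request. The sketch below shows that round trip; `proxied()` is a hypothetical stand-in for `getScrapeOpsURL()` that takes the key as an argument, `PLACEHOLDER-KEY` is not a real key, and nothing here makes a network request.

```javascript
// same idea as getScrapeOpsURL(), with the API key passed in for illustration
function proxied(url, location, apiKey) {
    const params = new URLSearchParams({ api_key: apiKey, url: url, country: location });
    return `https://proxy.scrapeops.io/v1/?${params.toString()}`;
}

const target = "https://www.google.com/search?q=cool%20stuff&start=20&num=20";
const proxyUrl = proxied(target, "us", "PLACEHOLDER-KEY");
console.log(proxyUrl);

// parsing the proxy url back gives us the original target untouched
const parsed = new URL(proxyUrl);
console.log(parsed.searchParams.get("url") === target); // true
console.log(parsed.searchParams.get("start"));          // null: start stays inside the encoded url
```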

Always use `num` with caution. Google sometimes bans suspicious traffic, and the `num` parameter can make your scraper look abnormal. Even if Google chooses not to ban you, it may send you fewer results than you asked for, causing your scraper to miss important data!

Step 6: Production Run

We now have a production-level scraper. To edit input variables, we can simply change the constants in our main() function. Take a look at our main():

```javascript
async function main() {
    const queries = ['cool stuff'];
    const location = 'us';
    const totalPages = 3;
    const batchSize = 20;
    const retries = 5;

    console.log('Starting scrape...');
    for (const query of queries) {
        //arguments are positional: JavaScript has no keyword arguments like num=batchSize
        await concurrentScrape(query, totalPages, location, batchSize, retries);
        console.log(`Scrape complete, results saved to: ${outputFile}`);
    }
}
```

If we want to scrape 100 pages of boring stuff, we'd change query to 'boring stuff' and totalPages to 100. To change the location, simply change the location variable from 'us' to whatever you'd like. I named my production scraper, production.js. I can run it with the node command.

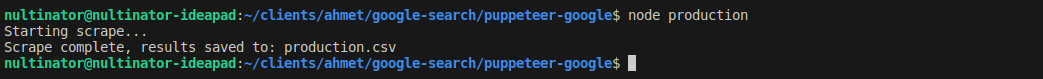

The image below shows both the command to run it and the console ouput. In fact, feel free to change any of the constants declared in main(). That's exactly why they're there! These constants make it easy to tweak our results.

Here's the CSV it spits out:

Legal and Ethical Considerations

When scraping any site, always consult its robots.txt file if you're not sure about something. You can view Google's robots.txt here. If you're scraping as a guest (not logged into any site), the information your scraper sees is generally considered public. If a site requires you to log in, the information you see afterward is considered private. Don't log in with scrapers!!!
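If you want your scraper to check robots.txt before requesting a path, a very rough check is to collect the `Disallow` rules that apply to all user agents and compare them against the path by prefix. This is a naive sketch: `disallowedPrefixes()` and `isDisallowed()` are hypothetical helpers, they ignore `Allow` lines, wildcards, and bot-specific groups, and the sample file below is made up for illustration, not a copy of Google's robots.txt.

```javascript
// Naive sketch: collect the Disallow rules for every user agent ("*")
// and check a path against them by prefix. Allow lines, wildcards and
// bot-specific groups are ignored, so treat the answer as a rough guide only.
function disallowedPrefixes(robotsTxt) {
    const prefixes = [];
    let inStarGroup = false;
    let lastWasAgent = false;
    for (const rawLine of robotsTxt.split("\n")) {
        // drop comments and surrounding whitespace
        const line = rawLine.split("#")[0].trim();
        const colon = line.indexOf(":");
        if (colon === -1) continue;
        const field = line.slice(0, colon).trim().toLowerCase();
        const value = line.slice(colon + 1).trim();
        if (field === "user-agent") {
            // consecutive User-agent lines share one group of rules
            inStarGroup = (lastWasAgent && inStarGroup) || value === "*";
            lastWasAgent = true;
        } else {
            lastWasAgent = false;
            if (field === "disallow" && inStarGroup && value) {
                prefixes.push(value);
            }
        }
    }
    return prefixes;
}

function isDisallowed(robotsTxt, path) {
    return disallowedPrefixes(robotsTxt).some(prefix => path.startsWith(prefix));
}

// a made-up robots.txt for illustration
const sampleRobots = [
    "User-agent: *",
    "Disallow: /search",
    "Disallow: /private/",
    "",
    "User-agent: SomeBot",
    "Disallow: /",
].join("\n");

console.log(isDisallowed(sampleRobots, "/search?q=cool+stuff")); // true
console.log(isDisallowed(sampleRobots, "/about"));               // false
```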

Also, always pay attention to the Terms and Conditions of the site you're scraping. You can view Google's Terms here.

Google does reserve the right to suspend, block, and/or delete your account if you violate their terms. Always check a site's Terms before you attempt to scrape it.

Also, if you turn your Google Scraper into a crawler that scrapes the sites in your results, remember, you are subject to the Terms and Conditions of those sites as well!

Conclusion

You've now built a production-level scraper using NodeJS and Puppeteer. You should have a decent grasp of how to parse HTML and how to save data to a CSV file. You've also learned how to use async and Promise to improve speed and concurrency. Go build something!!!

If you'd like to learn more about the tech stack used in this article, you can find some links below:

More NodeJS Web Scraping Guides

In life, you're never done learning...ever. The same goes for software development! At ScrapeOps, we have a seemingly endless list of learning resources.

If you're in the mood to learn more, check out our extensive NodeJS Puppeteer Web Scraping Playbook or check out some of the articles below: